Real Computer Vision for mobile and embedded

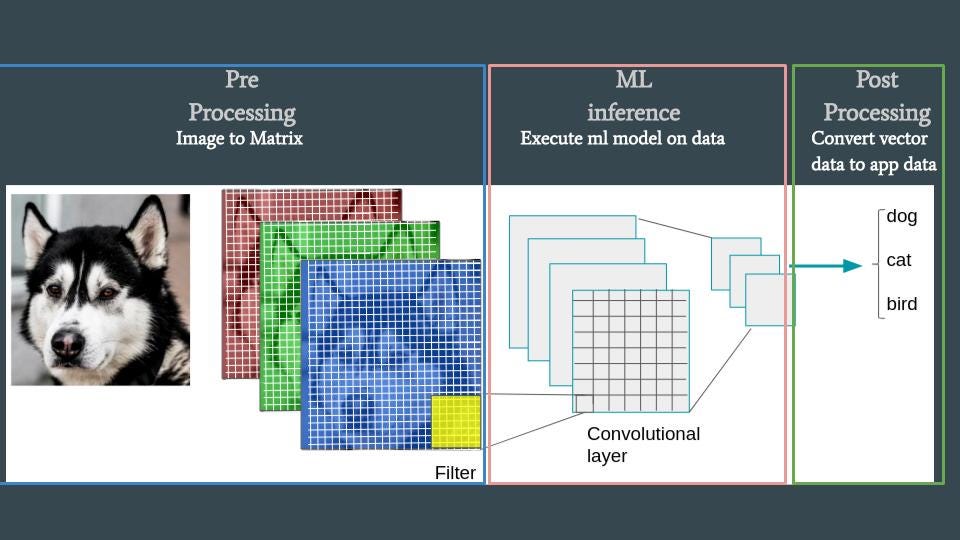

This is the second part of a series of articles about Computer Vision for mobile and embedded devices. Last time I discussed ways to optimize image preprocessing even before you run ML model inference on the device.

In this article I am going to talk about the most crucial step: on-device ML model execution.

What is the right ML tool for mobile?

This is a really good question! The answer can be found by going through the following items:

- Performance: in other words, how often are you going to run your ML model on the device? Some mobile apps (like a photo editor with smart ML effects) use the ML output once per session, while others need to track the ML result once per minute or even once per second. But if we are talking about a Real Computer Vision application, it is a good idea to run the model 10–30 times per second (10–30 FPS), as often as we get a new frame from our video source.

- ML operations (layers) capability: or, which ML framework was used for the server-side model training process. This item is mostly about the compatibility of ML operations (layers) between different training frameworks. In some cases it can be a fairly complicated task to make your server-side trained ML model work in a mobile (embedded) ML environment because necessary operations are missing. This can be a vital factor in choosing a tool.
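To make the performance item concrete, here is a minimal sketch of the arithmetic behind the 10–30 FPS target: the time available per frame is simply 1000 ms divided by the frame rate. The function names and the latency figures in the usage part are hypothetical and only illustrate the check; real numbers have to be measured on the target device.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to process one frame at the given frame rate."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def fits_budget(inference_ms: float, target_fps: float, overhead_ms: float = 0.0) -> bool:
    """True if inference plus pre/post-processing overhead fits in one frame."""
    return inference_ms + overhead_ms <= frame_budget_ms(target_fps)


if __name__ == "__main__":
    for fps in (10, 30):
        print(f"{fps} FPS -> {frame_budget_ms(fps):.1f} ms per frame")
    # Hypothetical measurement: 40 ms inference plus 10 ms of pre/post-processing.
    print(fits_budget(40.0, 10, overhead_ms=10.0))  # fits the 100 ms budget
    print(fits_budget(40.0, 30, overhead_ms=10.0))  # exceeds the ~33 ms budget
```

A model that is comfortable at 10 FPS can easily miss the budget at 30 FPS, which is why the target frame rate should be fixed before a tool is chosen.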

Let’s take a closer look at the two most popular mobile platforms.

iOS

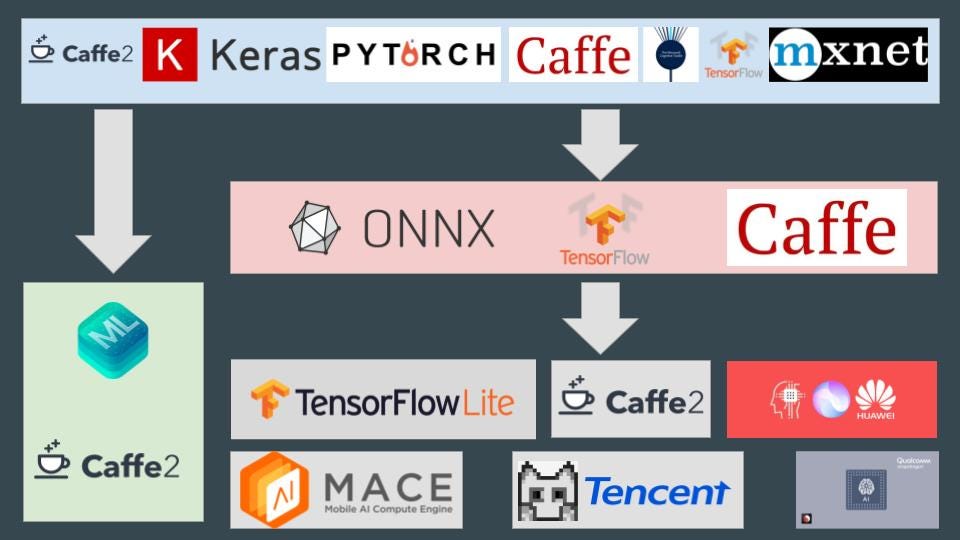

Apple gave iOS developers a brilliant gift: Core ML. But it is not the only solution for this mobile platform. So what options do we have? Let’s check them one by one.

The pure Apple solution is **Core ML**.

- Performance: It delivers high performance through Metal shaders, running directly on the mobile GPU (or on hardware dedicated to ML operations in the latest iPhone models).

- ML operations (layers) capability: Almost all modern server-side ML frameworks have ready-made scripts for converting to the Core ML format. Even if the converter does not support the layers you need, you can write these operations yourself using Metal shaders. But be careful with that! Starting with the **second generation of Core ML**, it is better to use only the layers available “out of the box”, at least for the latest iPhones (iPhone XS, XS Max, and XR). In that case, all operations are executed on the special hardware, which leads to faster performance and lower power consumption. Custom Metal shader operations will bring your ML model **back to the GPU**, and you will not get the advantages of Core ML 2 and above.

- Hardware specifications: Since all iOS phones come from essentially the same hardware vendor, this is a simple topic. We have around 10 specifications that support the same kind of technologies.
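The layer-capability check described above can be sketched as a simple set comparison done before conversion. This is only an illustration: the `BUILTIN_LAYERS` set and the layer names are hypothetical placeholders, not the real list of layers supported by any converter, which has to come from the converter's own documentation.

```python
from typing import Iterable, List, Set, Tuple

# Hypothetical set of layer types a converter handles out of the box.
BUILTIN_LAYERS: Set[str] = {"conv2d", "relu", "max_pool", "dense", "softmax"}


def split_layers(
    model_layers: Iterable[str], builtin: Set[str] = BUILTIN_LAYERS
) -> Tuple[List[str], List[str]]:
    """Split a model's layer types into built-in ones and ones needing custom code."""
    supported: List[str] = []
    custom: List[str] = []
    for layer in dict.fromkeys(model_layers):  # de-duplicate, keep first-seen order
        (supported if layer in builtin else custom).append(layer)
    return supported, custom


if __name__ == "__main__":
    # Hypothetical model: "swish" stands in for a layer the converter lacks.
    layers = ["conv2d", "relu", "conv2d", "swish", "dense", "softmax"]
    supported, custom = split_layers(layers)
    print("built-in:", supported)
    print("needs a custom implementation:", custom)
```

An empty second list means the model can stay on the built-in layers; anything in it would need a custom implementation, with the performance trade-off described above.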

TensorFlow Lite is associated in our minds with Google (Android) technologies, but it can also be a solution for the iOS platform.

The third alternative is Caffe2. Made in Facebook labs, it uses NNPACK and QNNPACK to perform as fast as possible on ARM CPUs.

In terms of usage and performance, Caffe2 is similar to TF Lite but much more flexible in the conversion process. Using ONNX as the intermediate format, you can easily bring your server-side model to the iOS platform.

Android

As I mentioned above, efficient on-device ML inference is quite a hardware-specific task, and that causes a lot of trouble on Android devices. Nowadays there are more than 16 000 Google Play certified device models, and more than 24 000 overall! Each model can have its own hardware as well as software specifications. So the answer to the question of “the right tool” can be application-specific.

Let’s take a look at our options, with a short description of each.

TensorFlow Lite is the Android ML tool most promoted by Google, and there is a set of reasons for that.

The Qualcomm Neural Processing SDK for AI is a brilliant example of excellent developer support by a hardware vendor. Qualcomm provides a set of efficient tools to build the whole ML processing pipeline on the device. I am talking not only about their fast ML libraries but also about tools for digital signal processing, video stream processing, compilation, etc.

HUAWEI HiAI is one more example of a hardware-specific solution. The good thing about this product is that it has an Android Studio plugin that does a lot of the work for you; the bad thing is that this plugin has plenty of bugs. Anyway, the UI tool lets you convert your ML model and generate the Java code to use it.

HiAI targets the Kirin platform by HiSilicon (part of Huawei). A surprising fact for me is that each version of the HiAI library is designed for only one Kirin model: if you add HiAI 2.0 to your app, it will work only with the Kirin 980, and for an older model you have to use an older version of HiAI. I guess this causes trouble when you try to use it in production.

MACE by Xiaomi is a good attempt to create a unified ML solution for ARM-based devices.

Caffe2 can also be an option for Android devices. Its CPU runtime performs well, in many cases better than the CPU runtimes of other frameworks. And as we remember, it is optimized for ARM-based devices, so it should work on almost all Android phones.
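One way to picture the Android situation is a small dispatch table: use a vendor-specific runtime when the device's chipset vendor has one, and fall back to a generic ARM CPU runtime otherwise. The sketch below is an assumption-laden illustration, not code from any of these SDKs: the vendor keys, the string labels, and the `pick_backend` helper are all hypothetical, and how the vendor name is detected on a real device is left out.

```python
from typing import Optional

# Vendor-specific runtimes discussed in this section, keyed by a lowercase
# SoC vendor name. Keys and labels are illustrative, not SDK identifiers.
VENDOR_BACKENDS = {
    "qualcomm": "Qualcomm Neural Processing SDK",
    "hisilicon": "HUAWEI HiAI",
}

# Generic runtime used when no vendor-specific option applies.
CPU_FALLBACK = "ARM CPU runtime (for example Caffe2 or TensorFlow Lite)"


def pick_backend(soc_vendor: Optional[str]) -> str:
    """Return the preferred runtime label for a device's SoC vendor."""
    if not soc_vendor:
        return CPU_FALLBACK
    return VENDOR_BACKENDS.get(soc_vendor.strip().lower(), CPU_FALLBACK)


if __name__ == "__main__":
    for vendor in ("Qualcomm", "HiSilicon", "MediaTek", None):
        print(vendor, "->", pick_backend(vendor))
```

In a real app the table would also need a version dimension, since, as noted for HiAI, a given library version may only work with one chipset model.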

From all of the above, you can see that “the right mobile ML tool” really depends on the needs of your app. It is a good idea to investigate the potential market and find out which mobile hardware and software dominate it before you start your mobile ML project.

In the next article, I am going to talk about the potential outputs of ML models in **Computer Vision** and ways to process them efficiently. **Output post-processing** can be a tricky thing in terms of application performance.
