Not going to lie, Microsoft has been doing some good things in the software development community. I love coding in Visual Studio Code, and ONNX has been great if you want to optimize your deep learning models for production. WSL2, which gives you access to an entire Linux kernel, is exactly what I’ve been wanting, but the lack of CUDA support made it a non-starter for me as an A.I. Engineer.

As an A.I. Engineer and a Content Creator, I need Windows for the tools to create my content, but I need Linux to easily run and train my A.I. software projects. I have a dual boot setup on my machine, but it’s not ideal. I hate that if I’m training a model I can’t access my creation tools. So I have to work synchronously instead of asynchronously.

I know most deep learning libraries support Windows, but getting things working, especially open source A.I. software, was always a headache. I know I can use something like QEMU to run Windows software on Linux, but that requires me to dedicate an entire GPU to the VM, which means my Linux instance can no longer access it.

Here come Microsoft and Nvidia with CUDA WSL2 support! The promise of all Linux tools running natively on Windows would be a dream for my workflow. I immediately jumped on it when they released a preview version. In this post, I will write about my first impressions as well as some benchmarks!

Setting up CUDA on WSL2

Setting up CUDA on WSL2 was super easy for me. Nvidia has really good docs explaining the steps you need to take. I was pleasantly surprised that I did not run into a single error! I can’t remember the last time that happened when setting up software that’s still in beta.

Setting up my Machine Learning Tools

As an A.I. Engineer who spends a lot of time doing deep learning, there are a few tools that I need to make my development experience much better.

- Docker with CUDA support (nvidia-docker)

- PyTorch as my deep learning framework of choice

- Horovod for distributed training

I had never tried WSL2 before, since I dual boot into Linux, so I was pleasantly surprised that I could download and install my tools just like on a normal Ubuntu machine. I had no issues installing any of these packages. It was the Ubuntu experience you would expect.
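If you want a quick sanity check that PyTorch inside WSL2 can actually see the GPU, something like the sketch below should do. The helper name `cuda_report` is my own invention for illustration; it only relies on the standard `torch.cuda` calls and falls back gracefully if PyTorch is not installed.

```python
import importlib.util


def cuda_report():
    """Return a small dict describing whether PyTorch can see a CUDA device.

    `cuda_report` is a hypothetical helper name, not part of PyTorch.
    """
    # Avoid an ImportError on machines where PyTorch is not installed yet.
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False, "cuda_available": False, "devices": []}

    import torch

    available = bool(torch.cuda.is_available())
    devices = []
    if available:
        # Collect the name of every GPU that CUDA exposes to this process.
        devices = [
            torch.cuda.get_device_name(i)
            for i in range(torch.cuda.device_count())
        ]
    return {"torch_installed": True, "cuda_available": available, "devices": devices}


if __name__ == "__main__":
    print(cuda_report())
```

If `cuda_available` comes back `False` inside WSL2, the driver and CUDA setup steps are the first thing I would recheck.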

Training Models

OK now, this is the real test. First I will talk about my experience, and then I’ll present some benchmarks to compare CUDA on WSL2 and bare-metal Linux.

I think a common workflow when training deep learning models, regardless of whether you have your own hardware or train in the cloud, is to keep all of your data on one disk and the operating system on another. WSL2 will automatically detect and mount any disk that Windows 10 recognizes, which was cool, but I ran into issues with file permissions on my mounted data drive.

NOTE: The issue is only on mounted drives and works fine if you do everything within your WSL2 file system.

My training script would error out on random data files because their file permissions were restricted. So I read the WSL docs, and they state that…

When accessing Windows files from WSL the file permissions are either calculated from Windows permissions, or are read from metadata that has been added to the file by WSL. This metadata is not enabled by default.

OK, so WSL2 calculates the file permissions and sometimes it screws up, I guess, so I just need to enable this metadata thingie to get it working, right? Well, sorta… I enabled the metadata and then ran chmod -R 777 on all of my data files as a quick and dirty way to free up the permissions so I could continue training! Well, it worked for a bit… then it broke again with the same permissions error!

So I looked at the permissions, and somehow my changes had been reverted to restricted access. The kicker is that if I checked the file permissions multiple times, they would flip back to my chmod permissions. So the permissions were changing at random, and the only way I would know was by checking them with ls -l. I discovered a weird WSL2 quantum phenomenon that I’ll coin… _Schrödinger’s file permissions_.

The issue stopped when I used chmod 700 to give full read, write, and execute permissions to only the WSL2 user and not to everybody and their mom. This somehow fixed the _Schrödinger’s file permissions_ issue, so I just went on with life.
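If you would rather catch this before a training run dies halfway through, a small pre-flight scan of the data drive can help. This is only a sketch: `find_unreadable` and the `/mnt/d/datasets` path are hypothetical names I made up for illustration, and the scan only tells you what the permissions look like at the moment it runs, so it would not catch permissions that flip later.

```python
import os
import stat
from pathlib import Path

# Hypothetical mount point for the data drive; adjust to your own setup.
DATA_ROOT = "/mnt/d/datasets"


def find_unreadable(root):
    """Yield (path, mode_string) for each file under root the current user cannot read."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                # Same rwx string that `ls -l` prints, e.g. "-rwx------".
                mode = stat.filemode(path.stat().st_mode)
            except OSError:
                mode = "??????????"
            # os.access checks read permission for the current user.
            if not os.access(path, os.R_OK):
                yield str(path), mode


if __name__ == "__main__":
    for path, mode in find_unreadable(DATA_ROOT):
        print(mode, path)
```

Running it right before training starts gives you a list of the files that would trip the data loader, along with the permission string you would otherwise have to dig out with ls -l.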

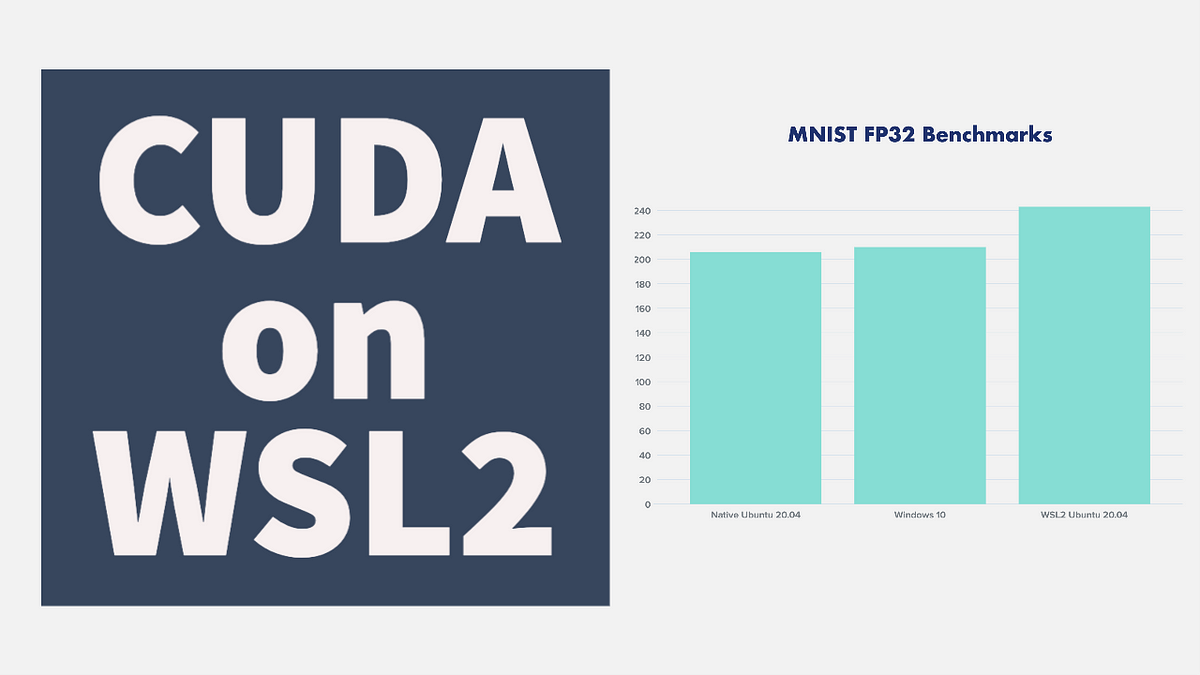

I started training with no issues after that! Everything looked good, the model loss was going down and nothing looked out of the ordinary. I decided to do some benchmarking to compare deep learning training performance of Ubuntu vs WSL2 Ubuntu vs Windows 10.
