If you are familiar with Data Science, you have probably realized that somewhere between Simple Linear Regression and Deep Neural Networks, we grow into Data Scientists.

Linear Regression is a simple yet powerful Machine Learning algorithm that is often used to establish baseline results when predicting future outcomes.

In this article, we’ll cover the following topics:

- Introduction to Linear Regression

- Cost Function

- Applications of Linear Regression

- Implementing Linear Regression with Scikit-Learn

- Pros and Cons

- Summary

Introduction to Linear Regression

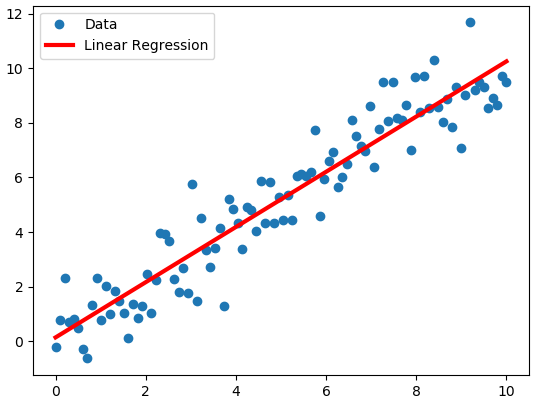

Linear Regression belongs to the family of supervised learning algorithms. Let’s start by understanding regression: it is a predictive modeling technique that investigates the relationship between a dependent variable and one or more independent variables.

The essential idea of Linear Regression is to examine two things:

- Do the independent variables do a good job of predicting the outcome (dependent) variable?

- Which variables in particular are significant predictors of the outcome (dependent) variable?
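One common way to quantify the first question is the coefficient of determination, R², which compares the model’s squared errors against the errors of always predicting the mean of the outcome. The plain-Python sketch below is only an illustration (the function name `r_squared` is our own, not a library API); it does not answer the second question, which usually calls for significance tests on the individual coefficients.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot (illustrative helper)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    mean_y = sum(y_true) / len(y_true)
    # Residual sum of squares: error left over after using the model's predictions
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when y_true is constant")
    return 1 - ss_res / ss_tot


# Perfect predictions give 1.0; always predicting the mean gives 0.0
print(r_squared([1, 2, 3], [1, 2, 3]))  # 1.0
print(r_squared([1, 2, 3], [2, 2, 2]))  # 0.0
```

Values close to 1 suggest the independent variables explain most of the variation in the outcome; values near 0 suggest they do little better than the mean.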

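The simplest form of the regression equation, with one dependent and one independent variable, is defined by the formula:

$$y = mx + b$$

where y is the dependent variable, x is the independent variable, m is the slope of the line, and b is its y-intercept.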
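As a quick illustration, assuming the line takes the familiar form y = m·x + b (slope m, intercept b, the coefficients referred to in the Cost Function section), making a prediction is just a matter of plugging each x into the line. The helper `predict_line` is hypothetical and not part of Scikit-Learn or any other library.

```python
def predict_line(xs, m, b):
    """Apply the simple linear regression equation y = m*x + b to each x."""
    return [m * x + b for x in xs]


# With slope m = 2 and intercept b = 1, each unit increase in x adds 2 to y
print(predict_line([0, 1, 2, 3], m=2, b=1))  # [1, 3, 5, 7]
```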

Cost Function

The Cost Function measures how far a candidate line’s predictions are from the actual values, and the goal is to find the line that minimizes the Sum of Squared Errors (SSE). Let’s look at how the coefficients (m, b) in the equation above relate to the line that minimizes this error. We can see a graphical depiction of these calculations.
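For n data points, the SSE of a line with slope m and intercept b is $\mathrm{SSE}(m, b) = \sum_{i=1}^{n} (y_i - (m x_i + b))^2$. For simple linear regression, minimizing it has a well-known closed-form (ordinary least squares) solution: $m = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sum_i (x_i - \bar{x})^2$ and $b = \bar{y} - m\bar{x}$. The plain-Python sketch below assumes small in-memory lists; the names `sse` and `fit_line` are illustrative only. Scikit-Learn’s `LinearRegression` fits the same ordinary least squares objective.

```python
def sse(xs, ys, m, b):
    """Sum of Squared Errors for the line y = m*x + b."""
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys))


def fit_line(xs, ys):
    """Return (m, b) minimizing the SSE, via the ordinary least squares closed form."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope: covariance of x and y divided by variance of x (the 1/n factors cancel)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    m = sxy / sxx
    # Intercept: the fitted line always passes through (x_mean, y_mean)
    b = y_mean - m * x_mean
    return m, b


xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]  # generated exactly by y = 2x + 1
m, b = fit_line(xs, ys)
print(m, b)                   # 2.0 1.0
print(sse(xs, ys, m, b))      # 0.0
print(sse(xs, ys, 1.5, 2.0))  # 3.75, a worse line has a larger SSE
```

On real, noisy data the minimum SSE will not be zero, but no other choice of (m, b) can produce a smaller value than the one returned by `fit_line`.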
