Web Scraping With Node.js and Puppeteer

Introduction

As you may have seen on my profile, I’m not in charge of the technical side at Koopol, so this post may seem to come out of nowhere.

Why?

In a sentence, Koopol helps brands and resellers automate online price monitoring. By definition, Koopol is an innovative and technical SaaS solution.

I have always been passionate about innovation and technical matters, so I was a bit frustrated about being nowhere in development, I mean up-to-date development ;-).

That’s why I decided to re-open my programming chapter and improve my technical skills, besides my main activities.

As a project owner, I’m convinced that being aware of, understanding, and anticipating the technical challenges the team is facing is crucial for the good development of Koopol.

In this article, I would like to show how easy it can be to start web scraping. Don’t be afraid. Even if you are not a developer, I’m sure many of you are interested in this important subject.

Web scraping is useful in many situations: as soon as you have to copy and paste data from multiple sources, whether you are a business developer, a salesperson, or even a recruiter. The challenge is always similar: gather the relevant data.

Again, keep in mind that this article is written for non-technical people, like me.

That being said, the following lines go through how I taught myself to start web scraping.

Here is an overview of what we will cover:

- Code Editor: Visual Studio Code

- Programming language: JavaScript (Node.js)

- Web Scraping library: the famous Puppeteer

Ready? Let’s start.

Code Editor: Visual Studio Code

In this tutorial, I will use Visual Studio Code. You can download the latest version here: https://code.visualstudio.com/

Here are a few advantages of this editor:

- Terminal: Visual Studio Code has a built-in terminal. It saves you from switching back and forth between the editor and a separate terminal window to run your code.

- Integrated Git: Visual Studio Code integrates with Git to track each change you make to your code. In other words (for beginners), it lets you go back in the code history if you made a mistake and want to revert.

- Automatic saving: Visual Studio Code can save your code automatically (enable Auto Save in the File menu). Thus, if something goes wrong while you are programming, you will get your code back and can keep your focus on what matters.

- Extensions: Visual Studio Code lets you add many extensions thanks to its large developer community. For instance, you can add extensions that highlight your code to help you find your way around.

This blog post does not aim to promote this editor. If you feel more comfortable with another one, please use yours!

Part I — Install the Environment

A. How to install Node.js on a Mac?

To use the Puppeteer library (a JavaScript library), we first need to set up a Node.js environment. Don’t worry, it only takes a few minutes…

Step 1

Open the Terminal

Step 2.a: If you do have Node.js installed

Enter the following command to check which Node.js version is installed.

node -v

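To update npm, the package manager that ships with Node.js, I recommend running the following command (to update Node.js itself, download the latest installer from nodejs.org, as described in Step 2.b):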

npm i -g npm

If you get a lot of checkPermissions warnings, you might have to run the command as a superuser by running:

sudo npm i -g npm

Terminal will probably ask you to type your password, in that case.

Step 2.b: If you do not have Node.js already installed

- Go to nodejs.org and download the latest version for macOS. I recommend the LTS version, the one recommended for most users.

- When the file finishes downloading, double-click the .pkg file to install it.

- Go through the entire installation process.

- When the installation is complete, open the Terminal and enter the command below to verify that Node.js is installed correctly and to check the version.

node -v

If a version is displayed, you are ready for the next part.
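If you prefer to check the version from a script, here is a minimal sketch. The helper name isNodeAtLeast is made up for this example, and the threshold of 18 is only an assumption on my side: check the Puppeteer documentation for the minimum Node.js version required by the release you install.

```javascript
// Hypothetical helper: compare a Node.js version string with a minimum major version.
function isNodeAtLeast(version, minMajor) {
  // "v18.17.0" or "18.17.0" -> 18
  const major = Number.parseInt(version.replace(/^v/, '').split('.')[0], 10);
  return Number.isInteger(major) && major >= minMajor;
}

// Assumption: 18 is only an example threshold, not a value from this tutorial.
const MIN_MAJOR = 18;

if (isNodeAtLeast(process.version, MIN_MAJOR)) {
  console.log(`Node.js ${process.version} looks recent enough.`);
} else {
  console.log(`Node.js ${process.version} is older than v${MIN_MAJOR}, consider updating.`);
}
```

Save it in a file (for example check-node.js) and run it with node check-node.js.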

B. How to install Puppeteer Library?

Puppeteer is a Node.js library that lets you control and automate a Chrome browser, by default in a headless way. OK, that is a bit confusing, so let’s take a moment to explain that part.

Headless Chrome is shipping in Chrome 59. It’s a way to run the Chrome browser in a headless environment. Essentially, running Chrome without chrome! It brings all modern web platform features provided by Chromium and the Blink rendering engine to the command line.

Why is that useful?

A headless browser is a great tool for automated testing and server environments where you don’t need a visible UI shell. For example, you may want to run some tests against a real web page, create a PDF of it, or just inspect how the browser renders an URL.

Source: https://developers.google.com/web/updates/2017/04/headless-chrome

Now you understand the purpose of the Puppeteer library.

So, how do we install it in the right place? Open the Terminal, go to the location you want (e.g. your Desktop), create a dedicated directory for our web scraping project, and move into it:

mkdir project1

cd project1

Now install Puppeteer inside the project1 directory by running the command below:

npm install puppeteer

npm is a package manager that is installed automatically with Node.js. In other words, it manages the Puppeteer installation for you, in the directory you are currently in. Running the command above also downloads a compatible browser build (Chromium at the time of writing) that Puppeteer will drive.
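To double-check that the installation worked, you can ask Node.js whether it can find the package. This is only a small sketch: the helper name isInstalled is invented for this example, and it relies on require.resolve(), which looks for the package starting from the folder of the file you run.

```javascript
// Hypothetical helper: returns true when Node.js can locate the given package.
function isInstalled(packageName) {
  try {
    // require.resolve() throws when the package cannot be found.
    require.resolve(packageName);
    return true;
  } catch (error) {
    return false;
  }
}

console.log('puppeteer installed:', isInstalled('puppeteer'));
```

Save it in project1 (for example as check-install.js) and run it with node check-install.js.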

That’s it.

Now, we can start web scraping.

Part II — Web Scraping

Now, the best part. Let’s start scraping the web. Oh, ok, let’s start on the first page…

Here are our scraping objectives:

- Start puppeteer and go to a specific product page

- Scrape Title, Description, and the Price of the product

Step 1 — Starting

Scraping Permissions

Let’s start with a very simple example: https://www.theslanket.com .

Before scraping, make sure the website is not forbidding it in its robots.txt file. In this case, let’s check: https://www.theslanket.com/robots.txt

# Hello Robots and Crawlers! We're glad you are here, but we would

# prefer you not create hundreds and hundreds of carts.

User-agent: *

Disallow: /cgi-bin/UCEditor

Disallow: /cgi-bin/UCSearch

Disallow: /cgi-bin/UCReviewHelpful

Disallow: /cgi-bin/UCMyAccount

Disallow: /merchant/signup/signup2Save.do

Disallow: /merchant/signup/signupSave.do

Crawl-delay: 5

# Sitemap files

Sitemap: https://www.theslanket.com/sitemapsdotorg_index.xml

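Our product page is not under any of the Disallow paths, so scraping it is not forbidden. Note the Crawl-delay: 5 line, though: it is a common (non-standard) way of asking robots to wait 5 seconds between requests. Let’s go.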
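If you want to do this check in code rather than by eye, here is a simplified sketch. It is not a complete robots.txt parser: it only reads the "User-agent: *" group, treats each Disallow value as a path prefix, and ignores Allow rules and wildcards. The function names parseRobots and isAllowed are made up for this example.

```javascript
// Simplified robots.txt check: only handles "User-agent: *", Disallow prefixes
// and Crawl-delay. Real parsers also support Allow rules and wildcards.
function parseRobots(robotsTxt) {
  const rules = { disallow: [], crawlDelay: null };
  let appliesToUs = false;

  for (const rawLine of robotsTxt.split('\n')) {
    const line = rawLine.split('#')[0].trim(); // drop comments
    if (!line) continue;

    const separator = line.indexOf(':');
    if (separator === -1) continue;
    const field = line.slice(0, separator).trim().toLowerCase();
    const value = line.slice(separator + 1).trim();

    if (field === 'user-agent') {
      appliesToUs = value === '*';
    } else if (appliesToUs && field === 'disallow' && value) {
      rules.disallow.push(value);
    } else if (appliesToUs && field === 'crawl-delay') {
      rules.crawlDelay = Number(value);
    }
  }
  return rules;
}

// A path is allowed when it does not start with any disallowed prefix.
function isAllowed(rules, path) {
  return !rules.disallow.some((prefix) => path.startsWith(prefix));
}

// The rules copied from https://www.theslanket.com/robots.txt shown above.
const robotsTxt = `
# Hello Robots and Crawlers! We're glad you are here, but we would
# prefer you not create hundreds and hundreds of carts.
User-agent: *
Disallow: /cgi-bin/UCEditor
Disallow: /cgi-bin/UCSearch
Disallow: /cgi-bin/UCReviewHelpful
Disallow: /cgi-bin/UCMyAccount
Disallow: /merchant/signup/signup2Save.do
Disallow: /merchant/signup/signupSave.do
Crawl-delay: 5
# Sitemap files
Sitemap: https://www.theslanket.com/sitemapsdotorg_index.xml
`;

const rules = parseRobots(robotsTxt);
console.log(isAllowed(rules, '/shop/the-stroller-slanket/TBS-RUBY-WINE.html')); // true
console.log(isAllowed(rules, '/cgi-bin/UCSearch')); // false
console.log(rules.crawlDelay); // 5
```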

Objectives

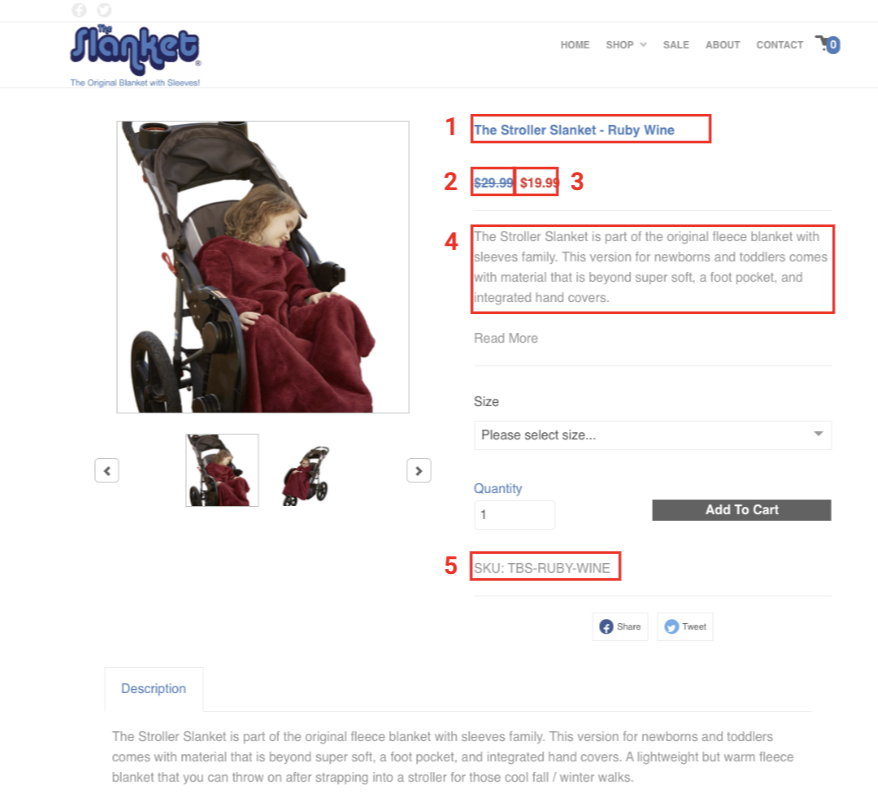

Let’s consider a random product, like https://www.theslanket.com/shop/the-stroller-slanket/TBS-RUBY-WINE.html

I highlighted the 5 elements we will scrape in red:

- ProductTitle

- NormalPrice

- DiscountedPrice

- ShortDescription

- SKU

Step 2 — Scraping

Create a Node.js file

Create a new file and name it SlanketScraping.js. Save it in your project directory, in our case project1.

Create a browser instance

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch()

})()

(Optional) Pass options as an object to puppeteer.launch(). Here, let’s pass 2 options.

headless: this option controls whether Chromium is shown while Puppeteer is browsing. As explained earlier, Puppeteer drives a headless browser by default. But, as a beginner, I recommend starting with a visible browser (headless: false) to see what’s happening and to debug. You can always switch it back to true, and nothing will appear then.

slowMo: the slow-motion option slows down each Puppeteer operation by the given number of milliseconds. It can be used in many situations, but here it lets us see what the browser is doing and avoids hammering the server… We will set it to 250 ms (milliseconds). By default, slowMo is 0 ms, so full speed.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch({

headless: false,

slowMo: 250,

})

})()
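If you get tired of editing these two options by hand, one possible approach is to pick them from a flag. This is just a sketch: the helper name buildLaunchOptions and the DEBUG_SCRAPER environment variable are invented for this example, they are not part of Puppeteer.

```javascript
// Hypothetical helper: choose the launch options depending on a debug flag.
function buildLaunchOptions(debug) {
  if (debug) {
    // Show the browser window and slow every operation down by 250 ms.
    return { headless: false, slowMo: 250 };
  }
  // No window, full speed (slowMo defaults to 0).
  return { headless: true };
}

// Assumption: DEBUG_SCRAPER is a variable name made up for this example.
const options = buildLaunchOptions(process.env.DEBUG_SCRAPER === '1');
console.log(options);

// Later, inside the async function:
// const browser = await puppeteer.launch(options)
```

Running DEBUG_SCRAPER=1 node SlanketScraping.js in the Terminal would then show the browser, while a plain node SlanketScraping.js would stay headless.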

Next, we will use the newPage() method to get the page object. If you work with headless: false, you will see a new tab appear.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch({

headless: false,

slowMo: 250,

})

const page = await browser.newPage()

})()

What you should see

Next, we will pass the URL we want to scrape. To do that, let’s call the goto() method on the page object to load the page.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch({

headless: false,

slowMo: 250,

})

const page = await browser.newPage()

await page.goto('https://www.theslanket.com/shop/the-stroller-slanket/TBS-RUBY-WINE.html')

await browser.close()

})()

Here we launched Puppeteer, went to the specific product page we want to scrape, then closed the browser. At this stage, we haven’t scraped anything yet; we have only been browsing.
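One thing I would add here: if goto() fails (wrong URL, no network), the script stops before closing the browser, and the browser process can stay open. A possible way to avoid that is a try/finally block. The sketch below is my own suggestion, and the helper name withBrowser is hypothetical; it takes the launch function as a parameter so it does not depend on Puppeteer directly.

```javascript
// Hypothetical helper: run a task with a browser and always close it afterwards.
// `launch` is any function returning a browser, e.g. () => puppeteer.launch().
async function withBrowser(launch, task) {
  const browser = await launch();
  try {
    return await task(browser);
  } finally {
    // Runs whether the task succeeded or threw an error.
    await browser.close();
  }
}

// Usage with Puppeteer (shown as comments, not executed here):
// const puppeteer = require('puppeteer');
// withBrowser(() => puppeteer.launch({ headless: false, slowMo: 250 }), async (browser) => {
//   const page = await browser.newPage();
//   await page.goto('https://www.theslanket.com/shop/the-stroller-slanket/TBS-RUBY-WINE.html');
// });
```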

Let’s scrape the 5 elements we described earlier.

Get the page content

Once the page is loaded, we will use the evaluate() method to run a function inside the page and get its content back.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch({

headless: false,

slowMo: 250,

})

const page = await browser.newPage()

await page.goto('https://www.theslanket.com/shop/the-stroller-slanket/TBS-RUBY-WINE.html')

const results = await page.evaluate(() =>{

//... elements to scrape

})

await browser.close()

})()

Inside the evaluate() method, we will target the elements we want to scrape by using specific selectors.

Finding the right selectors can be tricky sometimes. If you need more information about selectors, I recommend reading the following documentation. Trust me, you will need it.

https://developer.mozilla.org/en-US/docs/Web/API/Document/querySelector

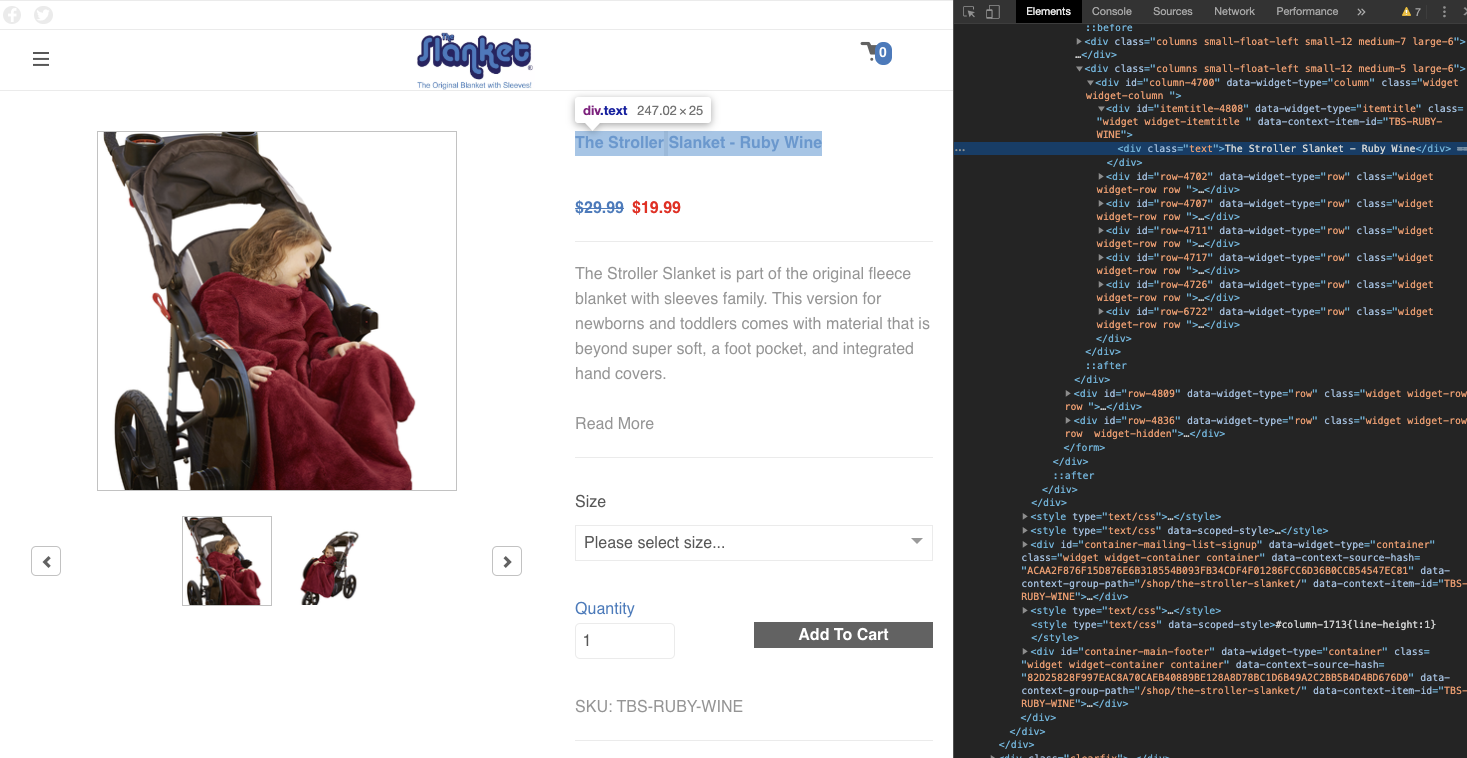

Back to our selector. The best practice I would recommend is to use the Google Chrome developer tools to define and test your selectors. To open them:

- open the targeted URL in Chrome

- Ctrl+click (or right-click) on the element you want to scrape, let’s start with the ProductTitle, and

- select Inspect

A panel called Elements opens on the right side of your page, positioned on the element you clicked on.

Google Chrome > Inspect element by clicking on the title

Here, the title we are looking for, “The Stroller Slanket - Ruby Wine”, is included in the <div class="text"> element.

So, let’s try to select it directly in the Google Chrome Console. Next to the Elements tab, click on the Console tab.

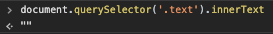

document.querySelector('.text').innerText

The result is empty: “”.

Damn, we failed.

Let’s try the parent div, as follows:

document.querySelector('.widget.widget-itemtitle ').innerText

Here is our title!

So, this selector is working to provide us with the ProductTitle of the product.

Let’s add the selector in our code to see if Puppeteer can scrape it.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch({

headless: false,

slowMo: 250,

})

const page = await browser.newPage()

await page.goto('https://www.theslanket.com/shop/the-stroller-slanket/TBS-RUBY-WINE.html')

const results = await page.evaluate(() =>{

//our new selector

return document.querySelector('.widget.widget-itemtitle ').innerText;

})

//log results at the screen

console.log(results)

await browser.close()

})()

In the Visual Studio Code terminal, from your project1 directory, run:

node SlanketScraping.js

The terminal should print:

The Stroller Slanket - Ruby Wine
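As a side note, Puppeteer also has a page.$eval() method, which combines the selector lookup and the function in one call. Here is a small sketch of how it could be used; the helper name readText is made up for this example. Keep in mind that page.$eval() throws an error when no element matches the selector.

```javascript
// Hypothetical helper: read the trimmed text of the first element matching `selector`.
// page.$eval() runs the callback inside the page on the matched element.
async function readText(page, selector) {
  const text = await page.$eval(selector, (element) => element.innerText);
  return text.trim();
}

// Usage inside the async function of SlanketScraping.js (shown as comments):
// const title = await readText(page, '.widget.widget-itemtitle');
// console.log(title);
```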

Let’s add the other elements following the same method we used to scrape the title. Since we are scraping several elements, we will return an object containing the five elements.

Here are the selectors for the 5 elements:

ProductTitle: document.querySelector('.widget.widget-itemtitle ').innerText,

NormalPrice: document.querySelector('.price').innerText,

DiscountedPrice: document.querySelector('.price.sale').innerText,

ShortDescription: document.querySelector('.widget-itemdescription-excerpt').innerText,

SKU: document.querySelector('.widget.widget-itemsku ').innerText,

Full Code

Here is the full code of our example.

const puppeteer = require('puppeteer');

(async () => {

const browser = await puppeteer.launch({

headless: false,

slowMo: 250,

})

const page = await browser.newPage()

await page.goto('https://www.theslanket.com/shop/the-stroller-slanket/TBS-RUBY-WINE.html')

const results = await page.evaluate(() =>{

return{

ProductTitle: document.querySelector('.widget.widget-itemtitle ').innerText,

NormalPrice: document.querySelector('.price').innerText,

DiscountedPrice: document.querySelector('.price.sale').innerText,

ShortDescription: document.querySelector('.widget-itemdescription-excerpt').innerText,

SKU: document.querySelector('.widget.widget-itemsku ').innerText,

}

})

//log results at the screen

console.log(results)

await browser.close()

})()
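One weakness of the code above: if a single selector matches nothing (for example, a product without a discounted price), querySelector() returns null and reading innerText throws an error, so you get no results at all. Here is a possible variant, as a sketch only, that returns null for the missing elements instead. The helper name scrapeFields is hypothetical; the selectors are the ones from this tutorial, with the trailing spaces removed.

```javascript
// Selectors taken from the tutorial (trailing spaces removed).
const SELECTORS = {
  ProductTitle: '.widget.widget-itemtitle',
  NormalPrice: '.price',
  DiscountedPrice: '.price.sale',
  ShortDescription: '.widget-itemdescription-excerpt',
  SKU: '.widget.widget-itemsku',
};

// Hypothetical helper: returns one value per field, or null when the selector matches nothing.
async function scrapeFields(page, selectors) {
  // The callback runs inside the page, so the selectors are passed as an argument.
  return page.evaluate((fields) => {
    const result = {};
    for (const [name, selector] of Object.entries(fields)) {
      const element = document.querySelector(selector);
      result[name] = element ? element.innerText.trim() : null;
    }
    return result;
  }, selectors);
}

// Usage inside the async function of SlanketScraping.js (shown as comments):
// const results = await scrapeFields(page, SELECTORS);
// console.log(results);
```

Passing the selectors as a second argument to evaluate() matters: the function is executed in the browser, so it cannot see variables defined in your Node.js file unless you hand them over this way.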

About Koopol

This article aimed to present a first, simple scraping exercise using Puppeteer.

In the future, I will publish more “complex” web scraping missions.

I hope you learned a few things and that it will help you develop and improve your web scraping skills.

By the way…

At Koopol we want everybody to be able to scrape. As already said, it can be useful in various projects. So, if it interests you, no matter your current job, never hesitate to drop us an email (info@koopol.com). We will be more than happy to meet you, and who knows, maybe work together?

Scrape safely.
