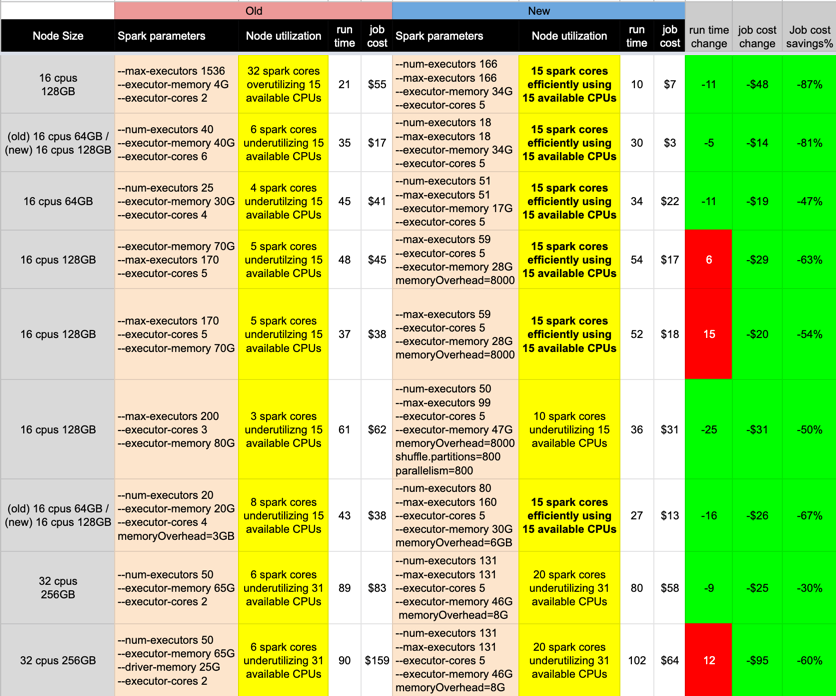

Below is a screenshot highlighting some jobs at Expedia Group™ that were cost tuned using the principles in this guide. I want to stress that no code changes were involved; only the `spark-submit` parameters were changed during the cost tuning process. Pay close attention to the Node utilization column, which is highlighted in yellow.

Cost reductions of Apache Spark jobs achieved by getting the node utilization right — costs are representative

Here you can see how improving the CPU utilization of a node lowered the costs for that job. If we have too many Spark cores competing for node CPUs, time slicing occurs, which slows down our Spark cores and in turn hampers job performance. If we have too few Spark cores using the node CPUs, some of those CPUs sit idle and we waste part of the money spent on that node's time.
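To make the trade-off concrete, here is a minimal Python sketch of how node CPU utilization could be estimated from the executor settings. It assumes utilization is simply the number of Spark cores scheduled on a node divided by the CPUs that node offers. The function names, the 16-CPU node, and the executor layouts are hypothetical values chosen for illustration; they are not taken from the jobs in the screenshot.

```python
def node_cpu_utilization(executors_per_node: int, cores_per_executor: int, node_cpus: int) -> float:
    """Return Spark cores scheduled on a node divided by the CPUs on that node.

    Assumption: utilization is this simple ratio. 1.0 means every node CPU
    is matched by exactly one Spark core.
    """
    if node_cpus <= 0:
        raise ValueError("node_cpus must be positive")
    return executors_per_node * cores_per_executor / node_cpus


def classify(utilization: float) -> str:
    """Label a utilization ratio using the two failure modes described above."""
    if utilization > 1.0:
        return "oversubscribed: Spark cores will be time sliced"
    if utilization < 1.0:
        return "underutilized: some node CPUs are paid for but idle"
    return "fully utilized"


# Hypothetical 16-CPU node with three candidate executor layouts.
for executors, cores in [(3, 5), (4, 4), (5, 4)]:
    ratio = node_cpu_utilization(executors, cores, node_cpus=16)
    print(f"{executors} executors x {cores} cores: {ratio:.0%} -> {classify(ratio)}")
```

In this sketch, the cores-per-executor value corresponds to what you would pass as `--executor-cores` to `spark-submit`. How many executors actually land on each node depends on the cluster manager and on executor memory settings, so treat the executors-per-node figure as an input you would confirm for your own cluster.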

You may also notice that perfect node CPU utilization was not achieved in every case. This will happen at times and is acceptable. Our goal is to improve node CPU utilization every time we cost tune rather than trying to get it perfect.
