Feature selection is a crucial part of the machine learning workflow. How well the features are selected directly affects the model’s performance. Data scientists usually face two pain points:

- Which feature selection algorithm is better?

- How many columns from the input dataset need to be kept?

So I wrote a handy Python library called **_OptimalFlow_**, which includes an ensemble feature selection module called autoFS to simplify this process.

OptimalFlow is an Omni-ensemble Automated Machine Learning toolkit based on the Pipeline Cluster Traversal Experiment (PCTE) approach. It helps data scientists build optimal models easily and automates the machine learning workflow with simple code.

Why use OptimalFlow? You can read its introduction in another story: “An Omni-ensemble Automated Machine Learning — OptimalFlow”.

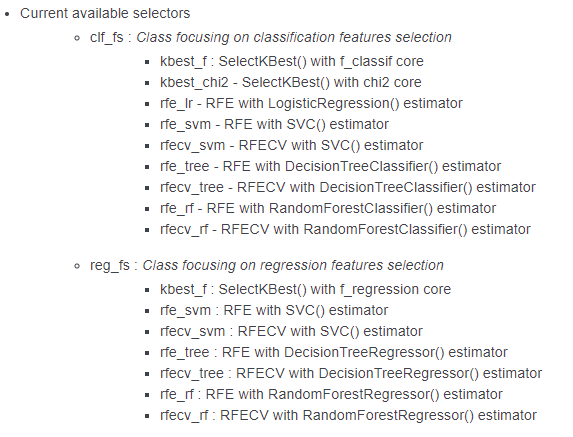

The autoFS module runs popular feature selection algorithms (selectors), such as kBest and RFE, as an ensemble, and keeps the features chosen by the majority of the selectors as the top important features.
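The majority-vote idea can be sketched with scikit-learn. This is not OptimalFlow’s API: the three selectors (`SelectKBest`, `RFE` with a logistic regression, and `SelectFromModel` with a random forest), the hypothetical function name `ensemble_select`, the per-selector feature count `k`, and the strict-majority rule are all assumptions chosen for illustration. The example runs on scikit-learn’s bundled breast cancer dataset.

```python
from collections import Counter

import numpy as np
import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler


def ensemble_select(X: pd.DataFrame, y, k: int = 5):
    """Hypothetical helper: keep the columns picked by a majority of selectors.

    Each selector (an assumed stand-in, not OptimalFlow's defaults) picks
    exactly k columns; a column is kept if more than half of them picked it.
    """
    # Scale once so the logistic regression inside RFE converges cleanly.
    X_scaled = StandardScaler().fit_transform(X)

    selectors = {
        "kBest": SelectKBest(score_func=f_classif, k=k),
        "RFE": RFE(LogisticRegression(max_iter=1000), n_features_to_select=k),
        # threshold=-np.inf makes SelectFromModel keep exactly max_features columns.
        "RandomForest": SelectFromModel(
            RandomForestClassifier(n_estimators=100, random_state=0),
            max_features=k,
            threshold=-np.inf,
        ),
    }

    votes = Counter()
    for selector in selectors.values():
        selector.fit(X_scaled, y)
        # get_support() is a boolean mask over the input columns.
        votes.update(X.columns[selector.get_support()])

    majority = len(selectors) // 2 + 1  # 2 of 3 selectors
    selected = [col for col, n in votes.most_common() if n >= majority]
    return selected, votes


if __name__ == "__main__":
    data = load_breast_cancer(as_frame=True)
    selected, votes = ensemble_select(data.data, data.target, k=5)
    print("Majority-selected features:", selected)
```

In this sketch, the vote threshold rather than a fixed count decides how many columns survive, so raising `k` or lowering `majority` keeps more features.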

You can read the OptimalFlow documentation for details about OptimalFlow’s _autoFS_ module. Besides autoFS, OptimalFlow also provides feature preprocessing, model selection, model assessment, and Pipeline Cluster Traversal Experiments (PCTE) automated machine learning modules.
