Mesos vs. Kubernetes

Originally published at https://www.baeldung.com

1. Overview

In this tutorial, we'll understand the basic need for a container orchestration system.

We'll evaluate the desired characteristics of such a system. From that, we'll try to compare two of the most popular container orchestration systems in use today, Apache Mesos and Kubernetes.

2. Container Orchestration

Before we begin comparing Mesos and Kubernetes, let's spend some time understanding what containers are and why we need container orchestration at all.

2.1. Containers

A container is a standardized unit of software that packages code and all its required dependencies.

Hence, it provides platform independence and operational simplicity. Docker is one of the most popular container platforms in use.

Docker leverages Linux kernel features like CGroups and namespaces to provide isolation of different processes. Therefore, multiple containers can run independently and securely.

It's quite simple to create Docker images; all we need is a Dockerfile:

FROM openjdk:8-jdk-alpine
VOLUME /tmp
COPY target/hello-world-0.0.1-SNAPSHOT.jar app.jar
ENTRYPOINT ["java","-jar","/app.jar"]
EXPOSE 9001

So, these few lines are good enough to create a Docker image of a Spring Boot application using the Docker CLI:

docker build -t hello_world .

2.2. Container Orchestration

So, we've seen how containers can make application deployment reliable and repeatable. But why do we need container orchestration?

Now, while we've got only a few containers to manage, we're fine with the Docker CLI. We can automate some of the simple chores as well. But what happens when we have to manage hundreds of containers?

For instance, think of an architecture with several microservices, all with distinct scalability and availability requirements.

Consequently, things can quickly get out of control, and that's where the benefits of a container orchestration system are realized. A container orchestration system treats a cluster of machines with a multi-container application as a single deployment entity. It provides automation from initial deployment, scheduling, and updates to other features like monitoring, scaling, and failover.

3. Brief Overview of Mesos

Apache Mesos is an open-source cluster manager developed originally at UC Berkeley. It provides applications with APIs for resource management and scheduling across the cluster. Mesos gives us the flexibility to run both containerized and non-containerized workloads in a distributed manner.

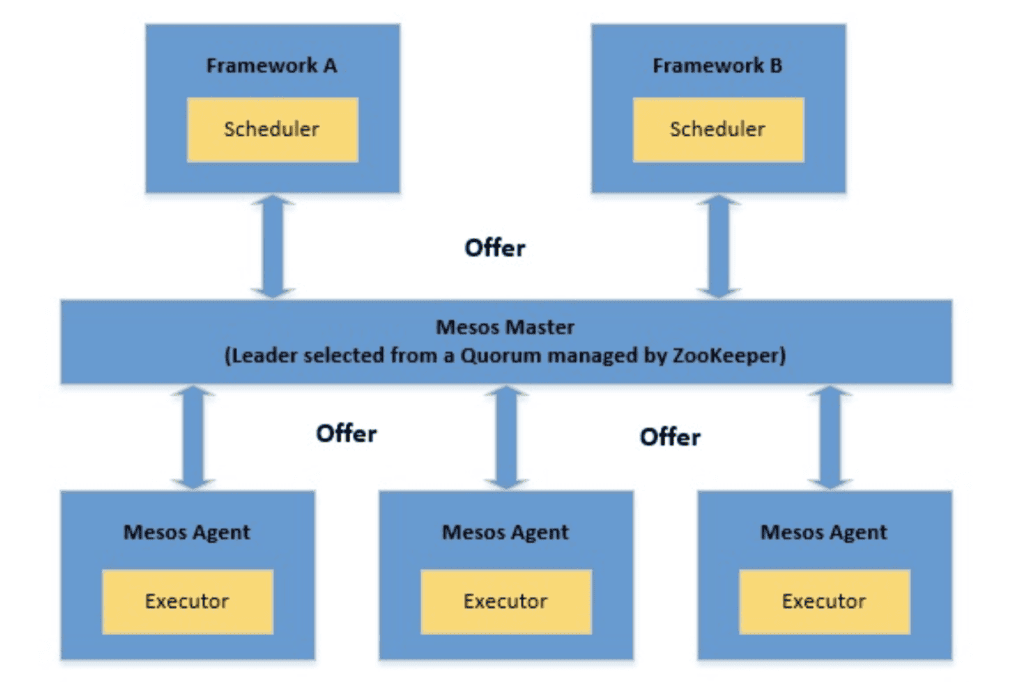

3.1. Architecture

Mesos architecture consists of Mesos Master, Mesos Agent, and Application Frameworks:

Let's understand the components of architecture here:


- Frameworks: These are the actual applications that require distributed execution of tasks or workloads. Typical examples are Hadoop or Storm. Frameworks in Mesos comprise two primary components:

- Scheduler: This is responsible for registering with the Master Node such that the master can start offering resources

- Executor: This is the process which gets launched on the agent nodes to run the framework's tasks

- Mesos Agents: These are responsible for actually running the tasks. Each agent publishes its available resources like CPU and memory to the master. On receiving tasks from the master, they allocate required resources to the framework's executor.

- Mesos Master: This is responsible for scheduling tasks received from the frameworks on one of the available agent nodes. The master makes resource offers to the frameworks, and a framework's scheduler can choose to run tasks on these offered resources.
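To make the resource-offer flow above more concrete, here's a minimal sketch of the scheduler side of it. It's a toy model and not the Mesos API: the names `Offer`, `Task`, and `accept_offers` are hypothetical, and we assume a simple first-fit placement on CPU and memory only.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    """Free resources of one agent, as offered by the master (toy model)."""
    agent: str
    cpus: float
    mem: int  # megabytes


@dataclass
class Task:
    """A unit of work the framework wants to run (toy model)."""
    name: str
    cpus: float
    mem: int  # megabytes


def accept_offers(offers, pending):
    """Place pending tasks on offered resources using first-fit.

    Returns (placements, waiting): placements is a list of
    (task name, agent) pairs; waiting holds the tasks that fit no
    offer and have to wait for a later round of offers.
    """
    # Work on copies so the caller's offers are not modified.
    remaining = [Offer(o.agent, o.cpus, o.mem) for o in offers]
    placements, waiting = [], []
    for task in pending:
        for offer in remaining:
            if offer.cpus >= task.cpus and offer.mem >= task.mem:
                # Consume the resources this task needs from the offer.
                offer.cpus -= task.cpus
                offer.mem -= task.mem
                placements.append((task.name, offer.agent))
                break
        else:
            waiting.append(task)
    return placements, waiting
```

In a real cluster, the executor on the chosen agent would then launch each placed task. The sketch stops at the placement decision.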

3.2. Marathon

As we just saw, Mesos is quite flexible and allows frameworks to schedule and execute tasks through well-defined APIs. However, it's not convenient to implement these primitives directly, especially when we want to schedule custom applications, for instance, when orchestrating applications packaged as containers.

This is where a framework like Marathon can help us. Marathon is a container orchestration framework which runs on Mesos. In this regard, Marathon acts as a framework for the Mesos cluster. Marathon provides several benefits which we typically expect from an orchestration platform, like service discovery, load balancing, metrics, and container management APIs.

Marathon treats a long-running service as an application and an application instance as a task. A typical scenario can have multiple applications with dependencies forming what is called Application Groups.

3.3. Example

So, let's see how we can use Marathon to deploy the simple Docker image we created earlier. Note that installing a Mesos cluster can be a little involved, and hence we can use a more straightforward solution like Mesos Mini. Mesos Mini enables us to spin up a local Mesos cluster in a Docker environment. It includes a Mesos Master, a single Mesos Agent, and Marathon.

Once we have a Mesos cluster with Marathon up and running, we can deploy our container as a long-running application service. All we need is a small JSON application definition:

# hello-marathon.json
{
  "id": "marathon-demo-application",
  "cpus": 1,
  "mem": 128,
  "disk": 0,
  "instances": 1,
  "container": {
    "type": "DOCKER",
    "docker": {
      "image": "hello_world:latest",
      "portMappings": [
        { "containerPort": 9001, "hostPort": 0 }
      ]
    }
  },
  "networks": [
    {
      "mode": "host"
    }
  ]
}

Let's understand what exactly is happening here:

- We have provided an id for our application

- Then, we defined the resource requirements for our application

- We also defined how many instances we'd like to run

- Then, we've provided the container details to launch an app from

- Finally, we've defined the network mode for us to be able to access the application

We can launch this application using the REST APIs provided by Marathon:

curl -X POST \
  http://localhost:8080/v2/apps \
  -d @hello-marathon.json \
  -H "Content-type: application/json"
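If we'd rather script the deployment than call curl by hand, the same request can be put together with Python's standard library. This is only a sketch: the helper name `build_deploy_request` is hypothetical, and we assume Marathon listens on the same local address as in the curl call.

```python
import json
import urllib.request

# Same endpoint as in the curl call; adjust for a non-local Marathon.
MARATHON_APPS_URL = "http://localhost:8080/v2/apps"


def build_deploy_request(app_definition, url=MARATHON_APPS_URL):
    """Build (but do not send) the POST request that submits an app definition."""
    body = json.dumps(app_definition).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the returned object to urllib.request.urlopen would actually send it. The application definition itself can be loaded with json.load, provided the file contains only the JSON object.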

4. Brief Overview of Kubernetes

Kubernetes is an open-source container orchestration system initially developed by Google. It's now part of the Cloud Native Computing Foundation (CNCF). It provides a platform for automating deployment, scaling, and operations of application containers across a cluster of hosts.

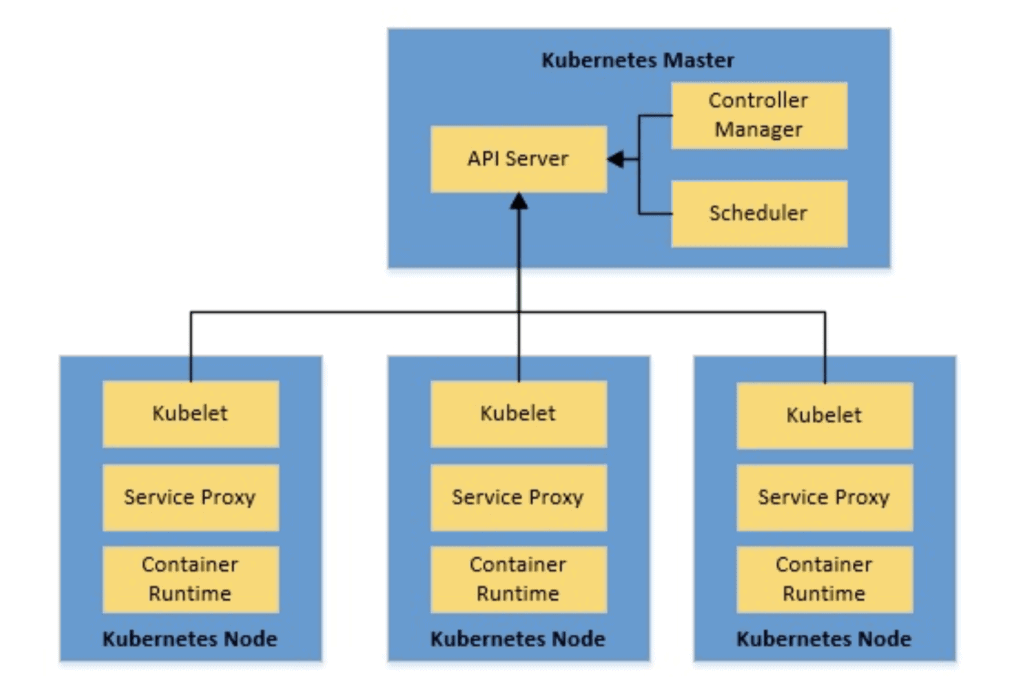

4.1. Architecture

Kubernetes architecture consists of a Kubernetes Master and Kubernetes Nodes:

Let's go through the major parts of this high-level architecture:


- Kubernetes Master: The master is responsible for maintaining the desired state of the cluster. It manages all nodes in the cluster. As we can see, the master is a collection of three processes:

- kube-apiserver: This is the service that manages the entire cluster, including processing REST operations, validating and updating Kubernetes objects, performing authentication and authorization

- kube-controller-manager: This is the daemon that embeds the core control loops shipped with Kubernetes, making the necessary changes to move the current state of the cluster toward the desired state

- kube-scheduler: This service watches for unscheduled pods and binds them to nodes depending upon requested resources and other constraints

- Kubernetes Nodes: The nodes in a Kubernetes cluster are the machines that run our containers. Each node contains the necessary services to run the containers:

- kubelet: This is the primary node agent which ensures that the containers described in PodSpecs provided by kube-apiserver are running and healthy

- kube-proxy: This is the network proxy that runs on each node and performs simple TCP, UDP, and SCTP stream forwarding or round-robin forwarding across a set of backends

- container runtime: This is the runtime in which the containers inside the pods are run. There are several possible container runtimes for Kubernetes, the most widely used being the Docker runtime
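The idea of matching the current state to the desired state, which the master's control loops implement, can be sketched in a few lines. This is a toy illustration and not Kubernetes code: the function name `reconcile` and the action tuples are hypothetical, and we only consider a replica count.

```python
def reconcile(desired_replicas, running_pods):
    """One pass of a toy control loop.

    Compares the desired replica count with the pods currently running
    and returns the actions needed to close the gap.
    """
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: ask for the missing ones to be created.
        return [("create", None)] * diff
    if diff < 0:
        # Too many pods: delete the surplus (which ones is arbitrary here).
        return [("delete", name) for name in running_pods[:-diff]]
    return []  # current state already matches the desired state
```

A real controller runs such a pass continuously, so the cluster converges again whenever a pod dies or the desired state changes.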

4.2. Kubernetes Objects

In the last section, we saw several Kubernetes objects which are persistent entities in the Kubernetes system. They reflect the state of the cluster at any point in time.

Let's discuss some of the commonly used Kubernetes objects:

- Pods: A Pod is the basic unit of execution in Kubernetes and can consist of one or more containers. The containers inside a Pod are deployed on the same host

- Deployment: A Deployment is the recommended way to deploy pods in Kubernetes. It provides features like continuously reconciling the current state of pods with the desired state

- Services: Services in Kubernetes provide an abstract way to expose a group of pods, where the grouping is based on selectors targeting pod labels
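To see how a selector groups pods by their labels, here's a small sketch of equality-based matching. The function name `select_pods` and the plain-dictionary representation of pods are hypothetical simplifications, not the Kubernetes API.

```python
def select_pods(pods, selector):
    """Return the names of pods whose labels contain every selector pair.

    pods maps a pod name to its label dictionary; selector is a
    dictionary of label keys and the values they must have.
    """
    return [
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in selector.items())
    ]
```

A pod may carry additional labels beyond those in the selector and still match. Only the pairs named in the selector are checked.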

There are several other Kubernetes objects which serve the purpose of running containers in a distributed manner effectively.

4.3. Example

So, now we can try to launch our Docker container into a Kubernetes cluster. Kubernetes provides Minikube, a tool that runs a single-node Kubernetes cluster on a virtual machine. We'll also need kubectl, the Kubernetes command-line interface, to work with the Kubernetes cluster.

Once we have kubectl and Minikube installed, we can deploy our container on the single-node Kubernetes cluster within Minikube. We need to define the basic Kubernetes objects in a YAML file:

# hello-kubernetes.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world
spec:
  replicas: 1
  # apps/v1 requires a selector that matches the pod template labels
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
      - name: hello-world
        image: hello_world:latest
        ports:
        - containerPort: 9001
---
apiVersion: v1
kind: Service
metadata:
  name: hello-world-service
spec:
  selector:
    app: hello-world
  type: LoadBalancer
  ports:
  - port: 9001
    targetPort: 9001

A detailed analysis of this definition file is not possible here, but let's go through the highlights:

- We have defined a Deployment with labels in the selector

- We define the number of replicas we need for this deployment

- Also, we've provided the container image details as a template for the deployment

- We've also defined a Service with appropriate selector

- We've defined the nature of the service as LoadBalancer

Finally, we can deploy the container and create all defined Kubernetes objects through kubectl:

kubectl apply -f yaml/hello-kubernetes.yaml

5. Mesos vs. Kubernetes

Now, we've gone through enough context and also performed a basic deployment on both Marathon and Kubernetes. We can attempt to understand where they stand compared to each other.

Just a caveat though, it's not entirely fair to compare Kubernetes with Mesos directly. Most of the container orchestration features that we seek are provided by one of the Mesos frameworks like Marathon. Hence, to keep things in the right perspective, we'll attempt to compare Kubernetes with Marathon and not directly Mesos.

We'll compare these orchestration systems based on some of the desired properties of such a system.

5.1. Supported Workloads

Mesos is designed to handle diverse types of workloads, which can be containerized or even non-containerized. It depends upon the framework we use. As we've seen, it's quite easy to support containerized workloads in Mesos using a framework like Marathon.

Kubernetes, on the other hand, works exclusively with containerized workloads. Most widely, we use it with Docker containers, but it has support for other container runtimes like rkt. In the future, Kubernetes may support more types of workloads.

5.2. Support for Scalability

Marathon supports scaling through the application definition or the user interface. Autoscaling is also supported in Marathon. We can also scale Application Groups which automatically scales all the dependencies.

As we saw earlier, the Pod is the fundamental unit of execution in Kubernetes. Pods can be scaled when managed by a Deployment, which is why pods are almost invariably defined through a Deployment. The scaling can be manual or automated.
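For automated scaling, it helps to see how a target replica count can be derived from a metric. The sketch below follows the ratio rule documented for the Kubernetes Horizontal Pod Autoscaler, in a simplified form that ignores tolerances and stabilization. The function name and the default bounds are assumptions made for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count by the ratio of observed to target metric.

    The result is rounded up and clamped to the allowed range. The
    default bounds are illustrative values, not Kubernetes defaults.
    """
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

For example, with 2 replicas averaging 90% CPU against a 50% target, the rule asks for 4 replicas.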

5.3. Handling High Availability

Application instances in Marathon are distributed across Mesos agents providing high availability. Typically a Mesos cluster consists of multiple agents. Additionally, ZooKeeper provides high availability to the Mesos cluster through quorum and leader election.
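The quorum mentioned above is a strict majority of the ensemble, which determines how many members can fail before the cluster loses its coordinator. A minimal sketch of that arithmetic, with hypothetical function names:

```python
def quorum_size(ensemble_size):
    """Smallest number of members that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size):
    """Members that can fail while a quorum is still reachable."""
    return ensemble_size - quorum_size(ensemble_size)
```

This is why ensembles are usually sized with an odd number of members: going from 3 to 4 members raises the quorum from 2 to 3, but still tolerates only one failure.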

Similarly, pods in Kubernetes are replicated across multiple nodes, providing high availability. Typically, a Kubernetes cluster consists of multiple worker nodes. Moreover, the cluster can also have multiple masters. Hence, a Kubernetes cluster is capable of providing high availability to containers.

5.4. Service Discovery and Load Balancing

Mesos-DNS can provide service discovery and a basic load balancing for applications. Mesos-DNS generates an SRV record for each Mesos task and translates them to the IP address and port of the machine running the task. For Marathon applications, we can also use Marathon-lb to provide port-based discovery using HAProxy.
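As an illustration of what service discovery through Mesos-DNS looks like from a client's point of view, here's a sketch that builds the DNS names for a task. It assumes the naming scheme described in the Mesos-DNS documentation, with "marathon" as the framework name and "mesos" as the domain. The function name is hypothetical, and the actual names depend on how Mesos-DNS is configured.

```python
def mesos_dns_names(task, framework="marathon", domain="mesos", protocol="tcp"):
    """Return the (A record, SRV record) names for a task.

    Assumes the task.framework.domain naming scheme; the framework and
    domain defaults here are assumptions about the cluster setup.
    """
    a_record = f"{task}.{framework}.{domain}"
    srv_record = f"_{task}._{protocol}.{framework}.{domain}"
    return a_record, srv_record
```

Under these assumptions, our Marathon application would resolve as marathon-demo-application.marathon.mesos, with the SRV lookup additionally returning the port.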

A Deployment in Kubernetes creates and destroys pods dynamically. Hence, we generally expose pods in Kubernetes through a Service, which provides service discovery. A Service in Kubernetes acts as a dispatcher to the pods and hence provides load balancing as well.

5.5. Performing Upgrades and Rollback

Changes to application definitions in Marathon are handled as deployments. A deployment supports start, stop, upgrade, or scale of applications. Marathon also supports rolling starts to deploy newer versions of the applications. However, rolling back is not as straightforward and typically requires the deployment of an updated definition.

A Deployment in Kubernetes supports upgrade as well as rollback. We can provide the strategy a Deployment should follow while replacing old pods with new ones. Typical strategies are Recreate or Rolling Update. A Deployment's rollout history is maintained by default in Kubernetes, which makes it trivial to roll back to a previous revision.
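To illustrate what a rolling strategy does, here's a toy simulation that replaces old pods with new ones in batches. It's a deliberately simplified sketch: the function name is hypothetical, the `max_unavailable` parameter only loosely mirrors the Kubernetes setting of a similar name, and readiness checks and surge capacity are ignored.

```python
def rolling_update(old_pods, new_version, max_unavailable=1):
    """Yield the pod versions after each step of a rolling update.

    At most max_unavailable pods are replaced per step, so the rest
    keep serving traffic while the update progresses.
    """
    if max_unavailable < 1:
        raise ValueError("max_unavailable must be at least 1")
    pods = list(old_pods)
    for start in range(0, len(pods), max_unavailable):
        end = min(start + max_unavailable, len(pods))
        for i in range(start, end):
            pods[i] = new_version
        yield list(pods)  # snapshot of the cluster after this step
```

Setting the batch size to the total number of pods roughly corresponds to the Recreate strategy, where all old pods are replaced in a single step.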

5.6. Logging and Monitoring

Mesos has a diagnostic utility which scans all the cluster components and makes available data related to health and other metrics. The data can be queried and aggregated through available APIs. We can collect much of this data using an external tool like Prometheus.

Kubernetes publishes detailed information related to different objects as resource metrics or full metrics pipelines. Typical practice is to deploy an external tool like ELK or Prometheus+Grafana on the Kubernetes cluster. Such tools can ingest cluster metrics and present them in a much more user-friendly way.

5.7. Storage

Mesos has persistent local volumes for stateful applications. We can only create persistent volumes from the reserved resources. It can also support external storage with some limitations. Mesos has experimental support for the Container Storage Interface (CSI), a common set of APIs between storage vendors and container orchestration platforms.

Kubernetes offers multiple types of persistent volumes for stateful containers. This includes storage like iSCSI and NFS. Moreover, it supports external storage from cloud providers like AWS and GCP as well. The Volume object in Kubernetes supports this concept and comes in a variety of types, including CSI.

5.8. Networking

The container runtime in Mesos offers two types of networking support: IP-per-container and network-port-mapping. Mesos defines a common interface to specify and retrieve networking information for a container. Marathon applications can define a network in host mode or bridge mode.

Networking in Kubernetes assigns a unique IP to each pod. This negates the need to map container ports to the host port. It further defines how these pods can talk to each other across nodes. This is implemented in Kubernetes by network plugins like Cilium and Contiv.

6. When to use What?

Finally, in a comparison, we usually expect a clear verdict! However, it's not entirely fair to declare one technology better than another regardless of context. As we've seen, both Kubernetes and Mesos are powerful systems and offer quite competitive features.

Performance, however, is quite a crucial aspect. A Kubernetes cluster can scale to 5,000 nodes, while Marathon on a Mesos cluster is known to support up to 10,000 agents. In most practical cases, we'll not be dealing with such large clusters.

Finally, it boils down to the flexibility and types of workloads that we have. If we're starting afresh and we only plan to use containerized workloads, Kubernetes can offer a quicker solution. However, if we have existing workloads, which are a mix of containers and non-containers, Mesos with Marathon can be a better choice.

7. Other Alternatives

Kubernetes and Apache Mesos are quite powerful, but they are not the only systems in this space. There are several promising alternatives available to us. While we'll not go into their details, let's quickly list a few of them:

- Docker Swarm: Docker Swarm is an open-source clustering and scheduling tool for Docker containers. It comes with a command-line utility to manage a cluster of Docker hosts. It's restricted to Docker containers, unlike Kubernetes and Mesos.

- Nomad: Nomad is a flexible workload orchestrator from HashiCorp to manage any containerized or non-containerized application. Nomad enables declarative infrastructure-as-code for deploying applications such as Docker containers.

- OpenShift: OpenShift is a container platform from Red Hat, orchestrated and managed by Kubernetes underneath. OpenShift offers many features on top of what Kubernetes provides, like an integrated image registry, a source-to-image build, and a native networking solution, to name a few.

8. Conclusion

To sum up, in this tutorial, we discussed containers and container orchestration systems. We briefly went through two of the most widely used container orchestration systems, Kubernetes and Apache Mesos. We also compared these systems based on several features. Finally, we saw some of the other alternatives in this space.

Before closing, we must understand that the purpose of such a comparison is to provide data and facts. It is in no way meant to declare one better than the other, as that normally depends on the use case. So, we must apply the context of our problem in determining the best solution for us.
