It has been a long time since my last post, so this time I will make up for it with a long, technical post with examples. Let's start with a short introduction to Kubernetes (K8s):

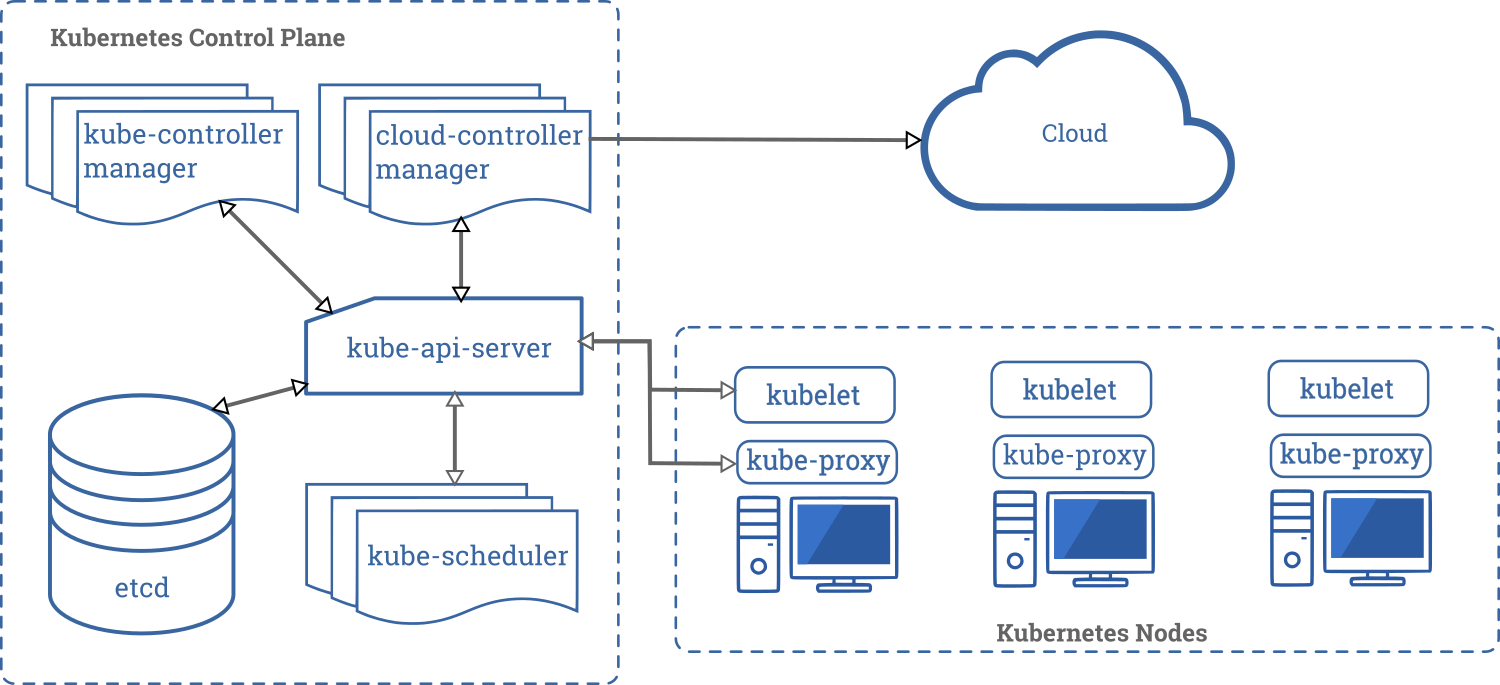

Kubernetes Architecture

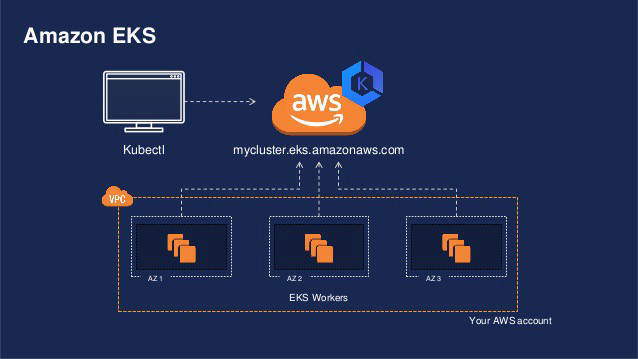

Elastic Kubernetes Service, aka EKS, is Amazon’s implementation of Kubernetes in the AWS cloud.

A Kubernetes cluster consists of the components that represent the “control plane” and a set of machines called “nodes”.

The worker nodes host the Pods that are the components of the application workload. The control plane manages the worker nodes and the Pods in the cluster.

(In production environments, the control plane usually runs across multiple machines and a cluster usually runs multiple nodes, providing fault tolerance and high availability.)

The diagram above gives a simplified picture of this architecture.

EKS

As mentioned, EKS is Amazon’s implementation of Kubernetes in the AWS cloud. The difference between EKS and a standard Kubernetes cluster (what you get as a service from most other cloud providers, or what you install yourself from the ground up) is that you don’t really “own” the control plane. Instead, you pay a small per-cluster fee (usually minor compared to what you spend on EC2 nodes) and AWS manages the control plane for you.

There are multiple ways to set up an EKS cluster: through the web console, with eksctl, with Terraform, and so on. Whichever method you use, this is what happens behind the scenes when you create a cluster:

- a control plane is created

- an auto scaling group is created with its min/max/desired sizes set, and EC2 instances are launched into it from a pre-built image that joins them to the control plane
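To make those two steps concrete, here is a minimal Python sketch that assembles the kind of configuration eksctl works from: the cluster itself (which gives you the control plane) plus one node group with its min/desired/max sizes. The field names follow eksctl's `ClusterConfig` format; the cluster name, region, instance type and sizes are hypothetical. It only builds and prints the structure and does not call AWS.

```python
import json


def node_group(name: str, instance_type: str,
               min_size: int, desired: int, max_size: int) -> dict:
    """Describe one node group, which is backed by an auto scaling group."""
    # An auto scaling group only accepts a desired capacity inside [min, max].
    if not 0 <= min_size <= desired <= max_size:
        raise ValueError(
            f"need 0 <= min ({min_size}) <= desired ({desired}) <= max ({max_size})"
        )
    return {
        "name": name,
        "instanceType": instance_type,
        "minSize": min_size,
        "desiredCapacity": desired,
        "maxSize": max_size,
    }


def cluster_config(cluster_name: str, region: str, groups: list) -> dict:
    """Assemble an eksctl-style ClusterConfig: the cluster plus its node groups."""
    return {
        "apiVersion": "eksctl.io/v1alpha5",
        "kind": "ClusterConfig",
        "metadata": {"name": cluster_name, "region": region},
        "managedNodeGroups": groups,
    }


config = cluster_config(
    "demo-cluster",  # hypothetical cluster name
    "eu-west-1",     # hypothetical region
    [node_group("workers", "m5.large", min_size=2, desired=3, max_size=5)],
)
print(json.dumps(config, indent=2))
```

eksctl normally reads this structure from a YAML file (`eksctl create cluster -f cluster.yaml`). Replacing the `managedNodeGroups` key with `nodeGroups` would request a self-managed group instead, which is the distinction the next section covers.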

Of course, more resources are created (IAM roles, for example), but for simplicity we only mention two here: the control plane and the “node group”.

Managed vs. Self-Managed Node Groups

Managed

With Amazon EKS managed node groups, you don’t need to separately provision or register the Amazon EC2 instances that provide compute capacity to run your Kubernetes applications. You can create, update, or terminate nodes for your cluster with a single operation. Nodes run using the latest Amazon EKS-optimized AMIs in your AWS account while node updates and terminations gracefully drain nodes to ensure that your applications stay available.

While the AWS documentation tells you a lot, it also manages to tell you nothing. In essence, a “managed” node group means AWS creates the auto scaling group and manages it for you: for example, it decides which AMI to use and which cloud-init script to put into it, so that when an EC2 instance boots it knows how and where to join the cluster.
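One way to see what “managed” buys you is to look at what you hand AWS when creating one. The sketch below builds the keyword arguments for boto3's `eks.create_nodegroup` call (parameter names as in the boto3 EKS API; the role ARN, subnet IDs and names are hypothetical). Note what is missing in this basic form: there is no AMI ID and no user data, only an AMI family (`amiType`) and the scaling numbers. AWS fills in the rest.

```python
def managed_nodegroup_request(cluster: str, name: str, node_role_arn: str,
                              subnets: list, instance_type: str,
                              min_size: int, desired: int, max_size: int) -> dict:
    """Keyword arguments for boto3's eks.create_nodegroup (a managed node group)."""
    return {
        "clusterName": cluster,
        "nodegroupName": name,
        "nodeRole": node_role_arn,
        "subnets": subnets,
        "instanceTypes": [instance_type],
        "scalingConfig": {"minSize": min_size, "desiredSize": desired, "maxSize": max_size},
        # An AMI family, not an AMI ID: AWS resolves the concrete EKS-optimized image
        "amiType": "AL2_x86_64",
    }


request = managed_nodegroup_request(
    cluster="demo-cluster",                                   # hypothetical
    name="workers",                                           # hypothetical
    node_role_arn="arn:aws:iam::123456789012:role/demo-node", # hypothetical
    subnets=["subnet-aaaa1111", "subnet-bbbb2222"],           # hypothetical
    instance_type="m5.large",
    min_size=2, desired=3, max_size=5,
)
# With boto3 installed and credentials configured, this would send it:
# boto3.client("eks").create_nodegroup(**request)
print(request)
```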

A managed node group is the simplest way to get started, but it has a few limitations at the time of writing. For example, if you work in a large enterprise environment, chances are that all egress traffic goes through an internal proxy instead of an internet gateway or a NAT gateway. Managed node groups don’t let you configure a proxy, nor can you use the EKS API as a private endpoint that nodes reach without an internet gateway or NAT. As another example, if you want to use custom CNI networking, you also need to set a few parameters in the cloud-init script, which managed node groups don’t let you do.
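One of the settings custom CNI networking affects is the kubelet's maximum pod count. With the Amazon VPC CNI (and without prefix delegation), each pod takes a secondary IP from an ENI, so the commonly used formula is ENIs × (IPv4 addresses per ENI − 1) + 2, where the + 2 covers host-network pods such as `aws-node` and `kube-proxy`. With custom networking the primary ENI no longer hands out pod IPs, so one ENI drops out of the count. The sketch below assumes an m5.large has 3 ENIs with 10 IPv4 addresses each; check the limits for your own instance type.

```python
def max_pods(enis: int, ips_per_eni: int, custom_networking: bool = False) -> int:
    """Max pods per node for the VPC CNI without prefix delegation."""
    # With custom networking, the primary ENI is not used for pod IPs.
    usable_enis = enis - 1 if custom_networking else enis
    # Each ENI keeps one address for itself; +2 for host-network pods
    # (e.g. aws-node, kube-proxy) that need no secondary IP.
    return usable_enis * (ips_per_eni - 1) + 2


# Assumed limits for m5.large: 3 ENIs, 10 IPv4 addresses per ENI
print(max_pods(3, 10))                          # → 29 (default networking)
print(max_pods(3, 10, custom_networking=True))  # → 20 (custom networking)
```

That second number is the value you would pass to the kubelet through the node's bootstrap script, which is exactly the kind of cloud-init setting described above.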

Self-Managed

By contrast, a self-managed node group, as the name suggests, is managed entirely by you, which gives you full control. That control opens up more possibilities (such as the proxy and custom CNI cases mentioned above), but you also have to take care of more details: preparing the cloud-init script so that each EC2 instance knows which cluster to join and how many pods it may run, tagging the auto scaling groups and subnets, and so on. This article does not focus on how self-managed node groups work or how to create one, so we won’t dive into the details here; the point is simply that, with EKS, there are two types of worker node groups.
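For a self-managed group, the cloud-init user data and the tags are yours to write. Here is a minimal sketch assuming the EKS-optimized Amazon Linux 2 AMI, whose `/etc/eks/bootstrap.sh` script takes the cluster name plus options such as `--apiserver-endpoint`, `--b64-cluster-ca`, `--use-max-pods` and `--kubelet-extra-args`. The cluster name, endpoint and CA are hypothetical placeholders, and the tags show common Kubernetes and Cluster Autoscaler conventions rather than an exhaustive list. Newer Amazon Linux 2023 AMIs use a different bootstrap mechanism, so treat this as AL2-specific.

```python
import base64
import shlex


def bootstrap_user_data(cluster: str, endpoint: str, b64_ca: str, max_pods: int) -> str:
    """Shell user data telling an EKS-optimized AL2 node where to join and how many pods to allow."""
    args = [
        "/etc/eks/bootstrap.sh", cluster,
        "--apiserver-endpoint", endpoint,
        "--b64-cluster-ca", b64_ca,
        # Disable the AMI's built-in max-pods lookup and pass our own value to the kubelet
        "--use-max-pods", "false",
        "--kubelet-extra-args", f"--max-pods={max_pods}",
    ]
    return "#!/bin/bash\nset -o xtrace\n" + " ".join(shlex.quote(a) for a in args) + "\n"


def self_managed_tags(cluster: str) -> dict:
    """Typical tags for a self-managed setup (conventional names, not exhaustive)."""
    return {
        "asg": {
            # Propagated to instances: marks them as belonging to this cluster
            f"kubernetes.io/cluster/{cluster}": "owned",
            # Lets Cluster Autoscaler auto-discover this group
            "k8s.io/cluster-autoscaler/enabled": "true",
            f"k8s.io/cluster-autoscaler/{cluster}": "owned",
        },
        "subnet": {
            f"kubernetes.io/cluster/{cluster}": "shared",
            # Marks private subnets for internal load balancers
            "kubernetes.io/role/internal-elb": "1",
        },
    }


user_data = bootstrap_user_data(
    "demo-cluster",                                     # hypothetical cluster name
    "https://EXAMPLE.gr7.eu-west-1.eks.amazonaws.com",  # hypothetical API endpoint
    "BASE64-CA-PLACEHOLDER",                            # hypothetical CA bundle
    max_pods=20,
)
# Launch templates expect the user data base64-encoded
encoded = base64.b64encode(user_data.encode()).decode()
print(user_data)
print(self_managed_tags("demo-cluster"))
```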

#kubernetes #devops #autoscaling #aws #cloud