This blog post covers the following topics:

- Why pre-process a given dataset.

- Package dependencies and installation.

- Package libraries and utilities.

- Selected usage examples.

**The full package usage is elaborated in this Jupyter Notebook.**

Why pre-process a given dataset

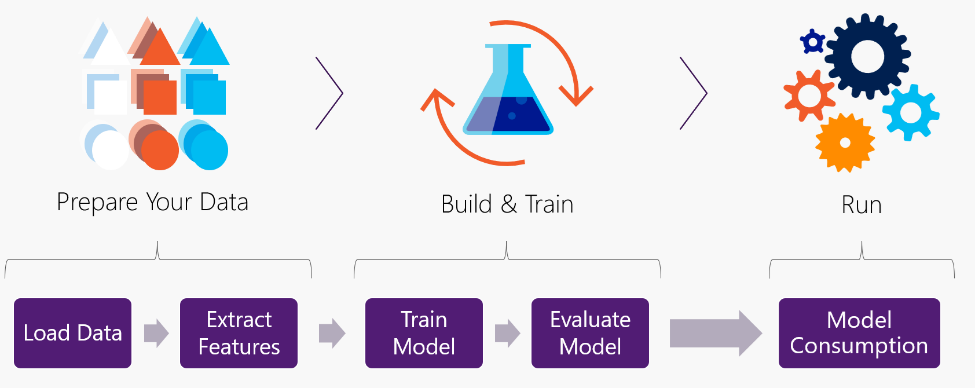

**Exploring and pre-processing the dataset is probably the most important step in building an efficient Machine Learning model.** Raw data contains noise, missing values, inconsistent feature representations, and many other issues. To build an accurate model that addresses the business problem and produces high-precision forecasts, you have to clean and transform that data first.
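
To make "inconsistent feature representations" concrete: the same yes/no answer can arrive as `Yes`, `no`, or ` Y `, and a missing value as an empty string or `n/a`. The sketch below is a hypothetical illustration that uses only Python's standard library, not the package this post describes. The field names, records, and token sets are made up. It maps every spelling onto one typed representation and turns missing-value tokens into `None`.

```python
# Hypothetical raw records: field names, values, and token sets are illustrative only.
RAW_ROWS = [
    {"age": "34", "churned": "Yes"},
    {"age": "", "churned": "no"},
    {"age": "41", "churned": " Y "},
    {"age": "n/a", "churned": "N"},
]

MISSING_TOKENS = {"", "na", "n/a", "null", "none"}
TRUE_TOKENS = {"yes", "y", "true", "1"}
FALSE_TOKENS = {"no", "n", "false", "0"}


def parse_age(value):
    """Return the age as an int, or None when the raw value is a missing-value token."""
    cleaned = value.strip().lower()
    if cleaned in MISSING_TOKENS:
        return None
    return int(cleaned)


def parse_flag(value):
    """Map the many spellings of yes/no onto one boolean; missing tokens become None."""
    cleaned = value.strip().lower()
    if cleaned in TRUE_TOKENS:
        return True
    if cleaned in FALSE_TOKENS:
        return False
    if cleaned in MISSING_TOKENS:
        return None
    raise ValueError(f"Unrecognized flag value: {value!r}")


def normalize(rows):
    """Convert raw string records into consistently typed records."""
    return [{"age": parse_age(r["age"]), "churned": parse_flag(r["churned"])} for r in rows]


clean = normalize(RAW_ROWS)
print(clean)
# [{'age': 34, 'churned': True}, {'age': None, 'churned': False},
#  {'age': 41, 'churned': True}, {'age': None, 'churned': False}]
```

Raising an error on unrecognized values, rather than silently guessing, means new spellings surface during exploration instead of leaking into the model.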

There are several steps to take. First, understand the business problem at hand and the purpose of the model. Second, **explore the dataset (EDA):** examine the distribution and correlation of features, missing values, and so on. Next, **process the dataset:** fill missing values, drop outliers, handle imbalanced features, and so on. Finally, plot insights from the dataset.
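
The EDA and processing steps above can be sketched as a few small functions. This is a hypothetical illustration, not the package this post introduces. It uses only Python's standard library (`statistics`, `random`) on a made-up dataset of `(feature_x, feature_y, label)` tuples. Median imputation, the 1.5 × IQR outlier rule, and naive random oversampling are assumptions standing in for whatever strategy a given business problem calls for. Plotting is left out to keep the example dependency-free.

```python
import random
import statistics

# Hypothetical toy dataset: (feature_x, feature_y, label); None marks a missing value.
ROWS = [
    (1.0, 2.1, 0), (2.0, 3.9, 0), (None, 6.2, 0), (4.0, 8.1, 0),
    (5.0, 9.8, 0), (6.0, 12.2, 1), (7.0, None, 0), (100.0, 14.0, 0),
]


def missing_counts(rows, n_cols):
    """EDA: number of missing (None) values in each column."""
    return [sum(1 for r in rows if r[i] is None) for i in range(n_cols)]


def pearson(xs, ys):
    """EDA: Pearson correlation coefficient of two equally long numeric lists."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def class_balance(rows, label_col):
    """EDA: share of rows per label, to spot an imbalanced target."""
    counts = {}
    for r in rows:
        counts[r[label_col]] = counts.get(r[label_col], 0) + 1
    return {label: c / len(rows) for label, c in sorted(counts.items())}


def fill_with_median(rows, col):
    """Processing: replace missing values in one column with that column's median."""
    median = statistics.median(r[col] for r in rows if r[col] is not None)
    return [r[:col] + (median,) + r[col + 1:] if r[col] is None else r for r in rows]


def drop_iqr_outliers(rows, col, k=1.5):
    """Processing: drop rows whose value falls outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles([r[col] for r in rows], n=4)
    iqr = q3 - q1
    return [r for r in rows if q1 - k * iqr <= r[col] <= q3 + k * iqr]


def oversample_minority(rows, label_col, seed=0):
    """Processing: naive random oversampling until every label is equally frequent."""
    rng = random.Random(seed)
    by_label = {}
    for r in rows:
        by_label.setdefault(r[label_col], []).append(r)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced


print(missing_counts(ROWS, 3))  # [1, 1, 0]
filled = fill_with_median(fill_with_median(ROWS, 0), 1)
cleaned = drop_iqr_outliers(filled, 0)  # removes the row with x = 100.0
print(len(cleaned))  # 7
print(pearson([r[0] for r in cleaned], [r[1] for r in cleaned]))
print(class_balance(cleaned, 2))  # roughly {0: 0.86, 1: 0.14}
balanced = oversample_minority(cleaned, 2)
print(class_balance(balanced, 2))  # {0: 0.5, 1: 0.5}
```

In a real project these steps would more commonly run on a pandas DataFrame, but the sequence stays the same: count, impute, filter, rebalance. Oversampling should be applied only to the training split, so that duplicated rows do not leak into the evaluation data.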

“Big data isn’t about bits, it’s about talent.” (Douglas Merrill)

What differentiates a brilliant Data Scientist from a good one is the ability to process the dataset based on the business problem and the features at hand. Never underestimate the importance of EDA and data pre-processing. **The data is the foundation of the model,** and the model's performance depends heavily on the data you provide.

To read more about EDA and data pre-processing, I highly recommend the blog post Data Preprocessing Concepts by Pranjal Pandey.
