A beginner’s guide to building a Naive Bayes classifier (a simple classification model) from scratch using Python.

In machine learning, we can use probability to make predictions. Perhaps the most widely used example is the Naive Bayes algorithm. Not only is it straightforward to understand, but it also achieves surprisingly good results on a wide range of problems.

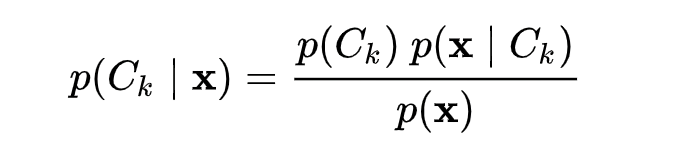

The Naive Bayes algorithm is a classification technique based on Bayes’ Theorem. It assumes that, within a class, the presence of one feature is unrelated to the presence of any other feature; this independence assumption is what makes it “naive”. As shown in the following formula, the algorithm relies on the posterior probability of the class given a predictor:

P(c|x) = P(x|c) · P(c) / P(x)

where:

- P(c|x) is the posterior probability of the class given the predictor

- P(x|c) is the probability of the predictor given the class, also known as the likelihood

- P(c) is the prior probability of the class

- P(x) is the prior probability of the predictor

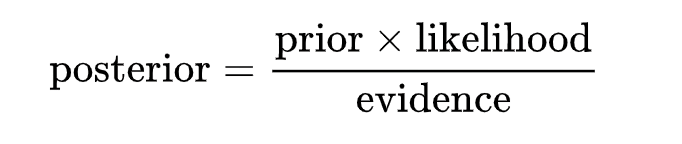
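To make these four terms concrete, here is a small numeric sketch of Bayes’ Theorem for a two-class problem. The spam/word scenario and all of the probabilities are hypothetical values chosen only for illustration, and the `posterior` helper is not part of any library.

```python
def posterior(likelihood: float, prior: float, evidence: float) -> float:
    """Bayes' Theorem: P(c|x) = P(x|c) * P(c) / P(x)."""
    return likelihood * prior / evidence


# Hypothetical numbers, assumed purely for illustration.
p_spam = 0.2              # P(c): prior probability of the class "spam"
p_word_given_spam = 0.6   # P(x|c): likelihood of the word "offer" in spam
p_word_given_ham = 0.05   # P(x|not c): likelihood of the word in non-spam

# P(x): total probability of seeing the word, summed over both classes.
p_word = p_word_given_spam * p_spam + p_word_given_ham * (1 - p_spam)

# P(c|x): probability that a message is spam given that it contains the word.
p_spam_given_word = posterior(p_word_given_spam, p_spam, p_word)
print(round(p_spam_given_word, 3))  # prints 0.75
```

Even though only 20% of messages are assumed to be spam, seeing a word that is much more likely under the spam class raises the posterior to 75%.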

Or, in plain English, the Naive Bayes classifier equation can be written as:

Posterior = (Likelihood × Class prior probability) / Predictor prior probability

The good news is that a Naive Bayes classifier is easy to implement and performs well, even with a small training data set. It is also fast, which makes it a good first option when you need to predict the class of new data.

Scikit-learn offers several Naive Bayes variants for different types of problems. One of them is Gaussian Naive Bayes, which is used when the features are continuous variables and assumes that the features follow a Gaussian distribution within each class.

Let’s dig deeper and build our own Naive Bayes classifier from scratch using Python.
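As a rough preview of what building it from scratch can look like, here is a minimal sketch of a Gaussian Naive Bayes classifier in pure Python. It is an illustration under stated assumptions, not a reference implementation: the function names (`fit`, `predict`, `gaussian_log_pdf`), the toy data, and the small variance floor are all made up for this example. It works in log space and drops P(x), since P(x) is the same for every class and does not change which class scores highest.

```python
import math
from collections import defaultdict


def gaussian_log_pdf(x, mean, var):
    """Log of the Gaussian density, used as the per-feature log-likelihood."""
    return -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)


def fit(X, y):
    """Estimate a prior and per-feature (mean, variance) for each class."""
    rows_by_class = defaultdict(list)
    for row, label in zip(X, y):
        rows_by_class[label].append(row)

    model = {}
    for label, rows in rows_by_class.items():
        stats = []
        for column in zip(*rows):  # iterate over features
            mean = sum(column) / len(column)
            # Small floor (an assumption) avoids division by zero variance.
            var = sum((v - mean) ** 2 for v in column) / len(column) + 1e-9
            stats.append((mean, var))
        prior = len(rows) / len(X)
        model[label] = (prior, stats)
    return model


def predict(model, row):
    """Return the class with the highest log P(c) + sum of log P(x_i|c)."""
    best_label, best_score = None, -math.inf
    for label, (prior, stats) in model.items():
        score = math.log(prior)
        # Naive assumption: features are independent given the class,
        # so their log-likelihoods simply add up.
        for x, (mean, var) in zip(row, stats):
            score += gaussian_log_pdf(x, mean, var)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Toy data, invented for illustration: two well-separated classes.
X = [[1.0, 2.1], [1.2, 1.9], [0.9, 2.0], [3.0, 4.1], [3.2, 3.9], [2.9, 4.0]]
y = ["a", "a", "a", "b", "b", "b"]

model = fit(X, y)
print(predict(model, [1.1, 2.0]))  # prints a
print(predict(model, [3.1, 4.0]))  # prints b
```

Summing log-probabilities instead of multiplying raw probabilities is a common design choice here, because products of many small densities can underflow to zero.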
