Imagine you have an image, say, 4K (3840×2160 pixels), that was resampled to a smaller resolution, say, Full HD (1920×1080). Smaller images take less storage and are faster to process and to send over the internet. However, when it comes to displaying this image on your 4K screen, you will likely prefer the original 4K image.

You may indeed interpolate the Full HD picture to 4K resolution using a standard interpolation method (bilinear, bicubic, etc.). This is what happens anyway when the picture gets stretched to fill the entire screen. It definitely does not produce the nicest result, although at Full HD/4K scale you may not perceive the difference unless your screen is really big or you zoom in. Still, if you have a 4K or 8K screen, you likely care about visual quality. So one option for upscaling the image is a more elaborate approach that renders a nicer picture at the cost of increased processing time. This article is about such an approach.

The problem of reconstructing a high-resolution (HR) image from a low-resolution (LR) one with no other inputs is ill-posed: the downsampling process that produces the LR image from the HR image typically entails a loss of information, so for a given LR image and a given resampling method there are many HR images that lead to the same LR output. Standard interpolation methods reconstruct one possible HR counterpart, not necessarily the most natural-looking one. To reconstruct a visually better HR image one may need to introduce a prior, and this is what neural networks are good at.
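The many-to-one nature of downsampling can be illustrated with a tiny sketch. It assumes a plain 2x2 box-filter (block averaging) downsampler, which is only one of many possible resampling methods, and the two 4x4 "HR" images are made-up toy data, not taken from this study.

```python
import numpy as np

def downsample_2x(hr):
    """Average every 2x2 block (box filter; an assumed downsampling method)."""
    h, w = hr.shape
    return hr.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

# Two clearly different toy HR images...
hr_a = np.array([[0.0, 1.0, 0.5, 0.5],
                 [1.0, 0.0, 0.5, 0.5],
                 [0.2, 0.2, 0.0, 0.0],
                 [0.2, 0.2, 1.0, 1.0]])
hr_b = np.array([[0.5, 0.5, 1.0, 0.0],
                 [0.5, 0.5, 0.0, 1.0],
                 [0.4, 0.0, 0.5, 0.5],
                 [0.0, 0.4, 0.5, 0.5]])

# ...that collapse to the same 2x2 LR image.
lr_a = downsample_2x(hr_a)
lr_b = downsample_2x(hr_b)
same_lr = np.allclose(lr_a, lr_b)
print(lr_a)
print("same LR image:", same_lr)
```

Given only the LR image, nothing tells the two HR candidates apart, which is why some prior about what natural images look like is needed.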

The ill-posedness, together with easily accessible data and the somewhat emotional appeal of clearer pictures for people purchasing 8K screens, has led to thousands of papers, each claiming to be the best.

This article discusses

- a small, easily trainable, fully convolutional architecture that renders 2x higher resolution images, heavily inspired by the ESPCN network by Twitter engineers,

- a way to implement the inference solely with OpenGL ES 2.0-compliant shaders, with no CPU compute at all. This makes it possible to run the model on laptops, Android smartphones and Raspberry Pi, all on the GPU.

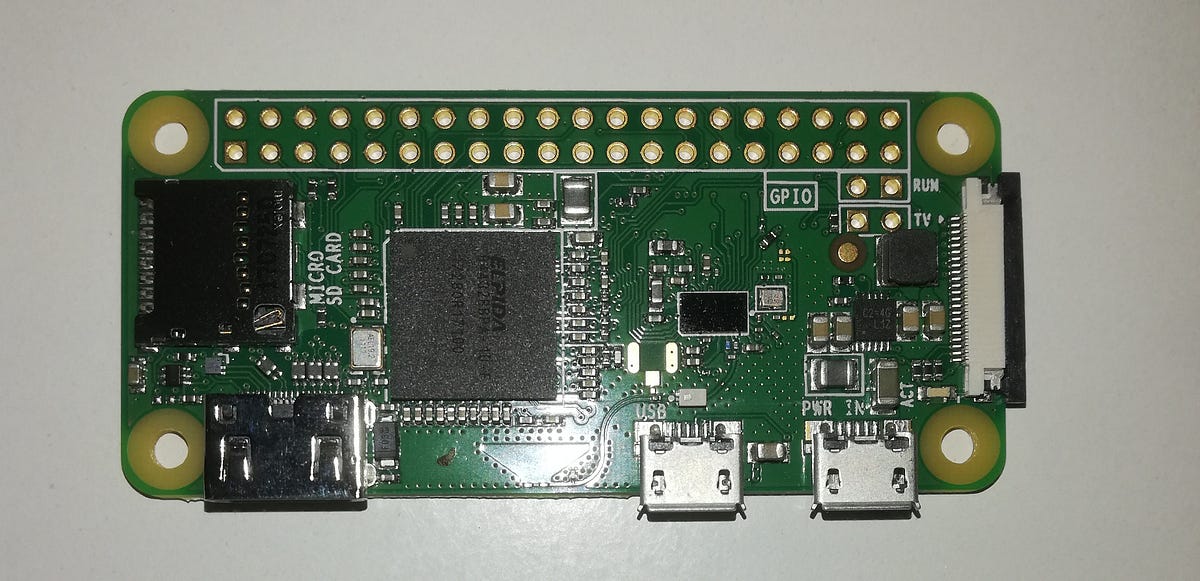

I do not have an 8K screen, but I do have a Raspberry Pi. In what follows I do not try to beat state-of-the-art results, so there will be no high PSNR numbers. Instead, the focus is on making things practical. Running the inference on the Raspberry Pi GPU was a non-negotiable requirement I set for myself in this study, so the main outcome is the use of OpenGL to run neural network inference on a wide range of devices. The Raspberry Pi GPU is a nice baseline: thanks to OpenGL, if the thing runs on a Pi, it runs on pretty much any decent GPU.

There are images, PSNR and time measurements, and some code below. Let’s get started.

Architecture

The model here is largely a reincarnation of ESPCN. Using OpenGL ES 2.0 as the inference back-end, and its Raspberry Pi implementation in particular, puts some constraints on the architecture, making the network somewhat unusual by modern ML standards. Let us accept it as it is for the moment and discuss this later.

The main differences from ESPCN are:

- the use of grouped convolutions followed by pointwise (1x1) convolutions,

- the activation function is a bounded ReLU (BReLU) in the range [0, 1]. It is applied after every convolution.
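Both differences can be sketched in a few lines of NumPy. The bounded ReLU is just a clamp to [0, 1]. The second part counts weights to show why a grouped 3x3 convolution followed by a pointwise 1x1 convolution is cheaper than a dense 3x3 one. The layer sizes (32 channels, 8 groups) are hypothetical values chosen for illustration and are not the sizes used by the model in this article.

```python
import numpy as np

def brelu(x):
    """Bounded ReLU: clamp activations to the range [0, 1]."""
    return np.clip(x, 0.0, 1.0)

def conv_weight_count(c_in, c_out, k, groups=1):
    """Number of weights (biases excluded) in a k x k convolution.

    With `groups` > 1 each output channel only sees c_in / groups inputs.
    """
    assert c_in % groups == 0 and c_out % groups == 0
    return (c_in // groups) * c_out * k * k

# Hypothetical sizes, for illustration only.
channels, groups = 32, 8

dense = conv_weight_count(channels, channels, 3)
grouped_plus_pointwise = (
    conv_weight_count(channels, channels, 3, groups)  # grouped 3x3
    + conv_weight_count(channels, channels, 1)        # pointwise 1x1 mixes the groups
)

print(brelu(np.array([-0.5, 0.3, 1.7])))
print(dense, grouped_plus_pointwise)
```

The pointwise convolution is what lets information flow between groups, since a grouped convolution alone keeps the groups isolated from each other.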

Here is the architecture. The main ingredients are 5x5, grouped 3x3, 1x1 convolutions and BReLU activations.

The model operates on a 9x9 pixel neighborhood around a given pixel in the LR image and produces 2x2 output pixels, as shown below. We deal with grayscale images here: training is done on the luminance (Y) channel, while inference may be run on the R, G and B channels separately, or on Y only with the chroma components upscaled by a standard interpolation. The latter is quite common. The rationale is that image and video encoders commonly store chroma at a reduced resolution by applying an additional downscaling to it, and this works because our eyes are not very sensitive to chroma resolution.
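Here is a minimal sketch of these two ideas, under stated assumptions. `depth_to_space_2x` turns four values per LR pixel into a 2x2 block of HR pixels. The channel-to-position ordering is an assumption and the actual model may order them differently. `upscale_rgb_2x` shows the Y-only route, using full-range BT.601 coefficients for the color conversion and nearest-neighbor repetition as a stand-in for the "standard interpolation" of chroma. The `upscale_y` argument is a hypothetical placeholder for the network.

```python
import numpy as np

def depth_to_space_2x(features):
    """Rearrange a (4, H, W) array into a (2H, 2W) image.

    Channel c lands at offset (c // 2, c % 2) inside each 2x2 block
    (an assumed ordering).
    """
    c, h, w = features.shape
    assert c == 4
    return features.reshape(2, 2, h, w).transpose(2, 0, 3, 1).reshape(2 * h, 2 * w)

def rgb_to_ycbcr(rgb):
    """Full-range BT.601 conversion for float RGB in [0, 1]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, 0.5 + (b - y) / 1.772, 0.5 + (r - y) / 1.402

def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr."""
    r = y + 1.402 * (cr - 0.5)
    b = y + 1.772 * (cb - 0.5)
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return np.stack([r, g, b], axis=-1)

def upscale_nearest_2x(plane):
    """Nearest-neighbor 2x upscaling (stand-in for bilinear/bicubic)."""
    return plane.repeat(2, axis=0).repeat(2, axis=1)

def upscale_rgb_2x(rgb, upscale_y):
    """Upscale Y with `upscale_y` (hypothetical model), chroma by interpolation."""
    y, cb, cr = rgb_to_ycbcr(rgb)
    return ycbcr_to_rgb(upscale_y(y), upscale_nearest_2x(cb), upscale_nearest_2x(cr))

# One LR pixel with 4 output channels becomes one 2x2 HR block.
block = depth_to_space_2x(np.arange(4.0).reshape(4, 1, 1))
print(block)

# Pipeline check with a trivial stand-in for the network.
rgb_lr = np.random.default_rng(0).random((3, 4, 3))
rgb_hr = upscale_rgb_2x(rgb_lr, upscale_y=upscale_nearest_2x)
print(rgb_hr.shape)
```

In a real setup, `upscale_y` would run the network on the Y plane and apply the depth-to-space step to its four-channel output.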
