I have received a number of requests for how to implement leave-one-person-out cross validation with random forests. I refined my methods into a function and published it on the Digital Biomarker Discovery Pipeline (DBDP). If you want to implement this cross validation in your own work with random forests, please visit the repository on the DBDP GitHub. The ‘how-to’ of using the function is well documented, and the function can be added to many projects with a single line.

If you want to learn how to implement leave-one-person-out cross validation in your own work (perhaps beyond random forests), please read on. In this tutorial, I am going to describe a process for implementing leave-one-person-out cross validation in Python.

What is leave-one-person-out cross validation?

When you build a machine learning model, it is standard practice to validate your methodology by setting aside a portion of your data as a test set. You may have previously used a train/test split in which you trained your model on 80% of your data and held the remaining 20% aside to test it (what we would refer to as an 80:20 split).

Leave-one-person-out cross validation (LOOCV) is a cross validation approach that utilizes each individual person as a “test” set. It is a specific type of k-fold cross validation, where the number of folds, k, is equal to the number of participants in your dataset.

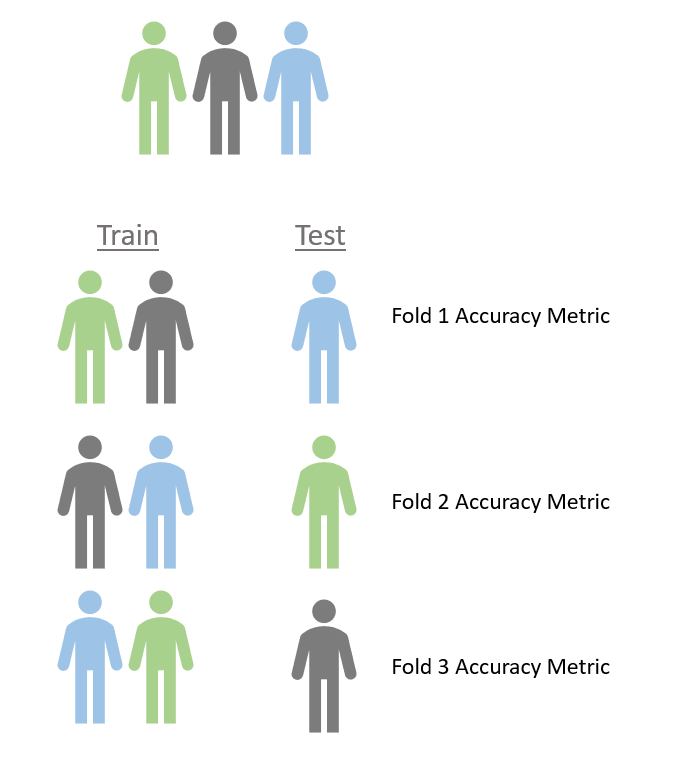

As an example, let’s say you have three people in your dataset. You want to validate your model using LOOCV. As shown in the figure above, you would complete 3 folds, or iterations, of your model. Each time, the model would be trained on two people and tested on the “left out” person. You would score your model using an evaluation metric to assess its accuracy or error.
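To make the three-person example concrete, here is a minimal sketch in plain Python of how the folds could be generated. The `person_ids` list and the `leave_one_person_out` helper are hypothetical names made up for illustration; they are not the DBDP function mentioned earlier.

```python
# Hypothetical toy data: one entry per row of the dataset,
# giving the person that row was recorded from.
person_ids = ["A", "A", "B", "B", "B", "C"]


def leave_one_person_out(person_ids):
    """Yield (held_out_person, train_indices, test_indices), one fold per person."""
    for held_out in sorted(set(person_ids)):
        # Train on every row that does NOT belong to the held-out person.
        train_idx = [i for i, p in enumerate(person_ids) if p != held_out]
        # Test on every row that DOES belong to the held-out person.
        test_idx = [i for i, p in enumerate(person_ids) if p == held_out]
        yield held_out, train_idx, test_idx


folds = list(leave_one_person_out(person_ids))
for held_out, train_idx, test_idx in folds:
    print(f"test on {held_out}: train rows {train_idx}, test rows {test_idx}")
```

With three people, this produces three folds, and no person ever appears in both the training rows and the test rows of the same fold.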

After building a model for each fold and testing it on that fold’s left-out person, you will have three evaluation metrics, one from each fold. To assess the accuracy of the model overall, you take the mean +/- standard deviation of these per-fold metrics.
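The whole procedure, including the mean +/- standard deviation summary, can be sketched with scikit-learn’s `LeaveOneGroupOut` splitter. This is only an illustrative sketch under stated assumptions: the data is synthetic, the classifier settings are arbitrary, accuracy is chosen as the metric, and none of it is taken from the DBDP function.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import LeaveOneGroupOut

# Synthetic, illustrative data only: 3 people, 20 rows each, 4 features.
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 4))
y = (X[:, 0] > 0).astype(int)      # label depends on the first feature
groups = np.repeat([1, 2, 3], 20)  # person ID for every row

fold_scores = []
for train_idx, test_idx in LeaveOneGroupOut().split(X, y, groups=groups):
    # Fit a fresh model on all people except the one left out.
    model = RandomForestClassifier(n_estimators=50, random_state=0)
    model.fit(X[train_idx], y[train_idx])
    # Score it on the left-out person only.
    predictions = model.predict(X[test_idx])
    fold_scores.append(accuracy_score(y[test_idx], predictions))

mean_score = np.mean(fold_scores)
std_score = np.std(fold_scores)  # population standard deviation (ddof=0)
print(f"accuracy: {mean_score:.2f} +/- {std_score:.2f} over {len(fold_scores)} folds")
```

For a regression problem, the same loop should work with `RandomForestRegressor` and an error metric such as `mean_absolute_error` in place of the classifier and `accuracy_score`.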
