Introduction

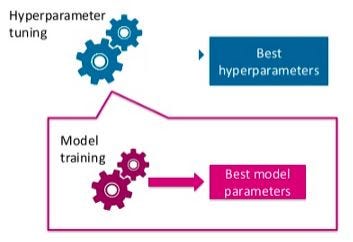

Hyperparameter optimization is the process of choosing the best set of hyperparameters for a learning algorithm. A well-chosen set of hyperparameters can have a large impact on the performance of a machine learning model. It is one of the most time-consuming, yet crucial, steps in a machine learning training pipeline.

A machine learning model has two types of parameters:

- Model parameters

- Model hyperparameters

Model parameters vs Model hyperparameters (source)

**_Model parameters_** are learned from the data during the training phase of a model or classifier. For example:

- coefficients in logistic regression or linear regression

- weights in an artificial neural network
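To make "learned during training" concrete, here is a minimal pure-Python sketch that fits a one-feature linear regression by ordinary least squares. The function name and the toy data (generated from y = 2x + 1) are illustrative assumptions; the point is that the slope and intercept, i.e. the model parameters, are computed from the data rather than supplied by the user.

```python
def fit_simple_linear_regression(xs, ys):
    # Ordinary least squares for y = slope * x + intercept (one feature).
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_simple_linear_regression(xs, ys)
print(slope, intercept)  # → 2.0 1.0
```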

**_Model hyperparameters_** are set by the user before the training phase. For example:

- ‘C’ (the inverse of regularization strength in scikit-learn), ‘penalty’ and ‘solver’ in logistic regression

- ‘learning rate’, ‘batch size’, ‘number of hidden layers’ etc. in an artificial neural network
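The distinction is easiest to see inside a single training loop. The sketch below is our own illustration, not tied to any library: it fits a linear model by gradient descent, where `learning_rate` and `n_epochs` are hyperparameters fixed before training, and `w` and `b` are model parameters updated from the data. The function name and the toy data are hypothetical.

```python
def train_linear_model(xs, ys, learning_rate, n_epochs):
    # learning_rate and n_epochs are hyperparameters: chosen before training.
    # w and b are model parameters: learned from the data.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

good = train_linear_model(xs, ys, learning_rate=0.05, n_epochs=2000)
bad = train_linear_model(xs, ys, learning_rate=0.5, n_epochs=50)
print(good)  # close to (2.0, 1.0)
print(bad)   # very large values: the updates blow up
```

With this toy data, a learning rate of 0.05 converges close to w = 2, b = 1, while 0.5 is too large and the parameter updates grow without bound. The same code and data give a good or a useless model depending only on the hyperparameters.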

The choice of machine learning model depends on the dataset and the task at hand, e.g. regression or classification. Each model has its own set of hyperparameters, and the task of finding the best combination of their values is known as hyperparameter optimization.
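Stated a little more formally (the notation here is ours, not taken from a specific reference): let θ denote the model parameters, λ a hyperparameter configuration drawn from a search space Λ, and L_train and L_val the training and validation losses. Hyperparameter optimization is then a nested problem: the inner minimization is ordinary training, and the outer one picks the configuration whose trained model scores best on held-out data.

$$
\theta^{*}(\lambda) = \arg\min_{\theta} \; \mathcal{L}_{\text{train}}(\theta;\, \lambda),
\qquad
\lambda^{*} = \arg\min_{\lambda \in \Lambda} \; \mathcal{L}_{\text{val}}\big(\theta^{*}(\lambda)\big)
$$

Every evaluation of the outer objective requires a full training run, which is why hyperparameter optimization is so time-consuming.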

Various methods are available for solving the hyperparameter optimization problem, for example:

- Grid Search

- Random Search

- Optuna

- Hyperopt
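To see how the first two methods differ, here is a minimal pure-Python sketch that spends the same budget of 20 evaluations on each. `validation_loss` is a hypothetical stand-in for training a model and scoring it on a validation set, and the ranges for `learning_rate` and `max_depth` are illustrative assumptions. Grid search evaluates every combination from fixed lists of values; random search samples each hyperparameter independently, here drawing the learning rate on a log scale.

```python
import itertools
import random


def validation_loss(learning_rate: float, max_depth: int) -> float:
    # Hypothetical stand-in for "train a model, return its validation loss";
    # minimized at learning_rate=0.1, max_depth=6.
    return (learning_rate - 0.1) ** 2 + 0.01 * (max_depth - 6) ** 2


def grid_search(lr_values, depth_values):
    # Evaluate every combination in the Cartesian product of the grids.
    best = None
    for lr, depth in itertools.product(lr_values, depth_values):
        loss = validation_loss(lr, depth)
        if best is None or loss < best[0]:
            best = (loss, {"learning_rate": lr, "max_depth": depth})
    return best


def random_search(n_trials, seed=0):
    # Sample each hyperparameter independently from its range.
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-3, 0)  # log-uniform in [0.001, 1]
        depth = rng.randint(2, 10)     # integer in [2, 10], inclusive
        loss = validation_loss(lr, depth)
        if best is None or loss < best[0]:
            best = (loss, {"learning_rate": lr, "max_depth": depth})
    return best


grid_best = grid_search([0.001, 0.01, 0.1, 1.0], [2, 4, 6, 8, 10])  # 4 x 5 = 20 evaluations
random_best = random_search(n_trials=20)
print(grid_best)  # → (0.0, {'learning_rate': 0.1, 'max_depth': 6})
print(random_best)
```

Grid search only ever tries the values on its grid, so it finds the optimum here only because 0.1 and 6 happen to be grid points; random search can land anywhere in between. Libraries such as Optuna and Hyperopt go a step further: their samplers can use the results of earlier trials to decide where to sample next.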

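In this post, we will focus on the Optuna library, whose default search strategy, the Tree-structured Parzen Estimator (TPE), uses the results of previous trials to choose the next hyperparameters to evaluate. This often finds good configurations in fewer trials than grid or random search.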
