And I thought that I'd explain just what was going on and how I'd managed to speed up page render time from almost 3 seconds down to 700 milliseconds without (and this is the key part) needing to use any kind of caching.

One of the things you need to know going into this is that I'm not great at front end development - I'm about average at it, I'd say. Which is why what I eventually found out was a bit of a eureka moment for me. But it also makes perfect sense.

The Site

First, you need to understand the original state of the site that I was attempting to optimise. Here's the HTML, which seems unassuming:

<!DOCTYPE html>

<html><head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0, shrink-to-fit=no">

<link rel="stylesheet" href="dist/css/bootstrap.min.css">

<link rel="stylesheet" href="dist/css/custom.min.css">

</head><body>

<main class="page landing-page">

<section class="clean-block clean-hero" id="hero">

<div class="text">

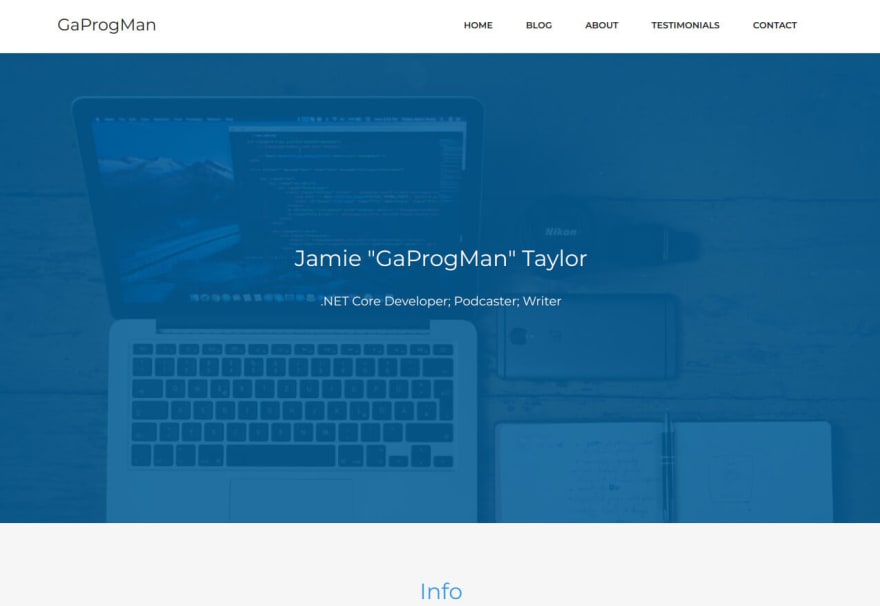

<h2>Jamie “GaProgMan” Taylor<br></h2>

<p>.NET Core Developer; Podcaster; Writer</p>

</div>

</section>

</main>

</body>

</html>

Then some of the contents of custom.css:

/* other rules, which override

 * default bootstrap styles here

 */

#hero {

background-image: url('…/img/header.jpg');

color: rgba(0,92,151,0.85);

}

custom.css was designed as a file which overrides some of the default bootstrap styles, so it must be loaded after bootstrap, which is why the order of the css files is important.

All of this creates something which matches the following screenshot:

The Issue - And How Browsers* Render HTML

* = some browsers anyway

I’m about to explain how browsers do a fetch, render, fetch, and repaint. I’m about to get the minutiae wrong, as I’m going to be doing it from a very high level. But the idea is, kind of, right.

So when the page is accessed, the above HTML is downloaded. The browser starts to parse the HTML and sends off requests for the css files that are linked. With me so far?

Because bootstrap.min.css is requested first, regardless of whether custom.min.css comes back faster or not, the browser will parse and apply the rules from bootstrap.min.css first. The browser will only apply the style rules from custom once bootstrap has been applied.

This means that the section with the “hero” id will not have the background-image rule applied until AFTER bootstrap has been downloaded and applied. Even then, the browser still needs to download the image, too. So we’ll be waiting a long time for the image to download.

A quick aside on how I test: I aim to design sites from a mobile first point of view; when I’m doing that, I test them from a mobile first point of view too. This usually means that I throttle my internet connection down to a low-quality 3G connection during my testing loop.

On that intentionally throttled connection, I found that the header image would take, on average, 2-3 seconds to load and appear.

Not good.

What’s a Dev To Do?

I spent a little time thinking about what I could do. I made the header image smaller; I put it through tools like TinyPng; I moved the image to a very fast server; I even looked at using a caching reverse proxy service like CloudFlare.

I looked into using Brotli to compress the image at the server (but that wouldn’t work on some browsers); I looked into using the srcset attribute; I even looked into using server-side caching.

Then I went for lunch.

When I got back from lunch, I stripped out bootstrap and realised that the bottleneck became how fast the server could serve the image. Which, over my throttled connection, took around 400 ms.

Wait a minute. Why would bootstrap be causing this?

It wasn’t.

CSS File Order

Remember when I said earlier that we had to wait for bootstrap to be downloaded and applied before the background-image rule for "hero" could be applied? Well, why not create a separate css file which just had that rule?

So I rewrote the HTML:

<!DOCTYPE html>

<html><head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0, shrink-to-fit=no">

<link rel="stylesheet" href="dist/css/header.min.css">

<link rel="stylesheet" href="dist/css/bootstrap.min.css">

<link rel="stylesheet" href="dist/css/custom.min.css">

</head><body>

<main class="page landing-page">

<section class="clean-block clean-hero" id="hero">

<div class="text">

<h2>Jamie “GaProgMan” Taylor<br></h2>

<p>.NET Core Developer; Podcaster; Writer</p>

</div>

</section>

</main>

</body>

</html>

and the only rule in header.min.css was:

#hero {

background-image: url('…/img/header.jpg');

color: rgba(0,92,151,0.85);

}

This meant that bootstrap didn’t need to be loaded before the image had to be downloaded.
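To make the reasoning concrete, here is a toy model of the request chain, sketched in Python. It follows the simplified picture used in this post (the image is only requested once the stylesheet holding the rule, and every stylesheet linked before it, has arrived), which is not exactly how real browsers schedule work. The function name and every timing below are made up for illustration; none of them are measurements from the site.

```python
def hero_image_ready_ms(html_ms, stylesheet_ms, rule_index, image_ms):
    """Toy model: time until the hero background image has arrived.

    html_ms       -- time to download the HTML
    stylesheet_ms -- download time of each stylesheet, in <link> order
                     (all requested in parallel once the HTML is parsed)
    rule_index    -- index of the stylesheet holding the #hero rule
    image_ms      -- time to download the image once it is requested
    """
    # Simplified assumption: the rule is only applied once its own
    # stylesheet and every stylesheet before it have been downloaded.
    blocking = stylesheet_ms[: rule_index + 1]
    rule_applied = html_ms + max(blocking)
    return rule_applied + image_ms


# Hypothetical timings in milliseconds, NOT measurements from the post.
HTML, HEADER_CSS, BOOTSTRAP_CSS, CUSTOM_CSS, IMAGE = 100, 50, 1500, 200, 400

# Original order: bootstrap, custom (the rule lives in custom, index 1).
before = hero_image_ready_ms(HTML, [BOOTSTRAP_CSS, CUSTOM_CSS], 1, IMAGE)

# New order: header, bootstrap, custom (the rule lives in header, index 0).
after = hero_image_ready_ms(
    HTML, [HEADER_CSS, BOOTSTRAP_CSS, CUSTOM_CSS], 0, IMAGE
)

print(before, after)
```

With these made-up numbers the model gives 2000 ms before and 550 ms after: the large stylesheet simply drops out of the chain that leads to the image request.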

Great success! The hero banner and nav at the top of the page would load in around 600ms. Since the design placed these items (and nothing else) above the fold, it meant that I wasn’t bothered about how long the rest of the content took to load.

But We Can Do Better

The CSS file was great, and super fast. But the browser had to make one more round trip to the server in order to download the background image. So how do we reduce that?

We can’t assume that the server will support HTTP/2, which would allow us to push more content to the client in parallel. So what could I do?

Enter: Base64 strings

For those who don’t know, Base64 is a way of encoding data as text - it’s in no way secure, but can be useful if you need to transmit binary data via an ASCII only medium.

You can take an image, encode it as a base64 string, and use that string as the value of the src attribute for an image element. This technique does have its drawbacks, though - the chief two being:

- browser support isn’t perfect
  - unless you don’t care about targeting Microsoft browsers
- because of the sheer length of the base64 representation of an image, it can take a little longer to download
  - the base64 string for the screenshot of the rendered website design at the top of this post is 80,161 characters long, for instance
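The size cost in that second point can be checked with a few lines of Python. This is only a minimal sketch: `to_data_uri` is a hypothetical helper name, and the bytes used here are a stand-in for a real image file, not the hero image from the site.

```python
import base64


def to_data_uri(data: bytes, mime_type: str) -> str:
    """Encode raw bytes as a base64 data URI (hypothetical helper)."""
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


# Stand-in payload; in practice you would read the bytes of an image file.
fake_image = bytes(range(256)) * 12  # 3072 bytes

uri = to_data_uri(fake_image, "image/jpeg")
encoded_length = len(uri) - len("data:image/jpeg;base64,")

# Base64 turns every 3 bytes into 4 ASCII characters.
print(len(fake_image), encoded_length)
```

Here 3072 bytes become 4096 characters, so the encoded form is about a third larger than the input, which is where the extra download time comes from.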

BUT if you combine a base64 string with gzip, you can get pretty good transfer speeds. So I generated a base64 string for my hero image and altered the header.min.css file:

#hero {

background-image: url('data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAAUEBAQEAwUEBAQGBQUGCA0ICAcHC /* and the rest of the base64 string here*/ 5c1O5zUrxIMGEJWl6f/2Q=');

color: rgba(0,92,151,0.85);

}

This no longer required the extra round trip to the server, which shaved another 50ms from the initial download. With the added bonus that the css would be cached in the browser as soon as it was downloaded.
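If you want to see what gzip does to a stylesheet like this, the following Python sketch builds a rule of the same shape and compares sizes. It rests on some assumptions: seeded pseudo-random bytes stand in for JPEG data (already-compressed images look close to random), `gzip.compress` approximates what a server with gzip enabled would send, and none of the numbers it prints relate to the real header image.

```python
import base64
import gzip
import random

# Stand-in for JPEG data: seeded pseudo-random bytes, NOT the real image.
rng = random.Random(0)
fake_jpeg = bytes(rng.getrandbits(8) for _ in range(30_000))

encoded = base64.b64encode(fake_jpeg).decode("ascii")

# Same shape as the rule in header.min.css, with the string inlined.
css = (
    "#hero {"
    f"background-image: url('data:image/jpeg;base64,{encoded}');"
    "color: rgba(0,92,151,0.85);"
    "}"
)
css_bytes = css.encode("ascii")

# Roughly what a gzip-enabled server would put on the wire.
gzipped = gzip.compress(css_bytes)

print("raw image bytes: ", len(fake_jpeg))
print("css with base64: ", len(css_bytes))
print("css after gzip:  ", len(gzipped))
```

Base64 text only uses 64 different characters, so gzip can win back most of the encoding overhead; the compressed stylesheet should land close to the size of the original image bytes.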

Reflections

I didn’t really need to go the extra step (using a base64 string) but, if I’m honest with you, I was simply using it as an experiment to see whether it could be faster.

It’s this kind of micro optimisation (moving simpler css rules above blocking calls) which can really have a huge effect on the perceived speed of your sites. Like I said at the top of this article, I’m not the best at front end dev, but thought I’d share this.

Also, I’ve probably not done this in a way that is even vaguely “correct” or an optimised way. If you were me, what would you have done?

By : Jamie
