In Supervised Learning, we mostly deal with two types of variables, i.e. **numerical** variables and categorical variables. Regression deals with numerical variables, while **classification** deals with categorical variables.

Regression is one of the most popular statistical techniques used for Predictive Modelling and Data Mining in the world of Data Science. Basically, Regression Analysis is a technique used for determining the relationship between two or more variables of interest.

In practice, however, only two or three of the more than ten types of regression are commonly used, with Linear Regression and Logistic Regression being the most widely used. So, today we’re going to explore the following four Regression Analysis techniques:

- Simple Linear Regression

- Ridge Regression

- Lasso Regression

- ElasticNet Regression

We will look at their applications, as well as the differences among them, as we go while working on a Student’s Score Prediction dataset. Let’s get started.
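
As a rough preview of how the four techniques differ, here is a minimal sketch in plain Python for the single-feature case. It assumes one common textbook form of the penalties (an L1 term for Lasso, a squared L2 term for Ridge, both for ElasticNet); the function names, the penalty strengths `l1` and `l2`, and the hours/scores values are hypothetical and are not taken from the Student’s Score Prediction dataset. Libraries scale these penalties differently, so the numbers are only illustrative.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t and clip at zero (the L1 shrinkage step)."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def penalized_fit(x, y, l1=0.0, l2=0.0):
    """Fit y ~ beta0 + beta1 * x for a single feature by minimising
    0.5 * sum((y - beta0 - beta1 * x) ** 2) + l1 * |beta1| + 0.5 * l2 * beta1 ** 2.
    The intercept beta0 is not penalised (an assumption of this sketch).
    """
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    beta1 = soft_threshold(sxy, l1) / (sxx + l2)
    beta0 = y_mean - beta1 * x_mean
    return beta0, beta1


# Hypothetical data: hours studied and the score obtained.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [20.0, 30.0, 41.0, 50.0, 59.0]

# (l1, l2) pairs; zero switches the corresponding penalty off.
settings = {
    "linear": (0.0, 0.0),
    "ridge": (0.0, 10.0),
    "lasso": (20.0, 0.0),
    "elastic net": (20.0, 10.0),
}

slopes = {}
for name, (l1, l2) in settings.items():
    beta0, beta1 = penalized_fit(hours, scores, l1=l1, l2=l2)
    slopes[name] = beta1
    print(f"{name:12s} intercept={beta0:6.2f} slope={beta1:5.2f}")
```

With no penalty the fit is ordinary linear regression. Each penalty shrinks the slope toward zero, and a large enough `l1` sets it to exactly zero, which is the behaviour that distinguishes Lasso from Ridge.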

1. Linear Regression

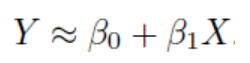

It is the simplest form of regression. As the name suggests, if the variables of interest share a linear relationship, then the Linear Regression algorithm is applicable to them. If there is a single independent variable (here, Hours), then it is a Simple Linear Regression. If there is more than one independent variable, then it is a Multiple Linear Regression. The mathematical equation that approximates the linear relationship between the independent (predictor) variable X and the dependent (criterion) variable Y is:

$$Y = \beta_0 + \beta_1 X$$

where β0 and β1 are the intercept and slope respectively, which are also known as the parameters or model coefficients.
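
To make the intercept β0 and slope β1 concrete, here is a minimal sketch of a least-squares fit for Simple Linear Regression in plain Python. The function name and the hours/scores values are hypothetical and are not taken from the article’s dataset; the formulas are the standard closed-form least-squares estimates.

```python
def fit_simple_linear_regression(x, y):
    """Return (beta0, beta1) that minimise the sum of squared residuals
    of the line y = beta0 + beta1 * x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    # Slope: covariance of x and y divided by the variance of x.
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    beta1 = sxy / sxx
    # Intercept: the fitted line passes through the point of means.
    beta0 = y_mean - beta1 * x_mean
    return beta0, beta1


# Hypothetical data: hours studied (X) and the score obtained (Y).
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [20.0, 30.0, 41.0, 50.0, 59.0]

beta0, beta1 = fit_simple_linear_regression(hours, scores)
predicted_score = beta0 + beta1 * 6.0  # prediction for 6 hours of study

print(f"intercept={beta0:.2f} slope={beta1:.2f}")
print(f"predicted score for 6 hours: {predicted_score:.2f}")
```

Here the slope is read as the expected change in score per extra hour of study, and the intercept as the predicted score at zero hours.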
