Ian Goodfellow introduced Generative Adversarial Networks (GAN) in 2014. It was one of the most beautiful, yet straightforward implementations of Neural Networks, and it involved two Neural Networks competing against each other. _Yann LeCun, _the founding father of Convolutional Neural Networks (CNNs), described GANs as “the most interesting idea in the last ten years in Machine Learning“.

In simple words, the idea behind GANs can be summarized like this:

- Two Neural Networks are involved.

- One of the networks, the Generator, starts off with a random data distribution and tries to replicate a particular type of distribution.

- The other network, the Discriminator, through subsequent training, gets better at classifying a forged distribution from a real one.

- Both of these networks play a min-max game where one is trying to outsmart the other.

Easy peasy lemon squeezy… but when you actually try to implement them, they often don’t learn the way you expect them to. One common reason is the overly simplistic loss function.

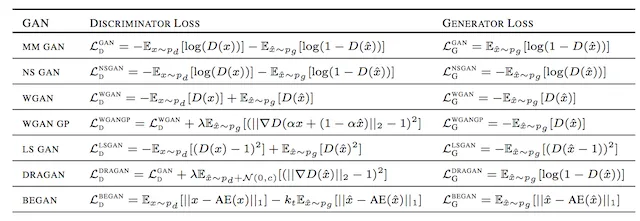

In this blog post, we will take a closer look at GANs and the different variations to their loss functions, so that we can get a better insight into how the GAN works while addressing the unexpected performance issues.

Standard GAN loss function (min-max GAN loss)

The standard GAN loss function, also known as the **min-max loss, **was first described in a 2014 paper by Ian Goodfellow et al., titled “Generative Adversarial Networks“.

The generator tries to minimize this function while the discriminator tries to maximize it. Looking at it as a min-max game, this formulation of the loss seemed effective.

In practice, it saturates for the generator, meaning that the generator quite frequently stops training if it doesn’t catch up with the discriminator.

The Standard GAN loss function can further be categorized into two parts: Discriminator loss and Generator loss.

#computer vision #deep learning #generative models