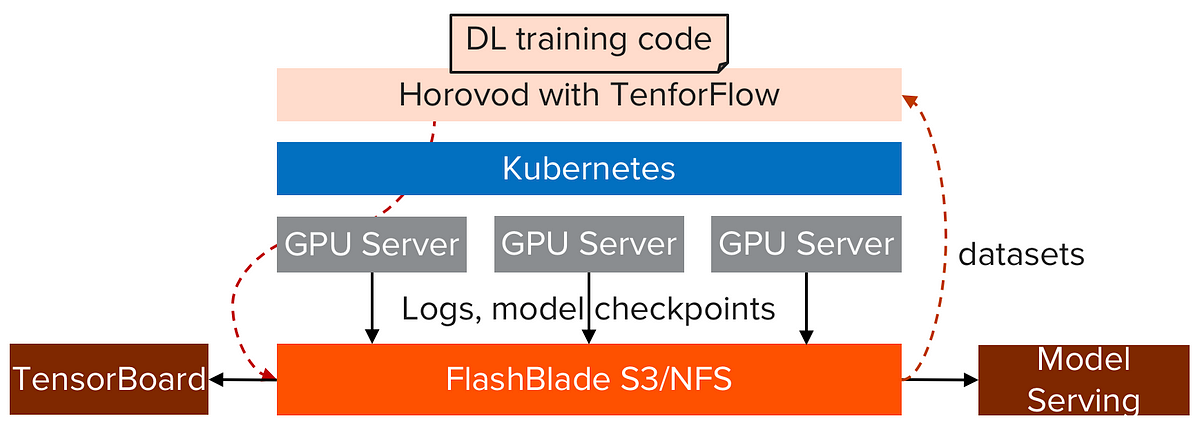

You may have noticed that even a powerful machine like the Nvidia DGX cannot always train a deep learning model quickly enough, not to mention the long wait just to copy data onto it. Datasets are getting larger, GPUs are disaggregated from storage, and workers with GPUs need to coordinate for model checkpointing and log saving. As your system grows beyond a single server, your team will want to share both GPU hardware and data easily.

Enter distributed training with Horovod on Kubernetes. In this post, I will walk through the setup for training a deep learning model in a multi-worker distributed environment with Horovod on Kubernetes.

#tensorflow #s3 #horovod #kubernetes #distributed-deep-learning
