Gender bias in credit scoring AI?

A few months ago, a number of Apple Card users in the US reported that they and their partners had been allocated vastly different credit limits on the branded credit card, despite having the same income and credit score (see BBC article). Steve Wozniak, a co-founder of Apple, tweeted that his credit limit on the card was ten times higher than his wife’s, despite the couple having the same credit limit on all their other cards.

The Department of Financial Services in New York, a financial services regulator, is investigating allegations that the users’ gender may be the basis of the disparity. Apple is keen to point out that Goldman Sachs is responsible for the algorithm, which seems at odds with Apple’s marketing slogan ‘Created by Apple, not a bank’.

Since the regulator’s investigation is ongoing and no bias has yet been proven, I am writing only in hypotheticals in this article.

Bias in AI used in the justice system

The Apple Card story isn’t the only recent example of algorithmic bias hitting the headlines. In July last year, the NAACP (National Association for the Advancement of Colored People) in the US signed a statement requesting a moratorium on the use of automated decision-making tools, since some of them have been shown to have a racial bias when used to predict recidivism — in other words, how likely an offender is to re-offend.

In 2013, Eric Loomis was sentenced to six years in prison, after the state of Wisconsin used a program called COMPAS to calculate his odds of committing another crime. COMPAS is a proprietary algorithm whose inner workings are known only to its vendor Equivant. Loomis attempted to challenge the use of the algorithm in Wisconsin’s Supreme Court but his challenge was ultimately denied.

Unfortunately, incidents such as these are only worsening the widely held perception of AI as a dangerous tool: opaque, under-regulated, and capable of encoding the worst of society’s prejudices.

How can an AI be prejudiced, racist, or biased? What went wrong?

I will focus here on the example of a loan application, since it is a simpler problem to frame and analyse, but the points I make are generalisable to any kind of bias and protected category.

I would like to point out first that I strongly doubt that anybody at Apple or Goldman Sachs has sat down and created an explicit set of rules that take gender into account for loan decisions.

Let us imagine that we are creating a machine learning model that predicts the probability of a person defaulting on a loan. There are a number of ‘protected categories’, such as gender, on which we are not allowed to discriminate.

Developing and training a loan decision AI is the kind of ‘vanilla’ data science problem that routinely pops up on Kaggle (a website that lets you participate in data science competitions) and that aspiring data scientists can expect to be asked about in job interviews. The recipe to make a robot loan officer is as follows:

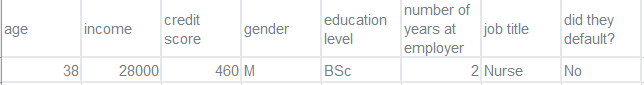

Imagine you have a large table of 10,000 rows, each describing a loan applicant that your bank has seen in the past:

An example table of data about potential loan applicants.

The final column is what we want to predict.

You would take this data and split the rows into three groups, called the training set, the validation set, and the test set.
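The three-way split can be sketched in a few lines of Python. This is only an illustration under assumptions of my own: the function name `split_rows`, the 60/20/20 proportions, and the use of plain row IDs in place of real applicant records are all hypothetical, not something any particular bank uses.

```python
import random


def split_rows(rows, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle the rows, then cut them into training, validation, and test sets."""
    shuffled = list(rows)                  # copy, so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test_rows = shuffled[:n_test]
    val_rows = shuffled[n_test:n_test + n_val]
    train_rows = shuffled[n_test + n_val:]
    return train_rows, val_rows, test_rows


# Hypothetical stand-in for the 10,000 past applicants: row IDs only.
rows = list(range(10_000))
train_rows, val_rows, test_rows = split_rows(rows)
print(len(train_rows), len(val_rows), len(test_rows))
```

Shuffling before cutting matters: if the table happens to be sorted (by date or by outcome, say), taking the first rows as one set would give the three groups very different make-ups.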

You then pick a machine learning algorithm, such as Logistic Regression, Random Forest, or a Neural Network, and let it ‘learn’ from the training rows without letting it see the validation rows. You then test it on the validation set. You rinse and repeat for different algorithms, tweaking the algorithms each time, and the model you will eventually deploy is the one that scored the highest on your validation rows.

When you have finished you are allowed to test your model on the test dataset and check its performance.
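The whole train, validate, and test loop can be sketched as follows. Everything here is a simplification of my own: the data is synthetic, each applicant is reduced to a single made-up `debt-to-income` ratio, and the two toy ‘models’ (`fit_majority` and `fit_threshold`) are hypothetical stand-ins for the real algorithms named above. Only the shape of the procedure is the point.

```python
import random

rng = random.Random(0)


def make_row():
    """Synthetic applicant: (debt-to-income ratio, did they default?)."""
    ratio = rng.random()
    defaulted = rng.random() < ratio  # assumption: higher ratio, likelier default
    return ratio, defaulted


rows = [make_row() for _ in range(3000)]
train_rows, val_rows, test_rows = rows[:1800], rows[1800:2400], rows[2400:]


def accuracy(predict, data):
    """Fraction of rows where the prediction matches the real outcome."""
    return sum(predict(ratio) == defaulted for ratio, defaulted in data) / len(data)


def fit_majority(train):
    """Toy model 1: always predict the most common outcome seen in training."""
    majority = sum(defaulted for _, defaulted in train) * 2 > len(train)
    return lambda ratio: majority


def fit_threshold(train):
    """Toy model 2: predict default above the cut-off that best fits training."""
    cutoffs = [i / 20 for i in range(21)]
    best = max(cutoffs, key=lambda c: accuracy(lambda r: r > c, train))
    return lambda ratio: ratio > best


candidates = {"majority": fit_majority, "threshold": fit_threshold}
models, scores = {}, {}
for name, fit in candidates.items():
    models[name] = fit(train_rows)                    # learn from training rows only
    scores[name] = accuracy(models[name], val_rows)   # score on validation rows

best_name = max(scores, key=scores.get)               # highest validation score wins
test_score = accuracy(models[best_name], test_rows)   # test rows are used once, here
print(scores, "chosen:", best_name, "test accuracy:", round(test_score, 3))
```

The test rows are touched only after the winner has been chosen. Because the validation score was used to pick the model, it is slightly optimistic; the test score is the more honest estimate of how the model will behave on applicants it has never seen.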
