Building a Web Scraper With Python in 30 minutes

My Python skills are basic, so if you’re here without much coding experience, I hope this guide helps you gain more knowledge and understanding.

The Perfect Beginner Project

To source data for ML, AI, or data science projects, you’ll often rely on databases, APIs, or ready-made CSV datasets. But what if you can’t find a dataset you want to use and analyze? That’s where a web scraper comes in.

Working on projects is crucial to solidifying the knowledge you gain. When I began this project, I was a little overwhelmed because I truly didn’t know a thing.

Sticking with it, finding answers to my questions on Stack Overflow, and a lot of trial and error helped me really understand how programming works — how web pages work, how to use loops, and how to build functions and keep data clean. It makes building a web scraper the perfect beginner project for anyone starting out in Python.

What we’ll cover

This guide will take you through understanding HTML web pages, building a web scraper using Python, and creating a DataFrame with pandas. It’ll cover data quality, data cleaning, and data-type conversion — entirely step by step and with instructions, code, and explanations on how every piece of it works. I hope you code along and enjoy!

Disclaimer

Websites can restrict or ban scraping of their data, and you can be subject to legal ramifications depending on where and how you attempt to scrape information. Websites usually describe this in their terms of use and in their robots.txt file, which usually lives at an address like this: [www.example.com/robots.txt](http://www.example.com/robots.txt). So scrape responsibly, and respect the robots.txt.
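If you’d like to check robots.txt rules from Python, the standard library ships a small parser for them. Here’s a minimal sketch; the robots.txt content and the example.com URLs are made up for illustration and don’t come from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for this example only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
# parse() takes the file as a list of lines, so no network request is made here.
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

# can_fetch(user_agent, url) answers: may this bot request this URL?
allowed_public = parser.can_fetch("*", "https://www.example.com/search/")
allowed_private = parser.can_fetch("*", "https://www.example.com/private/data")
print(allowed_public, allowed_private)
```

For a live site you would point the parser at the real file with `set_url()` and `read()` instead of passing lines by hand.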

What’s Web Scraping?

Web scraping consists of gathering data available on websites. This can be done manually by a human or by using a bot.

A bot is a program you build that helps you extract the data you need much quicker than a human’s hand and eyes can.

What Are We Going to Scrape?

It’s essential to identify the goal of your scraping right from the start. We don’t want to scrape any data we don’t actually need.

For this project, we’ll scrape data from IMDb’s “Top 1,000” movies, specifically the top 50 movies on this page. Here is the information we’ll gather from each movie listing:

- The title

- The year it was released

- How long the movie is

- IMDb’s rating of the movie

- The Metascore of the movie

- How many votes the movie got

- The U.S. gross earnings of the movie

How Do Web Scrapers Work?

Web scrapers gather website data in the same way a human would: They go to a web page of the website, get the relevant data, and move on to the next web page — only much faster.

Every website has a different structure. These are a few important things to think about when building a web scraper:

- What’s the structure of the web page that contains the data you’re looking for?

- How do we get to those web pages?

- Will you need to gather more data from the next page?

The URL

To begin, let’s look at the URL of the page we want to scrape.

We notice a few things about the URL:

- `?` acts as a separator: it indicates the end of the URL resource path and the start of the parameters

- `groups=top_1000` specifies what the page will be about

- `&ref_=adv_prv` takes us to the next or the previous page. The reference is the page we’re currently on. `adv_nxt` and `adv_prv` are two possible values, translated to advance to next page and advance to previous page.

When you navigate back and forth through the pages, you’ll notice only the parameters change. Keep this structure in mind as it’s helpful to know as we build the scraper.
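To see that structure for yourself, you can split the URL into its path and its parameters with Python’s standard library. This is only a small sketch and isn’t part of the scraper itself; it uses the same URL we’ll request later in this guide.

```python
from urllib.parse import urlsplit, parse_qs

url = "https://www.imdb.com/search/title/?groups=top_1000&ref_=adv_prv"

# urlsplit separates the resource path from everything after the "?"
parts = urlsplit(url)

# parse_qs turns "key=value&key=value" into a dictionary of lists
params = parse_qs(parts.query)

print(parts.path)
print(params)
```

Each parameter comes back as a list because a key is allowed to appear more than once in a URL.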

The HTML

HTML stands for hypertext markup language, and most web pages are written using it. Loosely speaking, HTML is the language a server uses to describe a page to your browser, and websites are what gets said.

When you access a URL, your computer sends a request to the server that hosts the site. Any technology can be running on that server (JavaScript, Ruby, Java, etc.) to process your request. Eventually, the server returns a response to your browser; oftentimes, that response will be in the form of an HTML page for your browser to display.

HTML describes the structure of a web page semantically, and originally included cues for the appearance of the document.

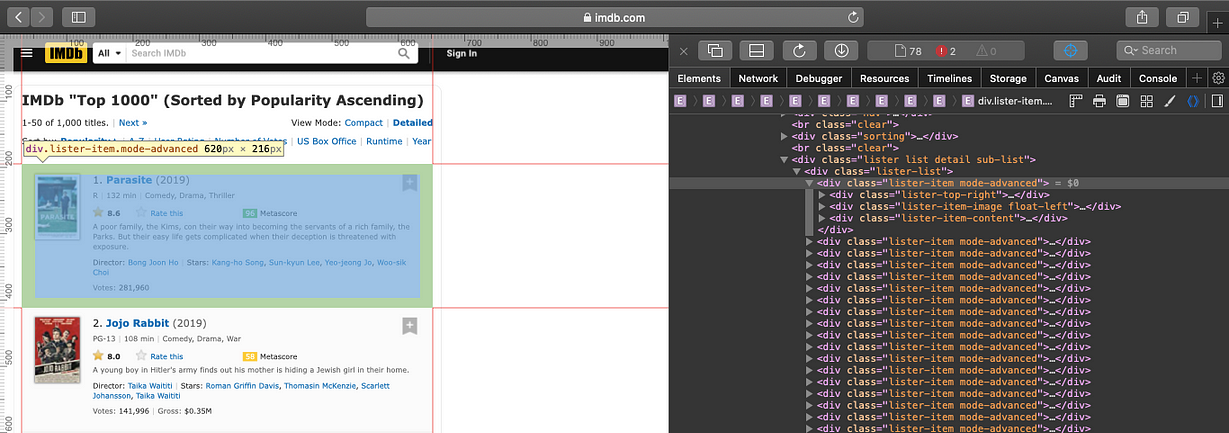

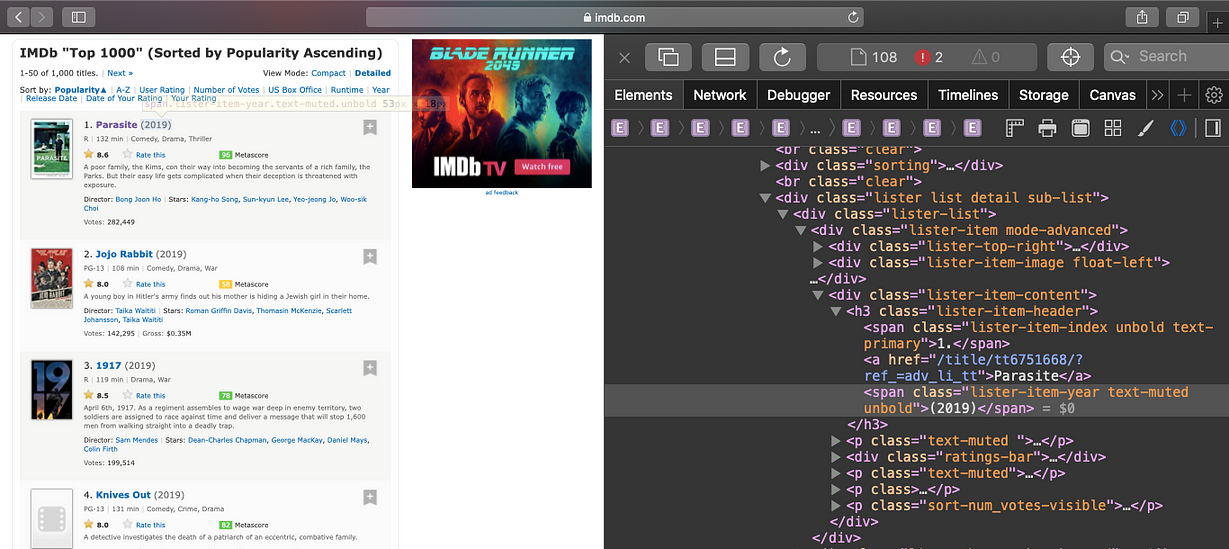

Inspect HTML

Chrome, Firefox, and Safari users can examine the HTML structure of any page by right-clicking your mouse and pressing the Inspect option.

A menu will appear on the bottom or right-hand side of your page with a long list of all the HTML tags housing the information displayed to your browser window. If you’re in Safari (photo above), you’ll want to press the button to the left of the search bar, which looks like a target. If you’re in Chrome or Firefox, there’s a small box with an arrow icon in it at the top left that you’ll use to inspect.

Once clicked, if you move your cursor over any element of the page, you’ll notice it’ll get highlighted along with the HTML tags in the menu that they’re associated with, as seen above.

Knowing how to read the basic structure of a page’s HTML is important so we can turn to Python to help us extract the data from the page.

Tools

The tools we’re going to use are:

- Repl (optional) is a simple, interactive computer-programming environment used via your web browser. I recommend using this just for code-along purposes if you don’t already have an IDE. If you use Repl, make sure you’re using the Python environment.

- Requests will allow us to send HTTP requests to get HTML files

- BeautifulSoup will help us parse the HTML files

- pandas will help us assemble the data into a DataFrame to clean and analyze it

- NumPy will add support for mathematical functions and tools for working with arrays

Now, Let’s Code

You can follow along below inside your Repl environment or IDE, or you can go directly to the entire code here. Have fun!

Import tools

First, we’ll import the tools we’ll need so we can use them to help us build the scraper and get the data we need.

import requests

from requests import get

from bs4 import BeautifulSoup

import pandas as pd

import numpy as np

Movies in English

It’s very likely when we run our code to scrape some of these movies, we’ll get the movie names translated into the main language of the country the movie originated in.

Use this code to make sure we get English-translated titles from all the movies we scrape:

headers = {"Accept-Language": "en-US, en;q=0.5"}

Request contents of the URL

Get the contents of the page we’re looking at by requesting the URL:

url = "https://www.imdb.com/search/title/?groups=top_1000&ref_=adv_prv"

results = requests.get(url, headers=headers)

Breaking URL requests down:

- `url` is the variable we create and assign the URL to

- `results` is the variable we create to store the response from our `requests.get` call

- `requests.get(url, headers=headers)` is the function we use to grab the contents of the URL. The `headers` part tells our scraper to bring us English, based on our previous line of code.

Using BeautifulSoup

Make the content we grabbed easy to read by using BeautifulSoup:

soup = BeautifulSoup(results.text, "html.parser")

print(soup.prettify())

Breaking BeautifulSoup down:

- `soup` is the variable we assign the `BeautifulSoup` object to. The `"html.parser"` argument tells it to use Python’s built-in HTML parser, which allows Python to read the components of the page rather than treating it as one long string

- `print(soup.prettify())` will print what we’ve grabbed in a more structured tree format, making it easier to read

The results of the print will look more ordered, like this:

</div>

</div>

<div class="lister-item mode-advanced">

<div class="lister-top-right">

<div class="ribbonize" data-caller="filmosearch" data-tconst="tt0071562"></div>

</div>

<div class="lister-item-image float-left">

<a href="/title/tt0071562/"> <img alt="The Godfather: Part II"class="loadlate" data-tconst="tt0071562" height="98" loadlate="https://m.media-amazon.com/images/M/MV5BMWMwMGQzZTItY2JlNC00OWZiLWIyMDctNDk2ZDQ2YjRjMWQ0XkEyXkFqcGdeQXVyNzkwMjQ5NzM@._V1_UY98_CR1,0,67,98_AL_.jpg" src="https://m.media-amazon.com/images/G/01/imdb/images/nopicture/large/film-184890147._CB466725069_.png" width="67"/>

</a> </div>

<div class="lister-item-content">

<h3 class="lister-item-header">

<span class="lister-item-index unbold text-primary">48.</span>

<a href="/title/tt0071562/">The Godfather: Part II</a>

<span class="lister-item-year text-muted unbold">(1974)</span>

</h3>

<p class="text-muted">

<span class="certificate">R</span>

<span class="ghost">|</span>

<span class="runtime">202 min</span>

<span class="ghost">|</span>

<span class="genre">

Crime, Drama </span>

</p>

<div class="ratings-bar">

<div class="inline-block ratings-imdb-rating" data-value="9" name="ir">

<span class="global-sprite rating-star imdb-rating"></span>

<strong>9.0</strong>

</div>

<div class="inline-block ratings-user-rating">

<span class="userRatingValue" data-tconst="tt0071562" id="urv_tt0071562">

etc...
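If you want to try the parsing step without hitting a website, you can hand BeautifulSoup a small string of HTML. The snippet below is a sketch: `sample_html` is a hand-written stand-in for `results.text`, trimmed down from the output above.

```python
from bs4 import BeautifulSoup

# A tiny hand-written page standing in for results.text (not a real response).
sample_html = (
    "<html><body><h3>"
    "<a href='/title/tt0071562/'>The Godfather: Part II</a>"
    "</h3></body></html>"
)

soup = BeautifulSoup(sample_html, "html.parser")

# Once parsed, we can walk the page by tag name instead of searching a string.
link = soup.h3.a
print(link.text)
print(link["href"])
```

The same `soup` object is what we’ll search through in the rest of this guide.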

Initialize your storage

When we write code to extract our data, we need somewhere to store that data. Create variables for each type of data you’ll extract, and assign an empty list to it, indicated by square brackets []. Remember the list of information we wanted to grab from each movie from earlier:

#initialize empty lists where you'll store your data

titles = []

years = []

time = []

imdb_ratings = []

metascores = []

votes = []

us_gross = []

Your code should now look something like this. Note that we can delete our print function until we need to use it again.

import requests

from requests import get

from bs4 import BeautifulSoup

import pandas as pd

import numpy as np

url = "https://www.imdb.com/search/title/?groups=top_1000&ref_=adv_prv"

headers = {"Accept-Language": "en-US, en;q=0.5"}

results = requests.get(url, headers=headers)

soup = BeautifulSoup(results.text, "html.parser")

titles = []

years = []

time = []

imdb_ratings = []

metascores = []

votes = []

us_gross = []

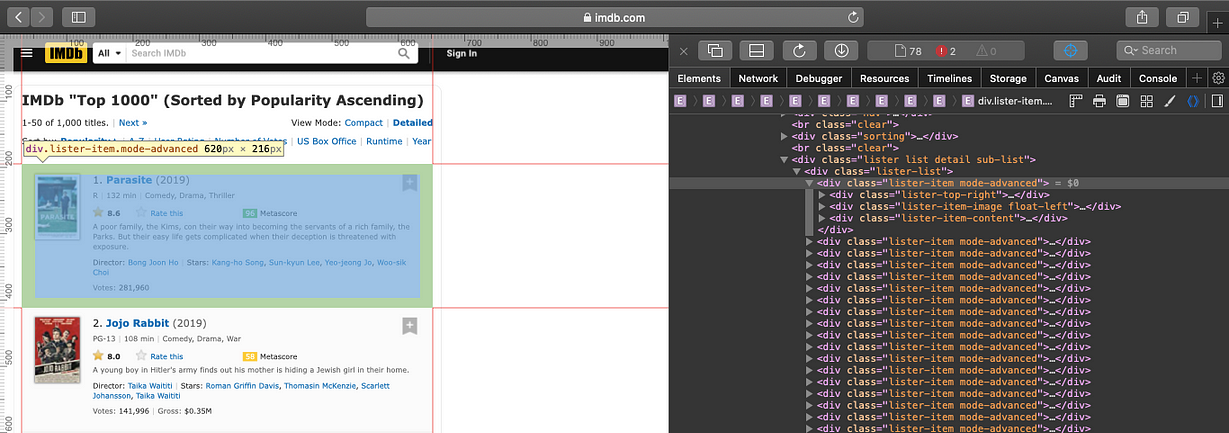

Find the right div container

It’s time to check out the HTML code in our web page.

Go to the web page we’re scraping, inspect it, and hover over a single movie in its entirety, like below:

We need to figure out what distinguishes each of these from other div containers we see.

You’ll notice the list of div elements to the right with a class attribute that has two values: lister-item and mode-advanced.

If you click on each of those, you’ll notice it’ll highlight each movie container on the left of the page, like above.

If we do a quick search within inspect (press Ctrl+F and type lister-item mode-advanced), we’ll see 50 matches representing the 50 movies displayed on a single page. We now know all the information we seek lies within this specific div tag.

Find all lister-item mode-advanced divs

Our next move is to tell our scraper to find all of these lister-item mode-advanced divs:

movie_div = soup.find_all('div', class_='lister-item mode-advanced')

Breaking **find_all** down:

- `movie_div` is the variable we’ll use to store all of the `div` containers with a class of `lister-item mode-advanced`

- The `find_all()` method extracts all the `div` containers that have a `class` attribute of `lister-item mode-advanced` from what we have stored in our variable `soup`
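Here’s a small offline sketch of what `find_all()` does with that class filter. The three `div` elements are hand-written for illustration, and the `sidebar` class is a made-up name that only exists to show what gets skipped.

```python
from bs4 import BeautifulSoup

# Two movie containers plus one unrelated div (the "sidebar" class is invented).
sample_html = """
<div class="lister-item mode-advanced"><h3><a>Movie A</a></h3></div>
<div class="lister-item mode-advanced"><h3><a>Movie B</a></h3></div>
<div class="sidebar"><h3><a>Not a movie</a></h3></div>
"""

soup = BeautifulSoup(sample_html, "html.parser")

# Passing the full class string matches divs whose class attribute is exactly that.
movie_div = soup.find_all("div", class_="lister-item mode-advanced")

found_titles = [div.h3.a.text for div in movie_div]
print(len(movie_div))
print(found_titles)
```

`find_all()` returns a list-like object, which is why we can count it with `len()` and loop over it.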

Get ready to extract each item

Look at the first movie on the page: it’s missing gross earnings! If you look at the second movie, they’ve included it there.

Something to always consider when building a web scraper is the idea that not all the information you seek will be available for you to gather.

In these cases, we need to make sure our web scraper doesn’t stop working or break when it reaches missing data and build around the idea we just don’t know whether or not that’ll happen.
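One way to build around missing data, sketched below, relies on the fact that BeautifulSoup’s `find()` returns `None` when nothing matches. The HTML is hand-written, and the `metascore` class is borrowed from later in this guide as a convenient stand-in for any field that might be absent.

```python
from bs4 import BeautifulSoup

# The first container has a score; the second one is missing it.
sample_html = """
<div class="lister-item mode-advanced"><span class="metascore">96</span></div>
<div class="lister-item mode-advanced"></div>
"""

soup = BeautifulSoup(sample_html, "html.parser")

scores = []
for container in soup.find_all("div", class_="lister-item mode-advanced"):
    tag = container.find("span", class_="metascore")
    # find() gives back None when the tag is absent, so check before using .text
    scores.append(tag.text if tag is not None else "-")

print(scores)
```

Calling `.text` directly on a missing tag would raise an `AttributeError` and stop the scraper, which is exactly what the check avoids.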

Getting into each lister-item mode-advanced div

When we grab each of the items we need in a single lister-item mode-advanced div container, we need the scraper to loop to the next lister-item mode-advanced div container and grab those movie items too. And then it needs to loop to the next one and so on, 50 times for each page. For this to execute, we’ll need to wrap our scraper in a for loop.

#initiate the for loop

#this tells your scraper to iterate through

#every div container we stored in movie_div

for container in movie_div:

Breaking down the **for** loop:

- A `for` loop is used for iterating over a sequence. Our sequence is every `lister-item mode-advanced` div container that we stored in `movie_div`

- `container` is the name of the variable that enters each `div`. You can name this whatever you want (`x`, `loop`, `banana`, `cheese`), and it won’t change the function of the loop.

It can be read like this:

for <variable> in <iterable>:

<do this>

OR in our case...

for each lister-item mode-advanced div container:

scrape these elements

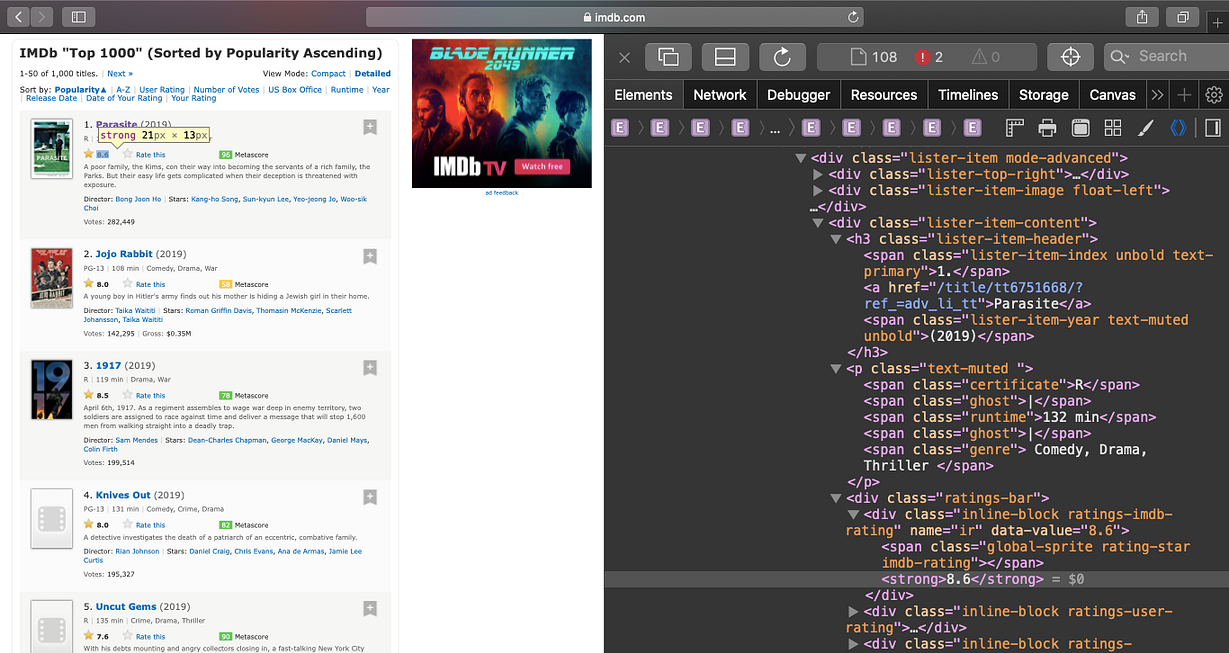

Extract the title of the movie

Beginning with the movie’s name, let’s locate its corresponding HTML line by using inspect and clicking on the title.

We see the name is contained within an anchor tag, `<a>`. This tag is nested within a header tag, `<h3>`. The `<h3>` tag is nested within a `<div>` tag. This `<div>` is the third of the divs nested in the container of the first movie.

name = container.h3.a.text

titles.append(name)

Breaking titles down:

- `name` is the variable we’ll use to store the title data we find

- `container` is what we used in our `for` loop; it holds the current movie container on each pass

- `.h3` and `.a` are attribute notation and tell the scraper to access each of those tags

- `.text` tells the scraper to grab the text nested in the `<a>` tag

- `titles.append(name)` tells the scraper to take what we found and stored in `name` and to add it into our empty list called `titles`, which we created in the beginning

Extract year of release

Let’s locate the movie’s year and its corresponding HTML line by using inspect and clicking on the year.

We see this data is stored within the `<span>` tag below the `<a>` tag that contains the title of the movie. The dot notation, which we used for finding the title data (.h3.a), worked because it was the first `<a>` tag after the `h3` tag. Since the tag we want is the second `<span>` tag, we have to use a different method.

Instead, we can tell our scraper to search by the distinctive mark of the second `<span>`. We’ll use the find() method, which is similar to find_all() except it only returns the first match.

year = container.h3.find('span', class_='lister-item-year').text

years.append(year)

Breaking years down:

- `year` is the variable we’ll use to store the year data we find

- `container` is what we used in our `for` loop; it holds the current movie container on each pass

- `.h3` is attribute notation, which tells the scraper to access that tag

- `.find()` is a method we’ll use to access this particular `<span>` tag

- `('span', class_='lister-item-year')` is the distinctive `<span>` tag we want

- `years.append(year)` tells the scraper to take what we found and stored in `year` and to add it into our empty list called `years` (which we created in the beginning)
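To compare dot notation with `find()` side by side, here’s a short sketch run against the header markup from the printed output earlier. Only the surrounding `div` has been trimmed down by hand.

```python
from bs4 import BeautifulSoup

# Header markup copied from the prettified output shown earlier.
sample_html = """
<div class="lister-item mode-advanced">
  <h3 class="lister-item-header">
    <span class="lister-item-index unbold text-primary">48.</span>
    <a href="/title/tt0071562/">The Godfather: Part II</a>
    <span class="lister-item-year text-muted unbold">(1974)</span>
  </h3>
</div>
"""

container = BeautifulSoup(sample_html, "html.parser").div

# Dot notation always returns the FIRST tag with that name.
title = container.h3.a.text
first_span = container.h3.span.text

# find() with a class filter lets us pick the span we actually want.
year = container.h3.find("span", class_="lister-item-year").text

print(title, first_span, year)
```

Notice that `container.h3.span` lands on the ranking number rather than the year, which is why the class filter is needed.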

Extract length of movie

Locate the movie’s length and its correspondent HTML line by using inspect and clicking on the total minutes.

The data we need can be found in a `<span>` tag with a class of runtime. Like we did with year, we can do something similar:

runtime = container.p.find('span', class_='runtime').text if container.p.find('span', class_='runtime') else '-'

time.append(runtime)

Breaking time down:

- `runtime` is the variable we’ll use to store the time data we find

- `container` is what we used in our `for` loop; it holds the current movie container on each pass

- `.find()` is a method we’ll use to access this particular `<span>` tag

- `('span', class_='runtime')` is the distinctive `<span>` tag we want

- `if container.p.find('span', class_='runtime') else '-'` says if there’s data there, grab it, but if the data is missing, then put a dash there instead

- `.text` tells the scraper to grab that text in the `<span>` tag

- `time.append(runtime)` tells the scraper to take what we found and stored in `runtime` and to add it into our empty list called `time` (which we created in the beginning)

Extract IMDb ratings

Find the movie’s IMDb rating and its corresponding HTML line by using inspect and clicking on the IMDb rating.

Now, we’ll focus on extracting the IMDb rating. The data we need can be found in a `<strong>` tag. Since I don’t see any other `<strong>` tags, we can use attribute notation (dot notation) to grab this data.

imdb = float(container.strong.text)

imdb_ratings.append(imdb)

Breaking IMDb ratings down:

- `imdb` is the variable we’ll use to store the IMDb ratings data it finds

- `container` is what we used in our `for` loop; it holds the current movie container on each pass

- `.strong` is attribute notation that tells the scraper to access that tag

- `.text` tells the scraper to grab that text

- The `float()` function turns the text we find into a float, which is a decimal number

- `imdb_ratings.append(imdb)` tells the scraper to take what we found and stored in `imdb` and to add it into our empty list called `imdb_ratings` (which we created in the beginning)

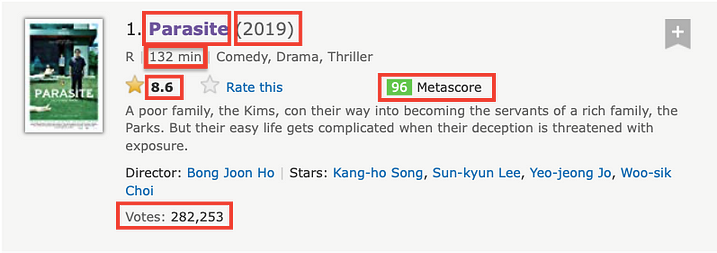

Extract Metascore

Find the movie’s Metascore rating and its corresponding HTML line by using inspect and clicking on the Metascore number.

The data we need can be found in a `<span>` tag that has a class that says metascore favorable.

Before we settle on that, you should notice that, of course, a 96 for “Parasite” shows a favorable rating, but are the others favorable? If you highlight the next movie’s Metascore, you’ll see “JoJo Rabbit” has a class that says metascore mixed. Since these tags are different, it’d be safe to tell the scraper to use just the class metascore when scraping:

m_score = container.find('span', class_='metascore').text if container.find('span', class_='metascore') else '-'

metascores.append(m_score)

Breaking Metascores down:

- `m_score` is the variable we’ll use to store the Metascore-rating data it finds

- `container` is what we used in our `for` loop; it holds the current movie container on each pass

- `.find()` is a method we’ll use to access this particular `<span>` tag

- `('span', class_='metascore')` is the distinctive `<span>` tag we want

- `.text` tells the scraper to grab that text

- `if container.find('span', class_='metascore') else '-'` says if there is data there, grab it, but if the data is missing, then put a dash there

- The text stays a string for now; we’ll convert it to an integer later, during data cleaning

- `metascores.append(m_score)` tells the scraper to take what we found and stored in `m_score` and to add it into our empty list called `metascores` (which we created in the beginning)

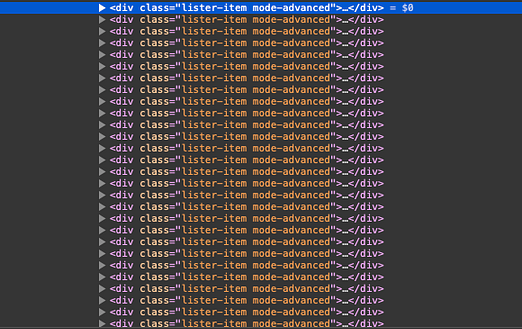

Extract votes and gross earnings

We’re finally onto the final two items we need to extract, but we saved the toughest for last.

Here’s where things get a little tricky. As mentioned earlier, you should have noticed that when we look at the first movie on this list, we don’t see a gross-earnings number. When we look at the second movie on the list, we can see both.

Let’s just have a look at the second movie’s HTML code and go from there.

Both the votes and the gross are highlighted on the right. After looking at the votes and gross containers for movie #2, what do you notice?

As you can see, both of these are in a `<span>` tag that has a name attribute that equals nv and a data-value attribute that holds the values of the distinctive number we need for each.

How can we grab the data for the second one if the search parameters for the first one are the same? How do we tell our scraper to skip over the first one and scrape the second?

This one took a lot of brain flexing, tons of coffee, and a couple late nights to figure out. Here’s how I did it:

nv = container.find_all('span', attrs={'name': 'nv'})

vote = nv[0].text

votes.append(vote)

grosses = nv[1].text if len(nv) > 1 else '-'

us_gross.append(grosses)

Breaking votes and gross down:

- `nv` is an entirely new variable we’ll use to hold both the votes and the gross `<span>` tags

- `container` is what we used in our `for` loop for iterating over each movie

- `find_all()` is the method we’ll use to grab both of the `<span>` tags

- `('span', attrs={'name': 'nv'})` is how we can grab tags by that specific attribute

- `vote` is the variable we’ll use to store the votes we find in the `nv` list

- `nv[0]` tells the scraper to go into the `nv` list and grab the first item, which is the votes because votes come first in our HTML code (Python lists are zero-indexed, so counting starts at 0, not 1)

- `.text` tells the scraper to grab that text

- `votes.append(vote)` tells the scraper to take what we found and stored in `vote` and to add it into our empty list called `votes` (which we created in the beginning)

- `grosses` is the variable we’ll use to store the gross we find in the `nv` list

- `nv[1]` tells the scraper to go into the `nv` list and grab the second item, which is the gross because gross comes second in our HTML code

- `nv[1].text if len(nv) > 1 else '-'` says if the length of `nv` is greater than one, then grab the second item that’s stored. But if the length of `nv` isn’t greater than one, meaning the gross is missing, then put a dash there

- `us_gross.append(grosses)` tells the scraper to take what we found and stored in `grosses` and to add it into our empty list called `us_gross` (which we created in the beginning)
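Here’s the same idea as a sketch you can run offline. The markup and the numbers are invented for illustration; only the `name="nv"` and `data-value` attributes follow what we saw in the inspector.

```python
from bs4 import BeautifulSoup

# Hand-written markup: the first movie has votes only, the second has both.
sample_html = """
<div class="lister-item mode-advanced">
  <span name="nv" data-value="1000">1,000</span>
</div>
<div class="lister-item mode-advanced">
  <span name="nv" data-value="2000">2,000</span>
  <span name="nv" data-value="350000">$0.35M</span>
</div>
"""

soup = BeautifulSoup(sample_html, "html.parser")

votes, us_gross = [], []
for container in soup.find_all("div", class_="lister-item mode-advanced"):
    nv = container.find_all("span", attrs={"name": "nv"})
    votes.append(nv[0].text)
    # Only read the second item when it actually exists.
    us_gross.append(nv[1].text if len(nv) > 1 else "-")

print(votes)
print(us_gross)
```

Checking `len(nv)` first is what keeps `nv[1]` from raising an `IndexError` on movies with no gross listed.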

Your code should now look like this:

import requests

from requests import get

from bs4 import BeautifulSoup

import pandas as pd

import numpy as np

url = "https://www.imdb.com/search/title/?groups=top_1000&ref_=adv_prv"

headers = {"Accept-Language": "en-US, en;q=0.5"}

results = requests.get(url, headers=headers)

soup = BeautifulSoup(results.text, "html.parser")

titles = []

years = []

time = []

imdb_ratings = []

metascores = []

votes = []

us_gross = []

movie_div = soup.find_all('div', class_='lister-item mode-advanced')

for container in movie_div:

    #name

    name = container.h3.a.text

    titles.append(name)

    #year

    year = container.h3.find('span', class_='lister-item-year').text

    years.append(year)

    #time

    runtime = container.p.find('span', class_='runtime').text if container.p.find('span', class_='runtime') else '-'

    time.append(runtime)

    #IMDb rating

    imdb = float(container.strong.text)

    imdb_ratings.append(imdb)

    #metascore

    m_score = container.find('span', class_='metascore').text if container.find('span', class_='metascore') else '-'

    metascores.append(m_score)

    #there are two NV containers, grab both of them as they hold both the votes and the grosses

    nv = container.find_all('span', attrs={'name': 'nv'})

    #filter nv for votes

    vote = nv[0].text

    votes.append(vote)

    #filter nv for gross

    grosses = nv[1].text if len(nv) > 1 else '-'

    us_gross.append(grosses)

Let’s See What We Have So Far

Now that we’ve told our scraper what elements to scrape, let’s use the print function to print out each list we’ve sent our scraped data to:

print(titles)

print(years)

print(time)

print(imdb_ratings)

print(metascores)

print(votes)

print(us_gross)

Our lists look like this:

['Parasite', 'Jojo Rabbit', '1917', 'Knives Out', 'Uncut Gems', 'Once Upon a Time... in Hollywood', 'Joker', 'The Gentlemen', 'Ford v Ferrari', 'Little Women', 'The Irishman', 'The Lighthouse', 'Toy Story 4', 'Marriage Story', 'Avengers: Endgame', 'The Godfather', 'Blade Runner 2049', 'The Shawshank Redemption', 'The Dark Knight', 'Inglourious Basterds', 'Call Me by Your Name', 'The Two Popes', 'Pulp Fiction', 'Inception', 'Interstellar', 'Green Book', 'Blade Runner', 'The Wolf of Wall Street', 'Gone Girl', 'The Shining', 'The Matrix', 'Titanic', 'The Silence of the Lambs', 'Three Billboards Outside Ebbing, Missouri', "Harry Potter and the Sorcerer's Stone", 'The Peanut Butter Falcon', 'The Handmaiden', 'Memories of Murder', 'The Lord of the Rings: The Fellowship of the Ring', 'Gladiator', 'The Martian', 'Bohemian Rhapsody', 'Watchmen', 'Forrest Gump', 'Thor: Ragnarok', 'Casino Royale', 'The Breakfast Club', 'The Godfather: Part II', 'Django Unchained', 'Baby Driver']

['(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(I) (2019)', '(2019)', '(2019)', '(2019)', '(1972)', '(2017)', '(1994)', '(2008)', '(2009)', '(2017)', '(2019)', '(1994)', '(2010)', '(2014)', '(2018)', '(1982)', '(2013)', '(2014)', '(1980)', '(1999)', '(1997)', '(1991)', '(2017)', '(2001)', '(2019)', '(2016)', '(2003)', '(2001)', '(2000)', '(2015)', '(2018)', '(2009)', '(1994)', '(2017)', '(2006)', '(1985)', '(1974)', '(2012)', '(2017)']

['132 min', '108 min', '119 min', '131 min', '135 min', '161 min', '122 min', '113 min', '152 min', '135 min', '209 min', '109 min', '100 min', '137 min', '181 min', '175 min', '164 min', '142 min', '152 min', '153 min', '132 min', '125 min', '154 min', '148 min', '169 min', '130 min', '117min', '180 min', '149 min', '146 min', '136 min', '194 min', '118 min', '115 min', '152 min', '97 min', '145 min', '132 min', '178 min', '155 min', '144 min', '134 min', '162 min', '142 min', '130 min', '144 min', '97 min', '202 min', '165 min', '113 min']

[8.6, 8.0, 8.5, 8.0, 7.6, 7.7, 8.6, 8.1, 8.2, 8.0, 8.0, 7.7, 7.8, 8.0, 8.5, 9.2, 8.0, 9.3, 9.0, 8.3, 7.9, 7.6, 8.9, 8.8, 8.6, 8.2, 8.1, 8.2, 8.1,8.4, 8.7, 7.8, 8.6, 8.2, 7.6, 7.7, 8.1, 8.1, 8.8, 8.5, 8.0, 8.0, 7.6, 8.8, 7.9, 8.0, 7.9, 9.0, 8.4, 7.6]

['96 ', '58 ', '78 ', '82 ', '90 ', '83 ', '59 ', '51 ', '81 ', '91 ', '94 ', '83 ', '84 ', '93 ', '78 ', '100 ', '81 ', '80 ', '84 ', '69 ', '93 ', '75 ', '94 ', '74 ', '74 ', '69 ', '84 ', '75 ', '79 ', '66 ', '73 ', '75 ', '85 ', '88 ', '64 ', '70 ', '84 ', '82 ', '92 ', '67 ', '80 ', '49 ', '56 ', '82 ', '74 ', '80 ', '62 ', '90 ', '81 ', '86 ']

['282,699', '142,517', '199,638', '195,728', '108,330', '396,071', '695,224', '42,015', '152,661', '65,234', '249,950', '77,453', '160,180', '179,887', '673,115', '1,511,929', '414,992', '2,194,397', '2,176,865', '1,184,882', '178,688', '76,291', '1,724,518', '1,925,684', '1,378,968', '293,695', '656,442', '1,092,063', '799,696', '835,496', '1,580,250', '994,453', '1,191,182', '383,958', '595,613', '34,091', '92,492', '115,125', '1,572,354', '1,267,310', '715,623', '410,199', '479,811', '1,693,344', '535,065', '555,756', '330,308', '1,059,089', '1,271,569', '398,553']

['-', '$0.35M', '-', '-', '-', '$135.37M', '$192.73M', '-', '-', '-', '-', '$0.43M', '$433.03M', '-', '$858.37M', '$134.97M', '$92.05M', '$28.34M', '$534.86M', '$120.54M', '$18.10M', '-', '$107.93M', '$292.58M', '$188.02M', '$85.08M', '$32.87M', '$116.90M', '$167.77M', '$44.02M', '$171.48M', '$659.33M', '$130.74M', '$54.51M', '$317.58M', '$13.12M', '$2.01M', '$0.01M', '$315.54M', '$187.71M', '$228.43M', '$216.43M', '$107.51M', '$330.25M', '$315.06M', '$167.45M', '$45.88M', '$57.30M', '$162.81M', '$107.83M']

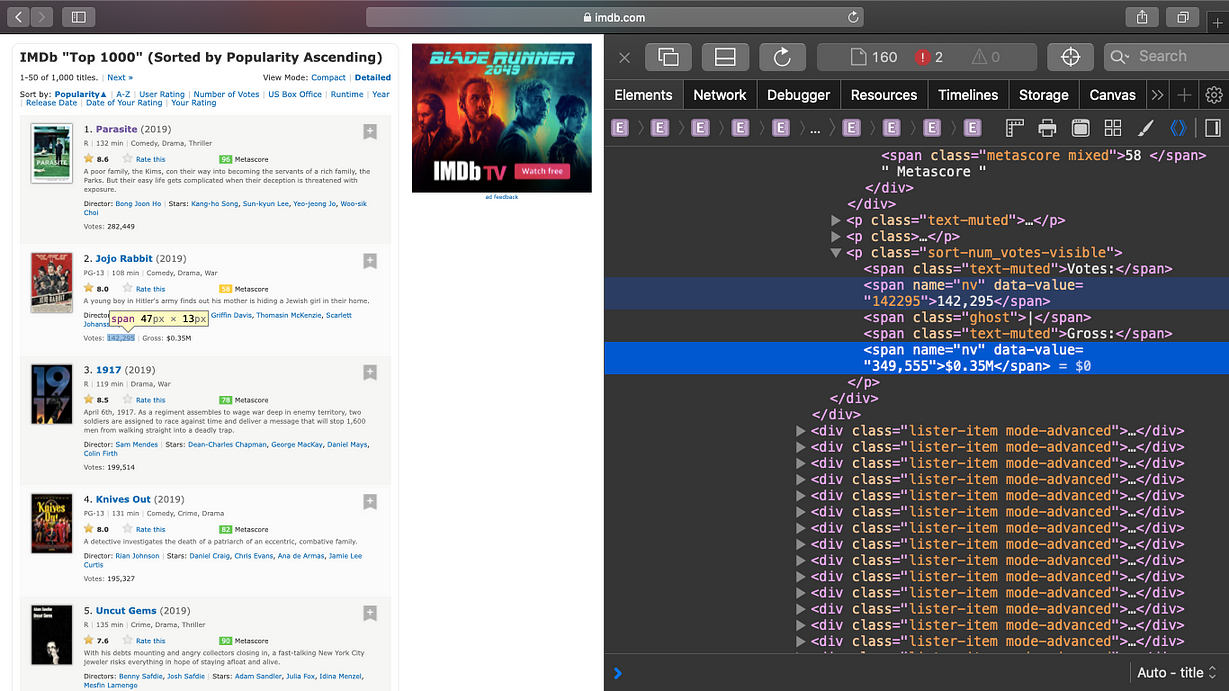

So far so good, but we aren’t quite there yet. We need to clean up our data a bit. Looks like we have some unwanted elements in our data: dollar signs, Ms, mins, commas, parentheses, and extra white space in the Metascores.

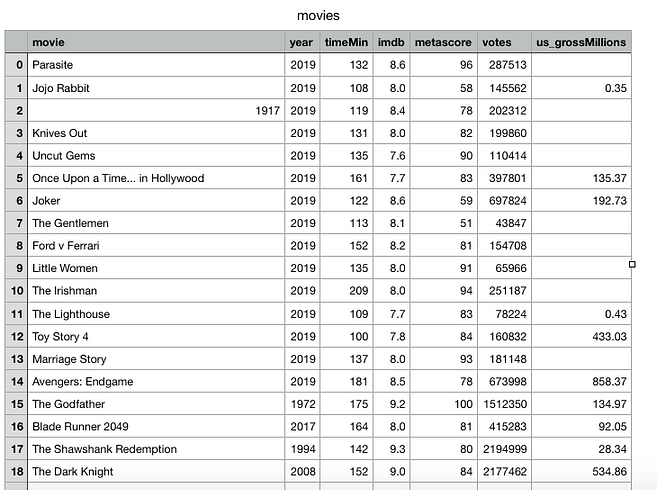

Building a DataFrame With pandas

The next order of business is to build a DataFrame with pandas to store the data we have nicely in a table to really understand what’s going on.

Here’s how we do it:

movies = pd.DataFrame({

'movie': titles,

'year': years,

'timeMin': time,

'imdb': imdb_ratings,

'metascore': metascores,

'votes': votes,

'us_grossMillions': us_gross,

})

Breaking our dataframe down:

- `movies` is what we’ll name our `DataFrame`

- `pd.DataFrame` is how we initialize the creation of a `DataFrame` with pandas

- The keys on the left are the column names

- The values on the right are our lists of data we’ve scraped
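As a quick sketch of how the dictionary maps onto a table, here’s a two-column version built from the first two movies in our printed lists. The variable names `sample_titles` and `sample_years` are placeholders for this example only.

```python
import pandas as pd

# The first two entries from the scraped lists printed earlier.
sample_titles = ["Parasite", "Jojo Rabbit"]
sample_years = ["(2019)", "(2019)"]

# Each key becomes a column name and each list becomes that column's values.
sample_movies = pd.DataFrame({
    "movie": sample_titles,
    "year": sample_years,
})

print(sample_movies.shape)
print(list(sample_movies.columns))
```

Every list has to be the same length, otherwise pandas raises a `ValueError`. That’s one more reason to append a placeholder such as a dash whenever a value is missing.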

View Our DataFrame

We can see how it all looks by simply using the print function on our DataFrame, which we called movies, at the bottom of our program:

print(movies)

Our pandas DataFrame looks like this

Data Quality

Before embarking on projects like this, you must know what your data-quality criteria are, meaning what rules or constraints your data should follow. Here are some examples:

- Data-type constraints: Values in your columns must be a particular data type: numeric, boolean, date, etc.

- Mandatory constraints: Certain columns can’t be empty

- Regular expression patterns: Text fields that have to be in a certain pattern, like phone numbers

What Is Data Cleaning?

Data cleaning is the process of detecting and correcting or removing corrupt or inaccurate records from your dataset.

When doing data analysis, it’s also important to make sure we’re using the correct data types.

Checking Data Types

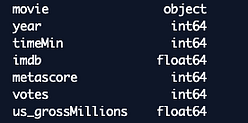

We can check what our data types look like by running this print function at the bottom of our program:

print(movies.dtypes)

Our data type results

Let’s analyze this: Our movie data type is an object, which is how pandas stores strings, and that’s correct considering they’re titles of movies. Our IMDb score is also correct because we have floating-point numbers in this column (decimal numbers).

But our year, timeMin, metascore, and votes show they’re objects when they should be integer data types, and our us_grossMillions is an object instead of a float data type. How did this happen?

Initially, when we were telling our scraper to grab these values from each HTML container, we were telling it to grab specific values from a string. A string represents text rather than numbers — it’s comprised of a set of characters that can also contain numbers.

For example, the word cheese and the phrase I ate 10 blocks of cheese are both strings. If we were to get rid of everything except the 10 from the I ate 10 blocks of cheese string, it’s still a string — but now it’s one that only says 10.
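The cheese example can be checked in a few lines of plain Python. This sketch uses the standard `re` module to pull out the digits and shows that the result is still a string until we convert it.

```python
import re

sentence = "I ate 10 blocks of cheese"

# Grab the first run of digits; the result is still text, not a number.
digits = re.search(r"\d+", sentence).group()
print(type(digits).__name__, repr(digits))

# int() is what actually turns the string into a number we can do math with.
number = int(digits)
print(number + 1)
```

Trying `digits + 1` before the conversion would raise a `TypeError`, which is the same kind of problem we’d run into when analyzing uncleaned columns.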

Data Cleaning With pandas

Now that we have a clear idea of what our data looks like right now, it’s time to start cleaning it up.

This can be a tedious task, but it’s one that’s very important.

Cleaning year data

To remove the parentheses from our year data and to convert the object into an integer data type, we’ll do this:

movies['year'] = movies['year'].str.extract(r'(\d+)').astype(int)

Breaking cleaning year data down:

- `movies['year']` tells pandas to go to the column `year` in our `DataFrame`

- `.str.extract('(\d+)')` pulls out the first run of digits in each string; `\d+` means one or more digits, and the parentheses mark the part to keep

- The `.astype(int)` method converts the result to an integer
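Here’s a small sketch of that extraction on three year strings taken from our scraped list. It passes `expand=False`, which is a choice made for this example so the result comes back as a single Series instead of a one-column DataFrame.

```python
import pandas as pd

# Three values from the scraped years list, including the odd "(I) (2019)" one.
sample_years = pd.Series(["(2019)", "(I) (2019)", "(1974)"])

# Keep the first run of digits from each string, then convert to integers.
cleaned_years = sample_years.str.extract(r"(\d+)", expand=False).astype(int)

print(cleaned_years.tolist())
print(cleaned_years.dtype)
```

The `r` in front of the pattern makes it a raw string, so Python leaves the backslash in `\d` alone.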

Now, if we add print(movies['year']) to the bottom of our program to see what our year data looks like, this is the result:

You should see your list of years without any parentheses. And the data type showing is now an integer. Our year data is officially cleaned.

Cleaning time data

We’ll do exactly what we did cleaning our year data above to our time data by grabbing only the digits and converting our data type to an integer.

movies['timeMin'] = movies['timeMin'].str.extract(r'(\d+)').astype(int)

Cleaning Metascore data

The only cleaning we need to do here is converting our object data type into an integer:

movies['metascore'] = movies['metascore'].astype(int)

Cleaning votes

With votes, we need to remove the commas and convert it into an integer data type:

movies['votes'] = movies['votes'].str.replace(',', '').astype(int)

Breaking cleaning votes down:

- `movies['votes']` is our votes data in our movies `DataFrame`. We’re assigning our new cleaned up data to our votes column.

- `.str.replace(',', '')` grabs the string and uses the `replace` method to replace the commas with an empty string (nothing)

- The `.astype(int)` method converts the result into an integer
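A quick sketch with two vote counts from our scraped list shows the before and after. The `regex=False` argument is added here only to make it explicit that the comma is treated as a plain character.

```python
import pandas as pd

# Two values from the scraped votes list.
sample_votes = pd.Series(["282,699", "1,511,929"])

# Remove every comma, then convert the remaining digits to integers.
cleaned_votes = sample_votes.str.replace(",", "", regex=False).astype(int)

print(cleaned_votes.tolist())
```

Once the column holds integers, operations like sorting or summing behave numerically instead of alphabetically.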

Cleaning gross data

The gross data involves a few hurdles to jump. What we need to do is remove the dollar sign and the Ms from the data and convert it into a floating-point number. Here’s how to do it:

movies['us_grossMillions'] = movies['us_grossMillions'].map(lambda x: x.lstrip('$').rstrip('M'))

movies['us_grossMillions'] = pd.to_numeric(movies['us_grossMillions'], errors='coerce')

Breaking cleaning gross down:

Top cleaning code:

- `movies['us_grossMillions']` is our gross data in our movies `DataFrame`. We’ll be assigning our new cleaned up data to our `us_grossMillions` column.

- `movies['us_grossMillions']` tells pandas to go to the column `us_grossMillions` in our `DataFrame`

- The `.map()` method calls the specified function for each item in the column

- `lambda x: x` is an anonymous function in Python (one without a name). Normal functions are defined using the `def` keyword.

- `.lstrip('$').rstrip('M')` is what our function does to each value. It strips the `$` from the left side and strips the `M` from the right side.

Bottom conversion code:

- movies['us_grossMillions'] is stripped of the elements we don’t need, and now we’ll assign the converted data to it to finish it up

- pd.to_numeric is a function we can use to change this column to a float. The reason we use this is because we have a lot of dashes in this column, and we can’t just convert it to a float using .astype(float), since this would raise an error.

- errors='coerce' will transform the nonnumeric values, our dashes, into NaN (not-a-number) values, because we have dashes in place of the data that’s missing

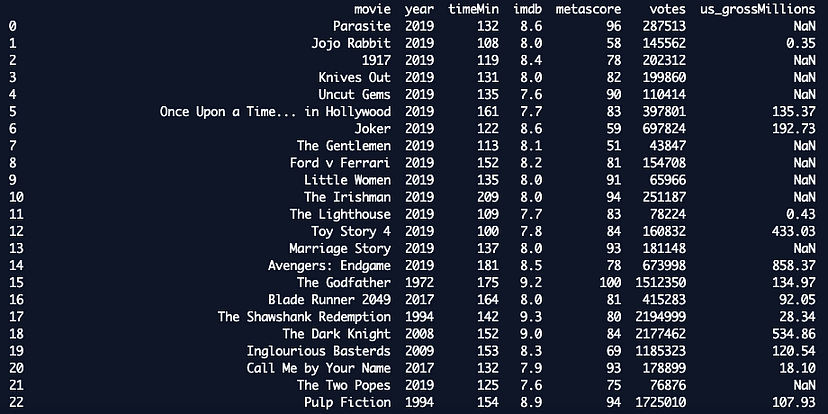
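The two steps can be tried on a made-up sample like this. The gross figures and the `gross_sample` name are hypothetical, chosen only to include one dash:

```python
import pandas as pd

# Hypothetical gross values, with '-' standing in for missing data
gross_sample = pd.Series(['$28.34M', '$134.97M', '-'])

# Step 1: strip the leading '$' and trailing 'M' from each string
stripped = gross_sample.map(lambda x: x.lstrip('$').rstrip('M'))

# Step 2: convert to float; the '-' cannot be parsed, so it becomes NaN
converted = pd.to_numeric(stripped, errors='coerce')

print(stripped.tolist())
print(converted)
```

After step 1 the dash is still a string; it only becomes NaN in step 2, which is why the column ends up as a float type.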

Review the Cleaned and Converted Code

Let’s see how we did. Run the print function to see our data and the data types we have:

print(movies)

print(movies.dtypes)

The result of our cleaned data

The result of our data types

Looks good!
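If you’d like one more check, counting missing values per column is a quick way to confirm where the NaN values ended up. This is a minimal sketch on a hypothetical stand-in DataFrame (`movies_sample` and its values are made up); on your own data you would call the same method on movies:

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for the cleaned movies DataFrame
movies_sample = pd.DataFrame({
    'movie': ['A', 'B', 'C'],
    'year': [1994, 2008, 2019],
    'us_grossMillions': [28.34, np.nan, 134.97],
})

# isnull() marks missing cells as True; sum() counts them per column
missing_per_column = movies_sample.isnull().sum()

print(missing_per_column)
```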

Final Finished Code

Here’s the final code of your single page web scraper:

import requests

from requests import get

from bs4 import BeautifulSoup

import pandas as pd

import numpy as np

url = "https://www.imdb.com/search/title/?groups=top_1000&ref_=adv_prv"

headers = {"Accept-Language": "en-US, en;q=0.5"}

results = requests.get(url, headers=headers)

soup = BeautifulSoup(results.text, "html.parser")

titles = []

years = []

time = []

imdb_ratings = []

metascores = []

votes = []

us_gross = []

movie_div = soup.find_all('div', class_='lister-item mode-advanced')

for container in movie_div:

    #name

    name = container.h3.a.text

    titles.append(name)

    #year

    year = container.h3.find('span', class_='lister-item-year').text

    years.append(year)

    #runtime

    runtime = container.p.find('span', class_='runtime').text if container.p.find('span', class_='runtime') else '-'

    time.append(runtime)

    #IMDB rating

    imdb = float(container.strong.text)

    imdb_ratings.append(imdb)

    #metascore

    m_score = container.find('span', class_='metascore').text if container.find('span', class_='metascore') else '-'

    metascores.append(m_score)

    #There are two NV containers, grab both of them as they hold both the votes and the grosses

    nv = container.find_all('span', attrs={'name': 'nv'})

    #filter nv for votes

    vote = nv[0].text

    votes.append(vote)

    #filter nv for gross

    grosses = nv[1].text if len(nv) > 1 else '-'

    us_gross.append(grosses)

#building our Pandas dataframe

movies = pd.DataFrame({

'movie': titles,

'year': years,

'timeMin': time,

'imdb': imdb_ratings,

'metascore': metascores,

'votes': votes,

'us_grossMillions': us_gross,

})

#cleaning data with Pandas

movies['year'] = movies['year'].str.extract(r'(\d+)').astype(int)

movies['timeMin'] = movies['timeMin'].str.extract(r'(\d+)').astype(int)

movies['metascore'] = movies['metascore'].astype(int)

movies['votes'] = movies['votes'].str.replace(',', '').astype(int)

movies['us_grossMillions'] = movies['us_grossMillions'].map(lambda x: x.lstrip('$').rstrip('M'))

movies['us_grossMillions'] = pd.to_numeric(movies['us_grossMillions'], errors='coerce')

Saving Your Data to a CSV

What’s the use of our scraped data if we can’t save it for any future projects or analysis? Below is the code you can add to the bottom of your program to save your data to a CSV file:

movies.to_csv('movies.csv')

Breaking the CSV file down:

movies.to_csv('movies.csv')

to_csv writes the DataFrame to the file name you pass it, creating the file if it doesn’t already exist and overwriting it if it does. Make sure the name has the .csv extension. I named mine movies.csv, as you can see above, but feel free to name it whatever you like. Just make sure to change the code above to match it.

If you’re in Repl, you can create an empty CSV file by hovering near Files and clicking the “Add file” option. Name it, and save it with a .csv extension. Then, add the code to the end of your program:

movies.to_csv('the_name_of_your_csv_here.csv')
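By default, to_csv also writes the DataFrame’s index as an unnamed first column. If you’d rather leave it out, passing index=False is one option. Here is a minimal sketch that writes a made-up DataFrame to a temporary folder and reads it back (`movies_sample` and the file name are hypothetical):

```python
import os
import tempfile

import pandas as pd

# Hypothetical stand-in for the cleaned movies DataFrame
movies_sample = pd.DataFrame({'movie': ['A', 'B'], 'year': [1994, 2008]})

# Write to a temporary folder so the sketch doesn't clutter your project
path = os.path.join(tempfile.mkdtemp(), 'movies_sample.csv')

# index=False leaves the row index out of the file
movies_sample.to_csv(path, index=False)

# Read the file back to confirm what was saved
reloaded = pd.read_csv(path)
print(reloaded.columns.tolist())
```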

All your data should populate over into your CSV. Once you download it onto your computer/open it up, your file will look like this:

Conclusion

We’ve come a long way from requesting the HTML content of our web page to cleaning our entire DataFrame. You should now know how to scrape web pages with the same HTML and URL structure I’ve shown you above. Here’s a summary of what we’ve accomplished:

- Inspected our HTML for the data we need

- Wrote code to extract the data

- Put our code in a loop to grab all the data from each movie

- Built a DataFrame with pandas

- Cleaned our data in pandas

- Handled type conversion to make our data consistent

- Saved our scraped data to a CSV

Next steps

I hope you had fun making this!

If you’d like to build on what you’ve learned, here are a few ideas to try out:

- Grab the movie data for all 1,000 movies on that list

- Scrape other data about each movie — e.g., genre, director, starring, or the summary of the movie

- Find a different website to scrape that interests you

In my next piece, I’ll explain how to loop through all of the pages of this IMDb list to grab all of the 1,000 movies, which will involve a few alterations to the final code we have here.

Happy coding!
