How to Properly Configure Laravel for CI/CD

A quick note before we start: if you're afraid of stepping into an unfamiliar world, remember this. You never know how easily you might adapt to it, or how much joy you might get out of it.

For a long, long time, the thing I struggled with most was setting up CI/CD properly for Laravel, and I'm not joking. Newcomers face so many barriers on the way to the fruits of automation that they end up googling every single problem until they hit a closed GitHub issue whose last comment is "nvm, fixed it".

I am going to explain CI/CD, show you how to set it up properly for your own Laravel projects and how to write good unit tests, and, as a bonus, how to configure the pipeline to run browser tests with Laravel Dusk!

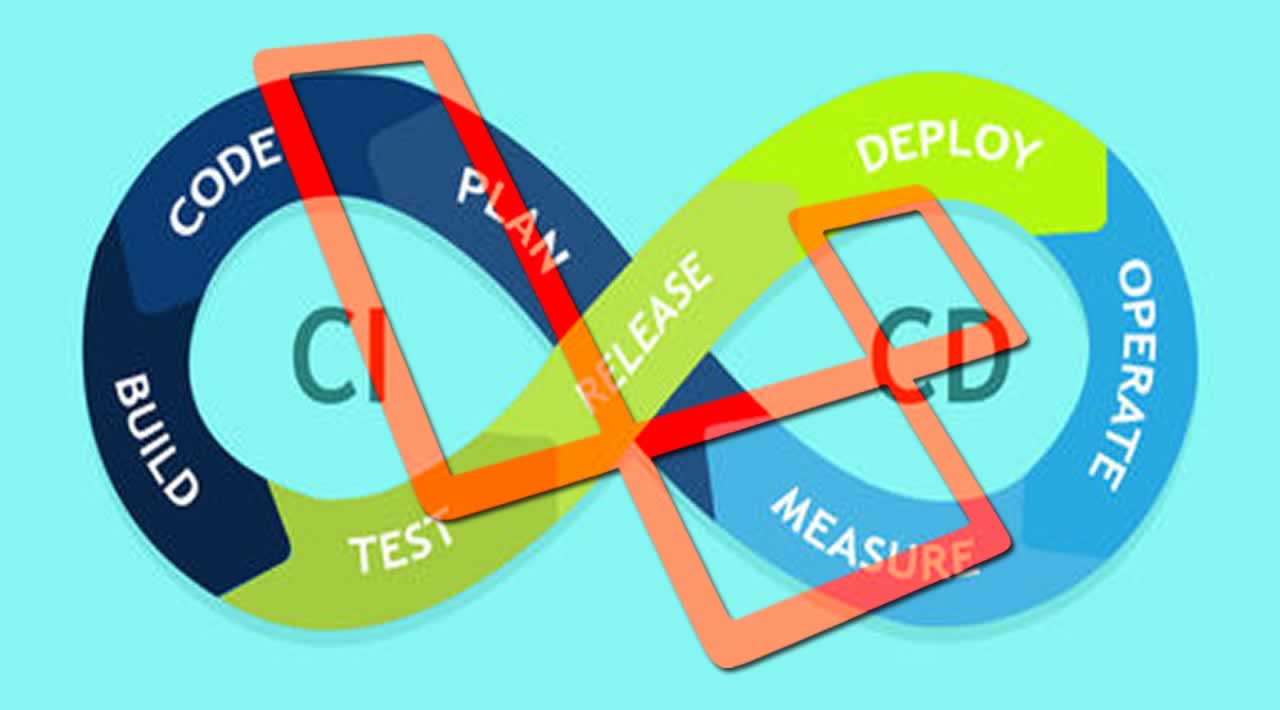

CI/CD the short way

In the early days of programming, people passed zip archives of source code from developer to developer, and it was a total mess until version control systems like Git fixed source control. The same thing happened with testing. At first, people tested their code manually with no real idea whether something was broken (and trust me: if you don't have tests, your app is probably broken somewhere). Automated tests came along to ease that work. You tell a virtual machine in the cloud (or, in newer setups, a Docker container, often scheduled on a Kubernetes cluster) to run a pipeline, which is a set of steps. The pipeline sets up PHP, Ruby or whatever you need, then runs a precise list of commands, such as starting a MySQL instance and seeding it with fake data for testing. The tests are marked as passed if none of those commands returns a non-zero exit code (more on this shortly).

CI/CD stands for Continuous Integration and Continuous Deployment. You write code, the code lands on a VM in the cloud, and that precise list of commands tells you whether your code is good or bad. If it is good, the second step (CD) kicks in and ships the code to your server (deploys it). With zero-downtime deployments your users won't even notice; without them, they may notice a short outage.
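To make the idea of a pipeline concrete, here is a minimal sketch of what a CI runner does with a job's list of commands. It runs each step in order and stops at the first one that returns a non-zero exit code. `run_pipeline` is a hypothetical helper written for this illustration. Real runners such as GitLab Runner or CircleCI also handle logging, artifacts, caching and much more.

```shell
#!/usr/bin/env bash
# Minimal sketch of a CI runner's core loop.
# run_pipeline is a hypothetical helper, not part of any CI product.

run_pipeline() {
  local step status
  for step in "$@"; do
    echo "\$ $step"
    # Each step runs in its own bash process, like one line of a job's script.
    bash -c "$step"
    status=$?
    if [ "$status" -ne 0 ]; then
      echo "Step failed with exit code $status: $step" >&2
      return "$status"   # the remaining steps never run
    fi
  done
  echo "Pipeline passed"
}

# Usage: the second run stops at "false" and never reaches its last step.
run_pipeline "echo installing dependencies" "echo running tests"
run_pipeline "true" "false" "echo never printed" || echo "Build failed"
```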

In the shell, a command that returns a zero exit code is treated as successful. Anything else counts as a failure. It will usually fail the build, or, if that step is allowed to fail, mark the build as risky. For example, this command returns a zero exit code:

```shell
$ ls -al
```

This one returns a non-zero exit code, because my-inexistent-cli is not an installed command (bash reports status 127, "command not found"):

```shell
$ my-inexistent-cli
```
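You can watch these exit codes yourself. The sketch below runs a command, discards its output and prints only its exit status, which is the same number a CI runner checks after every step. `exit_code_of` is a hypothetical helper name used only for this example.

```shell
#!/usr/bin/env bash
# exit_code_of is a hypothetical helper: run a command, hide its output,
# and print only its exit status (the value of $?).
exit_code_of() {
  "$@" >/dev/null 2>&1
  echo "$?"
}

exit_code_of ls -al              # 0: the command succeeded
exit_code_of my-inexistent-cli   # 127: bash's "command not found" status
exit_code_of false               # 1: a command that ran but reported failure
```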

Setting up CI/CD in Laravel

Laravel has a clean, built-in way to write tests with PHPUnit or Dusk, but there is little guidance on setting up a CI configuration for them. I'll show you how to set up CI/CD properly on two major providers: GitLab CI and CircleCI. I gave up on Travis CI. It's good for open source projects such as Laravel packages, but it doesn't seem to suit the second part, Continuous Deployment, very well.

GitLab CI

If you plan to use this for private projects, the free tier's 2,000 build minutes per month (at the time of writing) are enough for a team of about two people per project. That works out to around 400 five-minute builds each month. In my experience it is slow, especially when running the script that installs Google Chrome for Dusk tests. I will give you two versions, one with PHPUnit only and one with both PHPUnit and Dusk, so you can get an idea of each.

Some people will complain: "ehhhh, you could have used a PHP image that comes with Apache." Seriously, though: Laravel has a built-in way of starting a web server (php artisan serve), so there's no need to add extra seconds to the build. Because that server is built in, Dusk works with it just fine.

Put this in a file named .gitlab-ci.yml at the root of your project. The next time you push to a branch, open CI/CD in your repository's left menu, where a new pipeline should have started.

```yaml
stages:
  - test

# Cache vendor/ and node_modules/ per branch, so composer update and
# npm install have less to download on later builds
cache:
  key: ${CI_COMMIT_REF_SLUG}
  paths:
    - node_modules/
    - vendor/

test:
  image: edbizarro/gitlab-ci-pipeline-php:7.2
  stage: test
  services:
    - mariadb:10.3.11
  variables:
    MYSQL_DATABASE: "test"
    MYSQL_ROOT_PASSWORD: "secret"
  script:
    - composer update --no-interaction --no-progress --prefer-dist --optimize-autoloader --ignore-platform-reqs
    - cp .env.testing .env
    - php artisan key:generate
    - php artisan config:cache
    - php artisan route:cache
    - npm install
    - npm run prod
    - php artisan migrate --seed
    - php artisan storage:link
    - php artisan serve &
    - vendor/bin/phpunit
```

GitLab CI relies on containers, so if you want MySQL instead of MariaDB, change the service to mysql:[your_version], for example mysql:5.7. Also, if you don't compile assets with npm, you can drop the npm install and npm run prod steps.

I use Ed Bizarro's GitLab pipeline images for testing. Feel free to change the PHP version to whatever suits your project.

The pipeline copies .env.testing to .env, so you need a .env.testing like this one:

```dotenv
APP_NAME="Laravel"
APP_ENV=testing
APP_KEY=
APP_DEBUG=true
APP_URL=http://127.0.0.1:8000

LOG_CHANNEL=null

DB_CONNECTION=mysql
# Change to "mysql" if you run the mysql service
DB_HOST=mariadb
DB_PORT=3306
DB_DATABASE=test
DB_USERNAME=root
DB_PASSWORD=secret

BROADCAST_DRIVER=log
CACHE_DRIVER=file
SESSION_DRIVER=file
SESSION_LIFETIME=120
QUEUE_DRIVER=sync

REDIS_HOST=127.0.0.1
REDIS_PASSWORD=null
REDIS_PORT=6379

MAIL_DRIVER=smtp
MAIL_HOST=localhost
MAIL_PORT=1025
MAIL_USERNAME=null
MAIL_PASSWORD=null
MAIL_ENCRYPTION=null

PUSHER_APP_ID=
PUSHER_APP_KEY=
PUSHER_APP_SECRET=
PUSHER_APP_CLUSTER=mt1

MIX_PUSHER_APP_KEY="${PUSHER_APP_KEY}"
MIX_PUSHER_APP_CLUSTER="${PUSHER_APP_CLUSTER}"
```
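If you swap the mariadb service for mysql, DB_HOST has to change too, because the service's name is its hostname. Instead of keeping two copies of the file, you could patch the value in the pipeline before copying it to .env. The sketch below assumes a bash shell with sed available. `set_env_value` is a hypothetical helper, and values containing `|`, `&` or backslashes would need escaping first.

```shell
#!/usr/bin/env bash
# set_env_value is a hypothetical helper: set KEY=value in a .env-style file,
# replacing an existing KEY= line or appending one if the key is missing.
# Assumption: the value contains no "|", "&" or "\" (sed would interpret them).
set_env_value() {
  local file=$1 key=$2 value=$3
  if grep -q "^${key}=" "$file"; then
    # "|" is the sed delimiter so values such as URLs may contain "/".
    # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
    sed -i.bak "s|^${key}=.*|${key}=${value}|" "$file" && rm -f "$file.bak"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}

# Example script step when the pipeline runs the mysql:5.7 service instead:
# set_env_value .env.testing DB_HOST mysql
```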

Dusk tests need a few more settings, mainly installing Google Chrome before the script runs:

```yaml
stages:
  - test

cache:
  key: ${CI_COMMIT_REF_SLUG}
  paths:
    - node_modules/
    - vendor/

test:
  image: edbizarro/gitlab-ci-pipeline-php:7.2
  stage: test
  services:
    - mariadb:10.3.11
  variables:
    MYSQL_DATABASE: "test"
    MYSQL_ROOT_PASSWORD: "secret"
  # Install Google Chrome
  before_script:
    - sudo apt-get update -y
    - sudo apt-get install unzip xvfb libxi6 libgconf-2-4 libnss3 wget -yqq
    - wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | sudo apt-key add -
    - sudo sh -c 'echo "deb http://dl.google.com/linux/chrome/deb/ stable main" >> /etc/apt/sources.list.d/google.list'
    - sudo apt-get update -yqq
    - sudo apt-get install -y google-chrome-stable
  script:
    - composer update --no-interaction --no-progress --prefer-dist --optimize-autoloader --ignore-platform-reqs
    - cp .env.testing .env
    - php artisan key:generate
    - php artisan config:cache
    - php artisan route:cache
    - npm install
    - npm run prod
    - php artisan migrate --seed
    - php artisan storage:link
    - php artisan serve &
    - ./vendor/laravel/dusk/bin/chromedriver-linux --port=9515 &
    - sleep 5
    - vendor/bin/phpunit
    - php artisan dusk
```
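The `sleep 5` step gives php artisan serve and ChromeDriver a fixed head start. That can be too short on a busy runner, and it wastes time on a fast one. One alternative is to poll until each service answers. The helper below is a hypothetical sketch. The commented usage assumes three things: curl is installed in the image, ChromeDriver answers on its WebDriver /status endpoint, and the app is served on port 8000 as in APP_URL.

```shell
#!/usr/bin/env bash
# wait_until is a hypothetical helper: retry a command once per second
# until it succeeds, giving up after the given number of attempts.
wait_until() {
  local attempts=$1 i
  shift
  for ((i = 1; i <= attempts; i++)); do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    sleep 1
  done
  echo "Gave up after $attempts attempts: $*" >&2
  return 1
}

# Possible replacement for "sleep 5" in the script section (assumes curl):
# wait_until 30 curl -sf http://127.0.0.1:9515/status
# wait_until 30 curl -s -o /dev/null http://127.0.0.1:8000
```

In a real pipeline you might commit this as a small script in the repository and call it from the script section. That keeps the YAML list to one command per line.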

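Dusk also needs its own environment file, .env.dusk.testing, whose suffix matches APP_ENV in your .env. While its tests run, Dusk backs up your .env and swaps this file in, then restores the original afterwards: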

```dotenv
APP_NAME="Laravel"
APP_ENV=testing
APP_KEY=
APP_DEBUG=true
APP_URL=http://127.0.0.1:8000

LOG_CHANNEL=null

DB_CONNECTION=mysql
DB_HOST=mariadb
DB_PORT=3306
DB_DATABASE=test
DB_USERNAME=root
DB_PASSWORD=secret

BROADCAST_DRIVER=log
CACHE_DRIVER=file
SESSION_DRIVER=file
SESSION_LIFETIME=120
QUEUE_DRIVER=sync

REDIS_HOST=127.0.0.1
REDIS_PASSWORD=null
REDIS_PORT=6379

MAIL_DRIVER=smtp
MAIL_HOST=localhost
MAIL_PORT=1025
MAIL_USERNAME=null
MAIL_PASSWORD=null
MAIL_ENCRYPTION=null

PUSHER_APP_ID=
PUSHER_APP_KEY=
PUSHER_APP_SECRET=
PUSHER_APP_CLUSTER=mt1

MIX_PUSHER_APP_KEY="${PUSHER_APP_KEY}"
MIX_PUSHER_APP_CLUSTER="${PUSHER_APP_CLUSTER}"
```
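If Dusk seems to ignore this file, check which file it is actually looking for. Dusk picks .env.dusk.{environment}, where the environment comes from APP_ENV. If that file doesn't exist, it falls back to a plain .env.dusk. The sketch below reproduces only the naming rule, reading APP_ENV from a .env file. `dusk_env_file` is a hypothetical helper, and it ignores any APP_ENV set in the process environment.

```shell
#!/usr/bin/env bash
# dusk_env_file is a hypothetical helper that mirrors the naming rule:
# .env.dusk.<APP_ENV>, where a missing APP_ENV means "production"
# (the default in Laravel's config/app.php).
dusk_env_file() {
  local env_file=${1:-.env} app_env
  # Take the last APP_ENV= line, keep everything after "=", drop quotes.
  app_env=$(grep -E '^APP_ENV=' "$env_file" | tail -n 1 | cut -d '=' -f 2- | tr -d "\"'")
  echo ".env.dusk.${app_env:-production}"
}

# After "cp .env.testing .env", this prints .env.dusk.testing
# dusk_env_file .env
```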

CircleCI

CircleCI's free plan offered 1,000 build minutes per month when I wrote this. In my experience it is noticeably faster than GitLab CI. The same basic build dropped from about 3 minutes to about 2, which I put down to the faster CPUs they run on.

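For CircleCI I reuse the same .env files with a different pipeline configuration, plus one change: set DB_HOST=127.0.0.1. CircleCI runs service containers such as circleci/mysql on the same network as the primary container. The configuration lives in a folder called .circleci, in a file named config.yml: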

```yaml
version: 2
jobs:
  test:
    docker:
      - image: edbizarro/gitlab-ci-pipeline-php:7.2
      - image: circleci/mysql:5.7
        environment:
          MYSQL_DATABASE: "test"
          MYSQL_ROOT_PASSWORD: "secret"
    working_directory: /var/www/html
    steps:
      - checkout
      # Install Google Chrome
      - run: sudo apt-get update -y
      - run: sudo apt-get install unzip xvfb libxi6 libgconf-2-4 libnss3 wget -yqq
      - run: wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | sudo apt-key add -
      - run: sudo sh -c 'echo "deb http://dl.google.com/linux/chrome/deb/ stable main" >> /etc/apt/sources.list.d/google.list'
      - run: sudo apt-get update -yqq
      - run: sudo apt-get install -y google-chrome-stable
      - restore_cache:
          keys:
            - vendor-{{ checksum "composer.json" }}
      - run: composer update --no-interaction --no-progress --prefer-dist --optimize-autoloader --ignore-platform-reqs
      - save_cache:
          paths:
            - ./vendor
          key: vendor-{{ checksum "composer.json" }}
      - run: cp .env.testing .env
      - run: php artisan key:generate
      - run: php artisan config:cache
      - run: php artisan route:cache
      - restore_cache:
          keys:
            - node-{{ checksum "package.json" }}
      - run: npm update
      - save_cache:
          paths:
            - ./node_modules
          key: node-{{ checksum "package.json" }}
      - run: npm run prod
      - run: php artisan migrate --seed
      - run: php artisan storage:link
      - run:
          background: true
          command: php artisan serve
      - run:
          background: true
          command: ./vendor/laravel/dusk/bin/chromedriver-linux --port=9515
      - run: ./vendor/bin/phpunit
      - run: php artisan dusk
workflows:
  version: 2
  deploy:
    jobs:
      - test
```

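This configuration restores the cached vendor and node_modules folders before running composer update and npm update, then saves them back to the cache for later builds. Each cache key includes a checksum of composer.json or package.json, so a cache is reused until that file changes.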
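The {{ checksum "composer.json" }} template is what keeps these caches honest. The key is derived from the file's contents, so it stays the same until you change your dependencies, and then a fresh cache is built. The sketch below reproduces the idea with SHA-256. `cache_key` is a hypothetical helper, and CircleCI computes its own checksum internally, so the exact strings will differ.

```shell
#!/usr/bin/env bash
# cache_key is a hypothetical helper: build "<prefix>-<sha256 of file>",
# so the key changes only when the file's contents change.
cache_key() {
  local prefix=$1 file=$2 sum
  if command -v sha256sum >/dev/null 2>&1; then
    sum=$(sha256sum "$file" | cut -d ' ' -f 1)       # GNU coreutils
  else
    sum=$(shasum -a 256 "$file" | cut -d ' ' -f 1)   # macOS fallback
  fi
  echo "${prefix}-${sum}"
}

# cache_key vendor composer.json   -> vendor-<64 hex characters>
```

One option is to key on composer.lock and package-lock.json instead. That would also catch changes in resolved versions, and it pairs naturally with composer install and npm ci, which install exactly what the lock files pin.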

Service containers

As I said at the beginning, you can build a CI/CD pipeline in small steps, with just an env file and a YAML file. You declare commands that run either in the background or one after another. The services those commands need are declared separately, as service containers.

Service containers are declared in your config and let you set up supporting services such as Redis, MySQL or MongoDB. They are all Docker containers, so you can find images for your services on Docker Hub. For example, you can pick a MongoDB image and use it in your pipeline:

```yaml
version: 2
jobs:
  test:
    docker:
      - image: edbizarro/gitlab-ci-pipeline-php:7.2
      - image: mongo:4.0.3
        environment:
          MONGO_INITDB_ROOT_USERNAME: root
          MONGO_INITDB_ROOT_PASSWORD: example
      - image: circleci/mysql:5.7
        environment:
          MYSQL_DATABASE: "test"
          MYSQL_ROOT_PASSWORD: "secret"
    working_directory: /var/www/html
    steps:
      - checkout
      …
```

This is how it would look in the GitLab CI configuration:

```yaml
…
phpunit:
  image: edbizarro/gitlab-ci-pipeline-php:7.2
  stage: test
  services:
    - mariadb:10.3.11
    - mongo:4.0.3
  variables:
    MYSQL_DATABASE: "test"
    MYSQL_ROOT_PASSWORD: "secret"
    MONGO_INITDB_ROOT_USERNAME: root
    MONGO_INITDB_ROOT_PASSWORD: example
…
```

Each service runs in its own container, and you configure it through environment variables. In GitLab CI, you reach a service through a hostname derived from its image name. The mariadb container is not reachable at 127.0.0.1 but at the hostname mariadb, so that is the value DB_HOST needs in your env file. This rule is GitLab-specific: on CircleCI, service containers share the primary container's network and are reachable at 127.0.0.1.
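GitLab derives those hostnames from the image name. It drops the tag after the colon. For namespaced images it also replaces "/" with "__" for the primary alias and with "-" for the secondary alias, so tutum/wordpress:latest answers on both tutum__wordpress and tutum-wordpress. The sketch below reproduces that rule for simple image names. `service_hosts` is a hypothetical helper, and images pulled from a registry with a port (host:5000/…) are not handled. If you prefer a fixed name, GitLab also lets you give a service an explicit alias.

```shell
#!/usr/bin/env bash
# service_hosts is a hypothetical helper that mirrors GitLab's default
# service aliasing rule for image names without a registry port.
service_hosts() {
  local image=${1%%:*}            # drop the tag: mariadb:10.3.11 -> mariadb
  local primary=${image//\//__}   # "/" -> "__"
  local secondary=${image//\//-}  # "/" -> "-"
  echo "$primary"
  if [ "$secondary" != "$primary" ]; then
    echo "$secondary"
  fi
}

service_hosts mariadb:10.3.11          # mariadb
service_hosts mongo:4.0.3              # mongo
service_hosts tutum/wordpress:latest   # tutum__wordpress, then tutum-wordpress
```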

Notice that I did not add a Redis container. In tests you usually want queued work to run synchronously (QUEUE_DRIVER=sync), so you can test a flow from one end to the other. Imagine dispatching a job that hasn't finished processing by the time your test asserts that a model was written to the database. When you only need to check that something was queued, use Laravel's mocking helpers, such as Queue::fake(), instead.

I hope this tutorial helps. If you liked it, please consider sharing it with others.

Originally published on medium.com

#laravel #php #docker #devops #testing