There have been some really interesting applications of style transfer. It aims to take the ‘style’ of one image and apply it to a second, ‘content’ image, so that the content is rendered in that style.

So far, though, it has rarely been applied to audio, so I explored the idea of applying neural style transfer to audio. To be frank, the results were less than stellar, but I’m going to keep working on this.

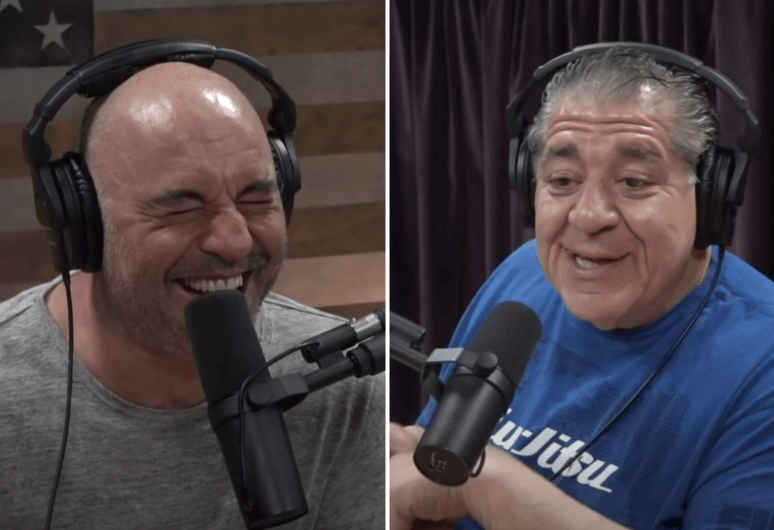

For this exercise, I’m going to use clips from the Joe Rogan podcast. I’m trying to make Joe Rogan, from The Joe Rogan Experience, sound like Joey Diaz, from The Church of What’s Happening Now. Joe Rogan already does a pretty good impression of Joey Diaz, but I’d like to improve his impression using deep learning.

First, I’m going to download the YouTube videos. There’s a neat trick mentioned on GitHub that lets you download only a small segment of a YouTube video (here, the first minute of each), which is handy because I don’t want to download the entire video. You’ll need youtube-dl and ffmpeg for this step.

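```python
import os
import shlex


# Download the first `minute_mark` minutes of a YouTube video's audio using youtube-dl + ffmpeg.
# Adapted from: https://github.com/ytdl-org/youtube-dl/issues/622#issuecomment-162337869
def download_from_url_ffmpeg(url, output, minute_mark=1):
    # Remove any previous download so ffmpeg doesn't stop to ask about overwriting it
    try:
        os.remove(output)
    except FileNotFoundError:
        pass

    # Earlier variant that copies both the video and audio streams:
    # cmd = 'ffmpeg -loglevel warning -ss 0 -i $(youtube-dl -f 22 --get-url https://www.youtube.com/watch?v=mMZriSvaVP8) -t 11200 -c:v copy -c:a copy react-spot.mp4'

    # youtube-dl resolves the direct URL of the best audio stream; ffmpeg reads it from
    # 0 s (-ss 0) for minute_mark * 60 seconds (-t) and infers the format from `output`.
    cmd = ('ffmpeg -loglevel warning -ss 0 -i "$(youtube-dl -f bestaudio --get-url '
           + shlex.quote(url) + ')" -t ' + str(minute_mark * 60) + ' ' + shlex.quote(output))
    os.system(cmd)

    return os.path.join(os.getcwd(), output)


# Content audio: Joe Rogan
url = 'https://www.youtube.com/watch?v=-xY_D8SMNtE'
content_audio_name = download_from_url_ffmpeg(url, 'jre.wav')

# Style audio: Joey Diaz
url = 'https://www.youtube.com/watch?v=-l88fMJcvWE'
style_audio_name = download_from_url_ffmpeg(url, 'joey_diaz.wav')
```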

Loss Functions

There are two types of loss for this task:

- Content loss. A lower value means the output audio sounds more like Joe Rogan.

- Style loss. A lower value means the output audio sounds more like Joey Diaz.

Ideally we want both content and style loss to be minimised.
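A common way to express this, borrowed from the original image style transfer formulation and assumed here since the weights aren’t specified, is to minimise a single weighted sum of the two losses:

$$
\mathcal{L}_{\text{total}} = \alpha\,\mathcal{L}_{\text{content}} + \beta\,\mathcal{L}_{\text{style}}
$$

Here $\alpha$ and $\beta$ are hypothetical weights. The two terms pull in different directions, so raising $\beta$ relative to $\alpha$ trades some of Joe Rogan’s content for more of Joey Diaz’s style.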

Content loss

The content loss function takes an input matrix and a content matrix, where the content matrix corresponds to Joe Rogan’s audio. It returns the weighted content distance between the two: the mean squared error between the input matrix and the content matrix, scaled by a weight. It is implemented as a torch module, and the distance itself can be calculated with nn.MSELoss.
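The content loss described above can be sketched as a small torch module, in the style of the PyTorch neural style transfer tutorial. This is a minimal sketch under assumptions, not necessarily the exact code used here: the class name `ContentLoss`, the `weight` parameter and the tensor shapes are illustrative, and the random tensors stand in for real audio matrices.

```python
import torch
import torch.nn as nn


class ContentLoss(nn.Module):
    """Records the weighted MSE between its input and a fixed content matrix.

    Sketch only: `weight` is a hypothetical scaling parameter, and the content
    matrix is assumed to be a tensor derived from Joe Rogan's audio.
    """

    def __init__(self, content, weight=1.0):
        super().__init__()
        # The content matrix is a fixed target, so detach it from the autograd graph
        self.target = content.detach()
        self.weight = weight
        self.criterion = nn.MSELoss()
        self.loss = torch.tensor(0.0)

    def forward(self, input):
        # Weighted content distance between the input matrix and the content matrix
        self.loss = self.weight * self.criterion(input, self.target)
        # Pass the input through unchanged so the module can sit inside a larger network
        return input


# Usage with random stand-in tensors (not real audio features)
content = torch.randn(1, 257, 100)
generated = torch.randn(1, 257, 100, requires_grad=True)
content_loss = ContentLoss(content, weight=1.0)
content_loss(generated)
content_loss.loss.backward()  # gradients flow back to `generated`
```

Returning `input` unchanged is what lets a module like this be inserted after a layer of a network: the forward pass is unaffected, and the loss is simply recorded for the optimiser to use.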
