By Sasha Sheng and Diogo Almeida

Disclaimer: Opinions belong to the authors of this blog post only and do not reflect the opinions of our employers. Bias in machine learning certainly is a larger structural issue that cannot be solved by purely technical means. This blog post only focuses on the narrow computer vision task of adapting PULSE to generate more diverse faces.

Motivation

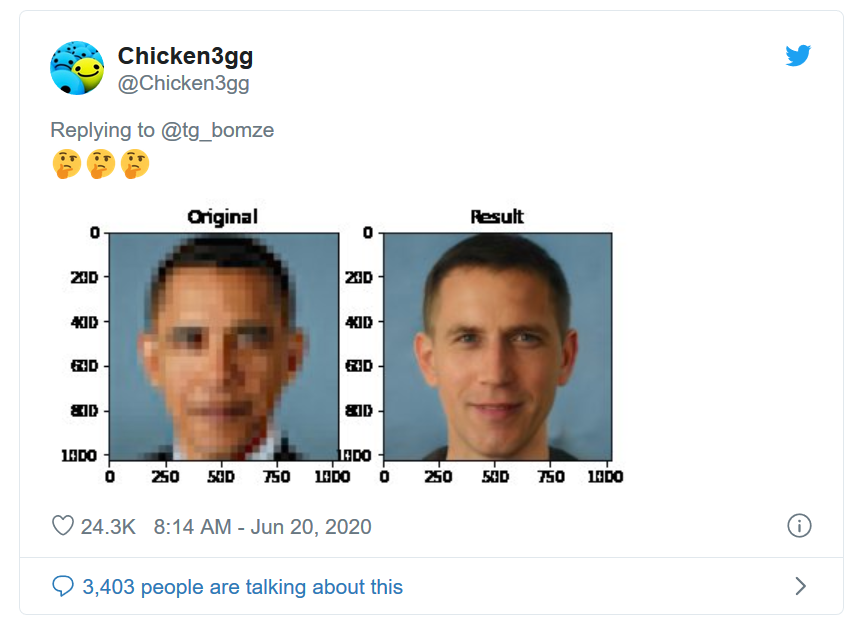

PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models recently and unintentionally sparked heated debate in the machine learning community. The conversation that ensued on Reddit inspired us to embark on a weekend-long hack project. Specifically, the second point in the screenshot below got our creative juices flowing, and we set out to run several experiments to increase racial diversity in PULSE’s super-resolution generations. We were pleasantly surprised that several experiments led to more diversity in age and gender, but unfortunately not in race. This is further evidence that reducing the bias of machine learning models is a hard problem that cannot be fully addressed with algorithmic changes alone.

Reddit comment in response to “How would you fix PULSE?”

The tweet that caused a lot of discussion showing a low-resolution photo of Barack Obama upsampled to what looks like a white person

Quick Review

Previous super-resolution techniques start with a downsampled image and try to add real detail to it. PULSE instead restricts its candidates to images that a GAN can generate, and searches among them for one that matches the low-resolution input once it is downsampled.
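To make the search idea concrete, here is a minimal sketch in NumPy. It is not PULSE's actual implementation: the "generator" is a hypothetical linear map (`toy_generator`) standing in for a real GAN, downsampling is plain average pooling, and the search is ordinary gradient descent on the mean squared error between the downsampled candidate and the low-resolution input. All function names and parameters are our own, chosen for illustration.

```python
import numpy as np


def downsample(img, factor):
    """Average-pool a 2D image by `factor` (assumed stand-in for the downscaling step)."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def toy_generator(z, basis):
    """Hypothetical linear 'generator': a weighted sum of fixed basis images."""
    return np.tensordot(z, basis, axes=1)


def search_latent(low_res, basis, factor, steps=1000, lr=0.5, seed=0):
    """Gradient descent over the latent z so that downsample(G(z)) matches low_res."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=basis.shape[0])
    # Both the toy generator and downsampling are linear, so we can
    # downsample each basis image once and reuse it in every step.
    down_basis = np.stack([downsample(b, factor) for b in basis])
    for _ in range(steps):
        residual = np.tensordot(z, down_basis, axes=1) - low_res
        # Gradient of mean(residual ** 2) with respect to z.
        grad = 2.0 * np.tensordot(down_basis, residual, axes=2) / residual.size
        z -= lr * grad
    return z


# Usage: build a low-resolution target from a known latent, then search for it.
rng = np.random.default_rng(42)
basis = rng.normal(size=(4, 8, 8))   # 4 latent dimensions, 8x8 "high-res" images
z_true = rng.normal(size=4)
low_res = downsample(toy_generator(z_true, basis), factor=2)

z_found = search_latent(low_res, basis, factor=2)
high_res = toy_generator(z_found, basis)
mismatch = np.abs(downsample(high_res, 2) - low_res).max()
```

The point of the sketch is the shape of the optimization: the output is always something the generator can produce, and the only thing being tuned is the latent vector. With a real GAN the generator is nonlinear, so the gradient would come from automatic differentiation rather than a closed form.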

#machine-learning #super-resolution #diversity #deep-learning