Part one deals with some basic data preparation and very basic stats. In part two we are going to take a look at the word2vec (and BERT embeddings) transformation and address the use of vector similarity for clustering (spoiler: it doesn’t work, and running KMeans on the result is misleading, to say the least). Finally, in part three we are going to apply LDA, combine it with the results of the word2vec embeddings and attempt to identify the main themes, together with their graphical representation in good old 2D.

First things first: I am not trying to make any political statements here. It’s way too cliché these days. The real purpose behind this exercise is to look at possible applications of the clustering algorithm I described in my previous two articles (here and here). I was curious to see how it would fare in a really high-dimensional space, and with spaCy’s 300-feature vectors and the BERT family’s 768-feature vectors, NLP was the obvious choice. It turns out it is not all about using the right clustering algorithm (although the exercise proved very instructive in itself).

Trump’s tweets were relatively easy to obtain and have a few useful properties: they are relatively short (being tweets, this is a given), fairly messy (plenty of abbreviations, links, hashtags etc.) and cover a range of subjects, and there are plenty of pure slogans and single-utterance messages (of which “CHINA!” is probably my all-time favourite; it reminds me of Father Jack’s “DRINK!” punctuating some of the best Father Ted episodes). In short, it is a real challenge, especially once we start looking at topic recognition.

Data preparation

Let’s get the imports out of the way.

We are going to need the standard plotting pack, pandas and numpy (naturally), as well as the re and datetime modules:

```python
%matplotlib inline

import datetime
import re

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Show every column when displaying wide DataFrames
pd.options.display.max_columns = 999
```

I will mostly use spaCy for cleaning, but for whatever reason I had the NLTK stopwords on hand, so I used a bit of NLTK as well:

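```python
from nltk.corpus import stopwords
from nltk.tokenize import RegexpTokenizer
import spacy
import en_core_web_lg

# The stopword corpus must be downloaded once beforehand: nltk.download('stopwords')
stop_words = stopwords.words('english')

# Keep runs of word characters only, dropping punctuation
tokenizer = RegexpTokenizer(r'\w+')

# The model must be installed first: python -m spacy download en_core_web_lg
nlp = en_core_web_lg.load()
```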

We could probably get away with a smaller spaCy model, but we are using the large one just because we can.

For the time being I am leaving out pretrained BERT vectors.
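For reference, here is a minimal sketch of how the tokenizer and the stopword list might be combined into a cleaning step. To keep it self-contained it calls `re.findall(r"\w+", ...)`, which is what `RegexpTokenizer(r'\w+')` does under the hood, and a tiny hard-coded stopword set stands in for NLTK’s English list. The name `clean_tokens` is hypothetical, not part of either library.

```python
import re

# Hypothetical stand-in for nltk's stopwords.words('english'), which is much longer
STOP_WORDS = {"the", "a", "an", "and", "is", "are", "to", "of", "in", "will", "be", "we", "our"}


def clean_tokens(text, stop_words=STOP_WORDS):
    """Lower-case, split into runs of word characters, drop stopwords."""
    # Same pattern as RegexpTokenizer(r'\w+'): letters, digits and underscores
    tokens = re.findall(r"\w+", text.lower())
    return [t for t in tokens if t not in stop_words]


print(clean_tokens("We will Make America Great Again! #MAGA https://t.co/xyz"))
```

The URL falls apart into junk tokens such as `https`, `t` and `co`, which is a good argument for stripping links before tokenizing.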

The dataset I am using lives here: http://www.trumptwitterarchive.com/archive

It can be a bit tricky to load; I ended up using a semicolon as the delimiter and the call below to read it in. I am using four years’ worth of tweets, up to and including May of this year. No particular reason for truncating it there, of course.

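```python
tr = pd.read_csv(
    'trump_tweet.csv',
    delimiter=';',
    engine='python',
    encoding='utf-8-sig',  # strips a leading byte-order mark if present
    quotechar='"',
    escapechar='\\',
    on_bad_lines='skip',  # pandas >= 1.3; older versions use error_bad_lines=False
)
```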

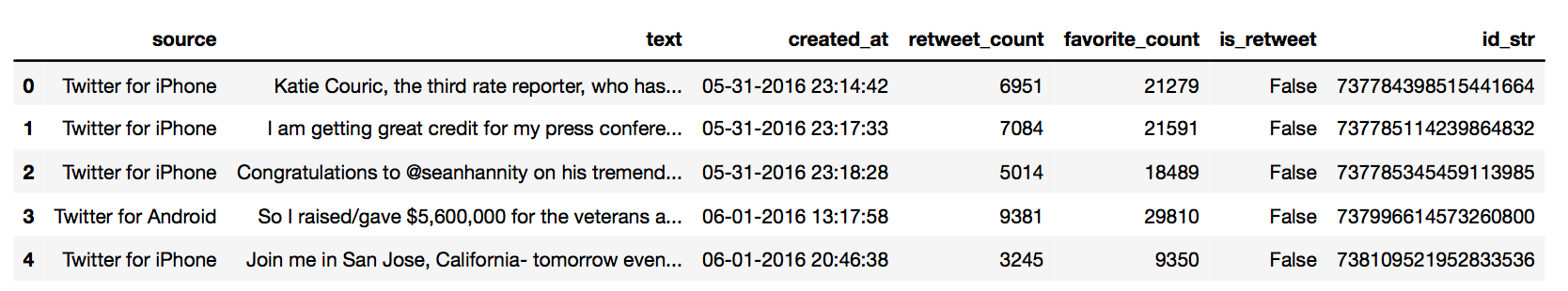
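If the export covers a longer period, one way to cut it down to a fixed window is to parse the timestamps and build a boolean mask. This is a minimal sketch, assuming a `created_at` column in a format pandas can parse. The toy rows and the window bounds are hypothetical, chosen only to illustrate a four-year window ending with May.

```python
import pandas as pd

# Toy rows standing in for the real export ('created_at' is an assumed column name)
tweets = pd.DataFrame({
    "created_at": ["2015-12-31 23:59:00", "2016-06-01 12:00:00",
                   "2020-05-31 08:30:00", "2020-06-01 00:00:01"],
    "text": ["too old", "keep", "keep", "too new"],
})
tweets["created_at"] = pd.to_datetime(tweets["created_at"])

# Hypothetical window: four years up to and including May
start = pd.Timestamp("2016-06-01")
end = pd.Timestamp("2020-06-01")  # exclusive bound, so all of May 31 is kept

window = tweets[(tweets["created_at"] >= start) & (tweets["created_at"] < end)]
print(window["text"].tolist())
```

Using an exclusive upper bound on the first day of the next month avoids accidentally dropping tweets sent during the last day of May.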

Quick peek

Before we even start cleaning and preparing the dataset for NLP analysis, we can take a quick look at some of the trends, mentions etc. It’s good to do this early, partly because we want to get rid of retweets for the NLP part. Admittedly, dropping them will not limit us to tweets written by Mr Trump alone: there will still be multiple tweets sent by his staff, plus plenty of automated promotional tweets sent both from handheld devices and from web management suites. Still, retweets can be verbose enough to muddle the picture significantly, and we know he is not their author, so out they go. I will stick with the clean-up here and come back to the pre-clean dataset in the next section to look at the accounts he regularly retweets.
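As an illustration of the kind of quick look meant here, mentions and hashtags can be counted with pandas’ string methods before anything is cleaned. A minimal sketch on made-up tweets, assuming the tweet body lives in a `text` column; the regexes are simple approximations of Twitter’s own rules.

```python
import pandas as pd

# Made-up tweets; the real data would come from the 'text' column (an assumption)
tweets = pd.DataFrame({"text": [
    "Great rally tonight! Thank you @FoxNews #MAGA",
    "CHINA!",
    "RT @WhiteHouse: Big announcement #MAGA",
    "Thank you @FoxNews and @WhiteHouse",
]})

# findall gives a list of matches per row; explode yields one row per match,
# turning empty lists into NaN, which dropna then removes
mentions = tweets["text"].str.findall(r"@\w+").explode().dropna()
hashtags = tweets["text"].str.findall(r"#\w+").explode().dropna()

print(mentions.value_counts())
print(hashtags.value_counts())
```

Note that the retweet in row three contributes a mention too, which is one more reason to look at mentions separately from the retweet-free dataset.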

All retweets have a favourite count of zero, as favourites are kept with the original tweet, so I am going to use that to filter. Alternatively, you can use a regex or the pandas str accessor to find every tweet that starts with “RT”. I also restrict the dataset to tweets sent from Android and iPhone.
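Both filters can be sketched in a few lines. This is a minimal sketch on toy rows, assuming the export has `text`, `source` and `favorite_count` columns and that devices appear as “Twitter for iPhone” and “Twitter for Android”; check the actual column names and source strings in your copy of the data.

```python
import pandas as pd

# Toy rows; column names and source strings are assumptions about the export
tr = pd.DataFrame({
    "source": ["Twitter for iPhone", "Twitter for Android",
               "Twitter for iPhone", "Twitter Web Client"],
    "text": ["CHINA!", "RT @someone: hello", "Big day tomorrow", "Press release"],
    "favorite_count": [120000, 0, 95000, 30000],
})

# Option 1: retweets carry a favourite count of zero
no_rt = tr[tr["favorite_count"] > 0]

# Option 2: drop anything starting with the "RT @" prefix via the .str accessor
no_rt_alt = tr[~tr["text"].str.startswith("RT @")]

# Keep only the two handheld devices
devices = ["Twitter for iPhone", "Twitter for Android"]
clean = no_rt[no_rt["source"].isin(devices)]
print(clean["text"].tolist())
```

The two retweet filters agree on these toy rows, but on real data they can differ at the margins: an original tweet with no favourites would be dropped by the first, and a retweet without the “RT @” prefix would slip past the second.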

As a little side note, I have seen some articles suggesting that Trump used an Android phone personally and that the iPhone was used by his staff. This changed in 2017; now everything is sent from an iPhone. Comparing the two streams, I don’t see strong evidence that the nature of the tweets from one device differs significantly from the other, so I am ignoring this suggestion here.
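One way to see the device switch for yourself is to cross-tabulate tweet counts by year and source. This is a minimal sketch on made-up rows, assuming `created_at` (already parsed to datetimes) and `source` columns. Counts only show volume per device; judging whether the content of the two streams differs needs a look at the tweets themselves.

```python
import pandas as pd

# Made-up rows; column names and source strings are assumptions
tr = pd.DataFrame({
    "created_at": pd.to_datetime(["2016-03-01", "2016-07-15", "2016-09-30",
                                  "2017-02-01", "2017-06-10", "2018-01-05"]),
    "source": ["Twitter for Android", "Twitter for iPhone", "Twitter for Android",
               "Twitter for Android", "Twitter for iPhone", "Twitter for iPhone"],
})

# Rows: calendar year; columns: device; values: number of tweets
by_year = pd.crosstab(tr["created_at"].dt.year, tr["source"])
print(by_year)
```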
