While managing Kubernetes clusters, we can face some demanding challenges. This article helps you manage your cluster resources properly, especially in an autoscaling environment.

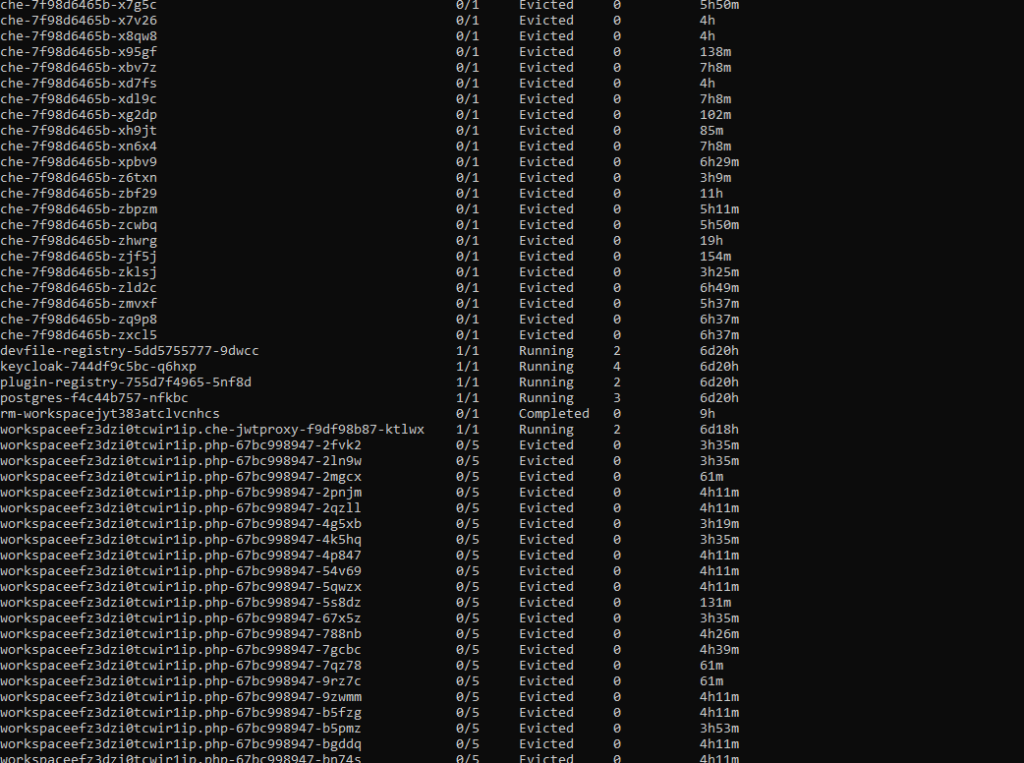

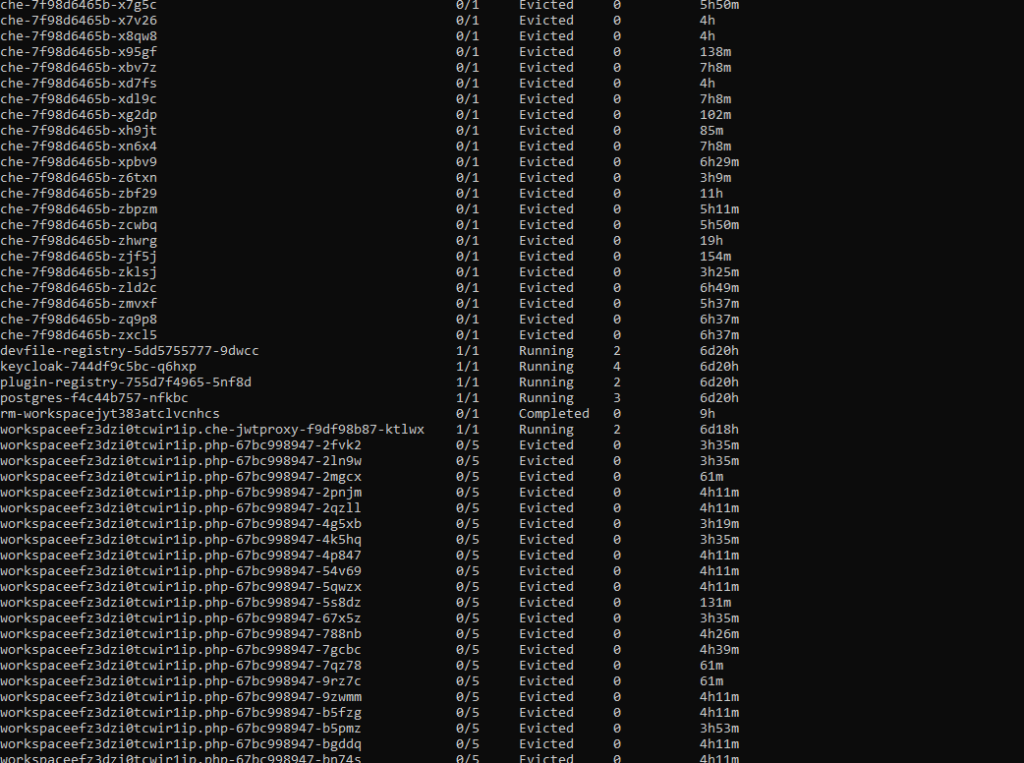

If you run a resource-hungry application, especially on a cluster with autoscaling enabled, at some point this happens:

At first, this may look bad, especially if kubectl get shows dozens of evicted pods when you only wanted to run 5. Given all the claims that you can run containers without worrying about orchestration, because Kubernetes does all of that for you, you may find it overwhelming.

Well, those claims are true to some extent, but the honest answer is that it depends, and it all boils down to a crucial topic in Kubernetes cluster management. Let’s dive into the problem.

Kubernetes Cluster Resources Management

While we are generally aware that resources are never limitless, even in a huge cluster-as-a-service solution, we rarely consider the exact layout of the cluster’s resources. The general idea of virtualization and containerization makes it seem as if resources form a single, huge pool, which is not always true. Let’s see how it looks.

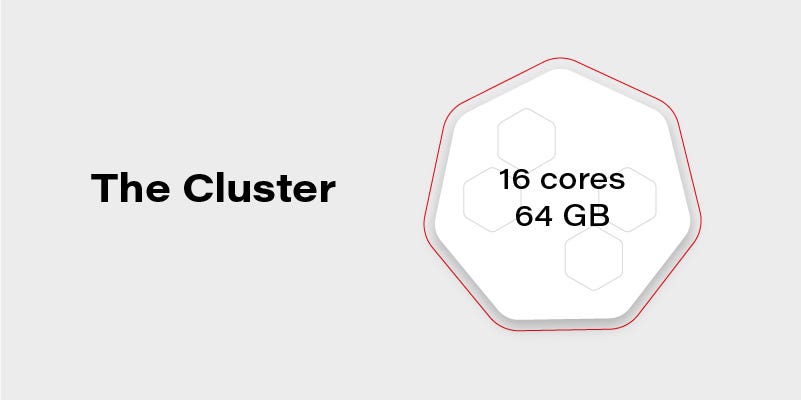

Let’s assume we have a Kubernetes cluster with 16 vCPU and 64GB of RAM.

Can we run our beautiful AI container, which requires 20GB of memory, on this cluster? Obviously not. Why not? We have 64GB of memory available on the cluster!
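To make the arithmetic concrete, here is a minimal Python sketch. It relies on one fact: Kubernetes schedules a pod onto a single node, so the pod's memory request has to fit on one machine, not in the cluster total. The node layout (four nodes with 16 GB each) and the names `Node` and `nodes_that_fit` are assumptions made for illustration only. A real scheduler also subtracts system reservations and memory already requested by other pods.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_memory_gb: float  # simplified: ignores system reservations


def nodes_that_fit(nodes, request_gb):
    """Return the names of nodes whose memory alone covers the request."""
    return [n.name for n in nodes if n.allocatable_memory_gb >= request_gb]


# Hypothetical layout: the 64 GB cluster is really four 16 GB nodes.
cluster = [Node(f"node-{i}", 16.0) for i in range(1, 5)]

# The cluster-wide total looks sufficient for a 20 GB container...
total_gb = sum(n.allocatable_memory_gb for n in cluster)

# ...but the pod must land on one node, and no single node is big enough.
fitting = nodes_that_fit(cluster, request_gb=20.0)

print(f"cluster total: {total_gb} GB")
print(f"nodes that can host a 20 GB pod: {fitting}")
```

Under this assumed layout the total is 64 GB, yet the list of suitable nodes is empty, so the pod would stay unscheduled. With a different layout, for example two 32 GB nodes, the same check would pass.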
