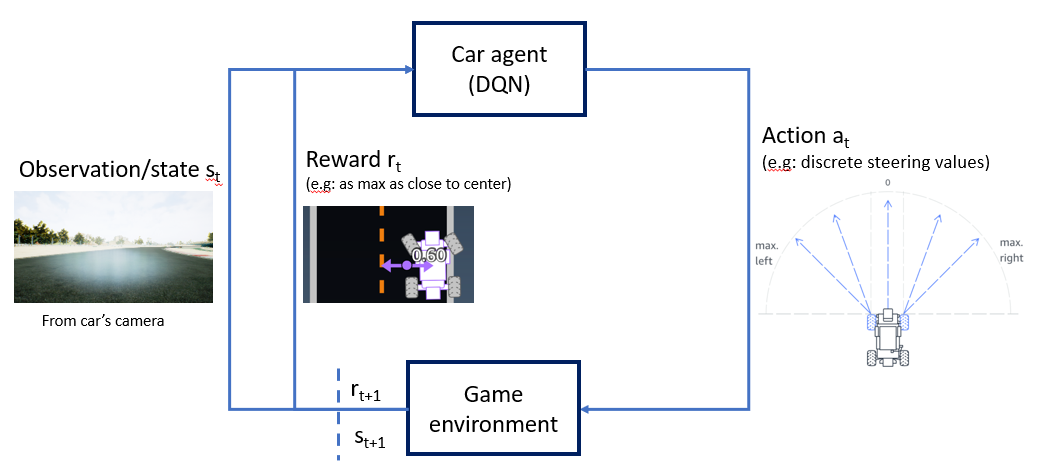

Autonomous vehicles are increasingly popular, and so is deep reinforcement learning. This post shows one way to set up an environment for learning and experimenting with deep reinforcement learning for autonomous vehicles. I will also share my first experiment: training a Deep Q-Network (DQN) to drive a car on a race track in a simulator.

First, here is a short demo of my experiment. The .gif highlights the steps of training a car to drive autonomously through a right turn, over about 12 hours of training. In this experiment, the input to the navigation model is the stream of image frames recorded by a camera mounted on the front of the car. The bottom right window shows the live feed from that camera.

The clip, played at 8x speed, highlights the 12 hours of training a DQN model for the car at a right turn. Observations are the RGB frames from the car's front camera.

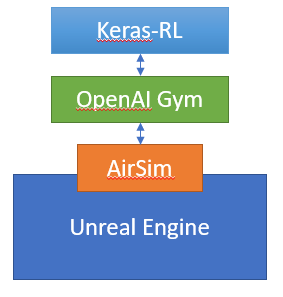

Three main components are used in this experiment: AirSim (running on Unreal Engine), OpenAI Gym, and Keras-RL. Here is a brief introduction to each, in case you are not familiar with them.

- AirSim is a simulator that runs as a plugin on game engines such as Unreal Engine (UE) or Unity. It was developed as a platform for AI research, for experimenting with deep learning, computer vision, and reinforcement learning algorithms for autonomous vehicles.

- OpenAI Gym provides a range of environments, many of them games, for developing and evaluating reinforcement learning algorithms.

- Keras-RL is a Python library that implements several deep reinforcement learning algorithms on top of Keras. It works with OpenAI Gym out of the box, which makes it easy to evaluate and experiment with different algorithms.

The system diagram shows the components and their connections.

For my environment setup, I used Unreal Engine as the game engine with the RaceCourse map, which offers several race tracks. AirSim provides the car and the APIs to control it (e.g., steering and acceleration), as well as APIs to record images. At this point I effectively have a car driving game to play with.

The next step is to connect this driving game to the deep reinforcement learning tools, Keras-RL and OpenAI Gym. To do that, I first created a custom OpenAI Gym environment that calls the necessary AirSim APIs, such as those for controlling the car and capturing images. Because Keras-RL works with Gym environments, Keras-RL algorithms can now interact with the game through this custom environment.
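To make the idea of a custom environment more concrete, here is a minimal sketch of the Gym-style interface (`reset()` and `step(action)`). It is not my actual environment: the class name `CarEnvSketch`, the three-action steering set, and the client methods `reset`, `apply_controls`, `capture_frame`, and `position` are all hypothetical stand-ins for the AirSim calls, and `FakeClient` replaces the simulator so the sketch runs on its own. A real version would typically subclass `gym.Env` and declare its action and observation spaces.

```python
class CarEnvSketch:
    """Gym-style environment: reset() -> obs, step(a) -> (obs, reward, done, info)."""

    # Assumed discrete action set for DQN: (steering, throttle) pairs.
    ACTIONS = [(-0.5, 0.5), (0.0, 0.5), (0.5, 0.5)]

    def __init__(self, client, reward_fn, max_steps=200):
        self.client = client          # hypothetical wrapper around the simulator API
        self.reward_fn = reward_fn    # maps a car position to (reward, off_track)
        self.max_steps = max_steps
        self.steps = 0

    def reset(self):
        """Put the car back at the start and return the first camera frame."""
        self.steps = 0
        self.client.reset()
        return self.client.capture_frame()

    def step(self, action):
        """Apply one discrete action, then observe the frame and compute the reward."""
        steering, throttle = self.ACTIONS[action]
        self.client.apply_controls(steering, throttle)
        self.steps += 1
        obs = self.client.capture_frame()
        reward, off_track = self.reward_fn(self.client.position())
        done = off_track or self.steps >= self.max_steps
        return obs, reward, done, {}


class FakeClient:
    """Stand-in for the simulator so the sketch runs without AirSim."""

    def __init__(self):
        self.x, self.y = 0.0, 0.0

    def reset(self):
        self.x, self.y = 0.0, 0.0

    def apply_controls(self, steering, throttle):
        # Toy kinematics: throttle moves the car forward, steering moves it sideways.
        self.x += throttle
        self.y += steering

    def capture_frame(self):
        # A tiny 2x2 black RGB frame in place of a real camera image.
        return [[(0, 0, 0), (0, 0, 0)], [(0, 0, 0), (0, 0, 0)]]

    def position(self):
        return (self.x, self.y)


def stay_near_axis(position):
    """Toy reward: +1 while |y| <= 1, otherwise -1 and the episode ends."""
    off_track = abs(position[1]) > 1.0
    return (-1.0 if off_track else 1.0), off_track


env = CarEnvSketch(FakeClient(), stay_near_axis)
first_frame = env.reset()
obs, reward, done, info = env.step(1)  # action 1: drive straight
```

Keeping the simulator behind a small client object is a design choice for the sketch: the same `reset`/`step` logic can be exercised with a fake client first and pointed at the real simulator later.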

With these components combined, I had a framework for experimenting with deep reinforcement learning for autonomous vehicles. One more thing was needed before running the first experiment: locating the race track in the map. The track's location is required to determine the car's reward during training and evaluation, but the map does not provide this information. To locate the track, I manually placed waypoints along the center of the road and measured the width of the road. The list of waypoints gives an approximate location of the track. Note that these waypoints are invisible once the game starts.
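As an illustration of how waypoints and the road width can feed a reward, the sketch below treats the waypoints as a polyline along the road center and measures the car's distance to it. The reward shape is an assumption for illustration, not the exact function from my experiment: it falls linearly from 1 at the center line to 0 at the road edge, and leaving the road gives -1 and ends the episode. The function names and the 2D coordinates are also hypothetical.

```python
import math


def distance_to_segment(p, a, b):
    """Shortest distance from 2D point p to the line segment from a to b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: both waypoints coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def distance_to_track(p, waypoints):
    """Distance from p to the polyline through consecutive waypoints."""
    return min(
        distance_to_segment(p, a, b)
        for a, b in zip(waypoints, waypoints[1:])
    )


def track_reward(p, waypoints, road_width):
    """Return (reward, off_track) for a car at position p (assumed reward shape)."""
    half_width = road_width / 2.0
    d = distance_to_track(p, waypoints)
    if d > half_width:
        return -1.0, True            # car has left the road
    return 1.0 - d / half_width, False


# Hypothetical waypoints for a right-angle turn, with a 4-unit-wide road.
waypoints = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
on_center = track_reward((5.0, 0.0), waypoints, 4.0)
near_edge = track_reward((5.0, 1.0), waypoints, 4.0)
off_road = track_reward((5.0, 3.0), waypoints, 4.0)
```

Because the waypoints only approximate the center line, the spacing between them controls how well the polyline follows the curve of the road; denser waypoints through a turn give a more faithful distance.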
