Ensemble to harness the combined potential of all your models!

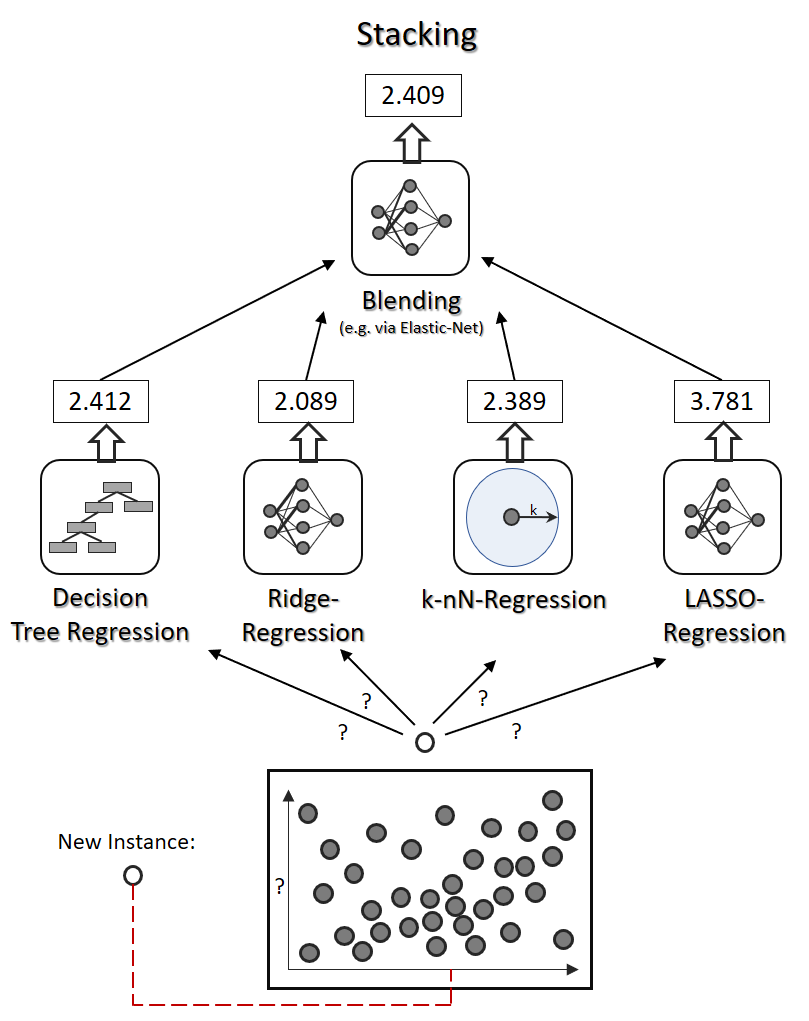

You have probably come across these terms in Kaggle competitions before. Nowadays, ensembling and **stacking** of small models is also done at industrial scale, thanks to advances in computing power. Moreover, smaller models are easier to fine-tune.

Ensembling:

Ensembling is simply the combination of different models. The models can be combined through their predictions or predicted probabilities. One way is to compute the _average_ of the predictions from all the models (note: if some of the predictions are outliers, the _median_ is usually more robust).

Ensemble Probabilities = (M1 + M2 + … + Mn) / n

where Mi = probability predicted by the i-th model, and n = number of models
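To make the formula concrete, here is a minimal sketch in plain Python. The helper name `ensemble_probabilities` and the probability values are made up for illustration; in practice each list would come from a trained model's predicted probabilities on the same samples.

```python
from statistics import mean, median

def ensemble_probabilities(model_probs, combine=mean):
    """Combine per-model probabilities, sample by sample.

    model_probs: one list per model, each holding that model's
    predicted probability for every sample (same sample order).
    combine: function that reduces one sample's probabilities
    to a single value (mean by default, median as an alternative).
    """
    # zip(*model_probs) regroups the values so that each tuple holds
    # the probabilities of all models for a single sample.
    return [combine(sample_probs) for sample_probs in zip(*model_probs)]

# Hypothetical probabilities from three models on four samples.
m1 = [0.9, 0.2, 0.6, 0.4]
m2 = [0.8, 0.3, 0.7, 0.5]
m3 = [0.1, 0.1, 0.8, 0.6]  # strongly disagrees on the first sample

avg_probs = ensemble_probabilities([m1, m2, m3])
med_probs = ensemble_probabilities([m1, m2, m3], combine=median)

print("average:", avg_probs)
print("median: ", med_probs)
```

On the first sample, the one outlying model pulls the average down to about 0.6, while the median stays at 0.8. That is the sense in which the median is less sensitive to outliers.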

**A Random Forest is an ensemble of multiple decision trees.** However, as shown in the diagram above, different kinds of models such as KNN, SVM, and logistic regression can also be combined into an ensemble.
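As a rough sketch of how such a mixed ensemble might look in code, the example below uses scikit-learn's `VotingClassifier` with `voting="soft"`, which averages the predicted probabilities of its members. The synthetic dataset, the choice of default hyperparameters, and the train/test split are all assumptions made for illustration, not part of the original setup.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Synthetic binary classification data (illustrative only).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Soft voting averages the class probabilities of the three models.
# SVC needs probability=True so that it exposes predict_proba.
ensemble = VotingClassifier(
    estimators=[
        ("knn", KNeighborsClassifier()),
        ("svm", SVC(probability=True, random_state=0)),
        ("logreg", LogisticRegression(max_iter=1000)),
    ],
    voting="soft",
)
ensemble.fit(X_train, y_train)

probs = ensemble.predict_proba(X_test)  # averaged probabilities per class
accuracy = ensemble.score(X_test, y_test)
print(f"Ensemble accuracy: {accuracy:.3f}")
```

Switching to `voting="hard"` would instead take a majority vote over the predicted labels, which is the other common way to combine classifiers.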
