Actually, this post was planned as a short note about using [NodeAffinity](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/) for a Kubernetes Pod.

But then, as often happens, after I started writing about one thing, I ran into another, and then another one, and as a result I ended up with this long-read post about Kubernetes load-testing.

So, I started with NodeAffinity, but then wondered how the Kubernetes [cluster-autoscaler](https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler) would work: will it take the NodeAffinity setting into account when creating new WorkerNodes?

To check this, I made a simple load-test using Apache Benchmark to trigger the Kubernetes HorizontalPodAutoscaler, which had to create new pods, and those new pods had to trigger [cluster-autoscaler](https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler) to create new AWS EC2 instances that would be attached to the Kubernetes cluster as WorkerNodes.

Then I started a more complicated load-test and faced an issue where the pods stopped scaling.

And then I decided that, since I was running load-tests anyway, it would be a good idea to test various AWS EC2 instance types: T3, M5, C5. And of course, the results had to go into this post.

After this, we started full load-testing and faced a couple of other issues, and obviously I had to write about how I solved them.

In the end, this post is about Kubernetes load-testing in general, about EC2 instance types, about networking and DNS, and about a few other things around a high-load application in a Kubernetes cluster.

Note: `kk` here is an alias for `kubectl`, added with `echo 'alias kk="kubectl"' >> ~/.bashrc`.

- The Task

- Choosing an EC2 type

- EC2 AMD instances

- EC2 Graviton instances

- eksctl and Kubernetes WorkerNode Groups

- The Testing plan

- Kubernetes NodeAffinity && nodeSelector

- Deployment update

- nodeSelector by a custom label

- nodeSelector by Kuber label

- Testing AWS EC2 t3 vs m5 vs c5

- Kubernetes NodeAffinity vs Kubernetes ClusterAutoscaler

- Load Testing

- Day 1

- Day 2

- net/http: request canceled (Client.Timeout exceeded while awaiting headers)

- AWS RDS — “Connection refused”

- AWS RDS max connections

- Day 3

- Kubernetes Liveness and Readiness probes

- Kubernetes: PHP logs from Docker

- The First Test

- php_network_getaddresses: getaddrinfo failed and DNS

- Kubernetes dnsPolicy

- Running a NodeLocal DNS in Kubernetes

- Kubernetes Pod dnsConfig && nameservers

- The Second Test

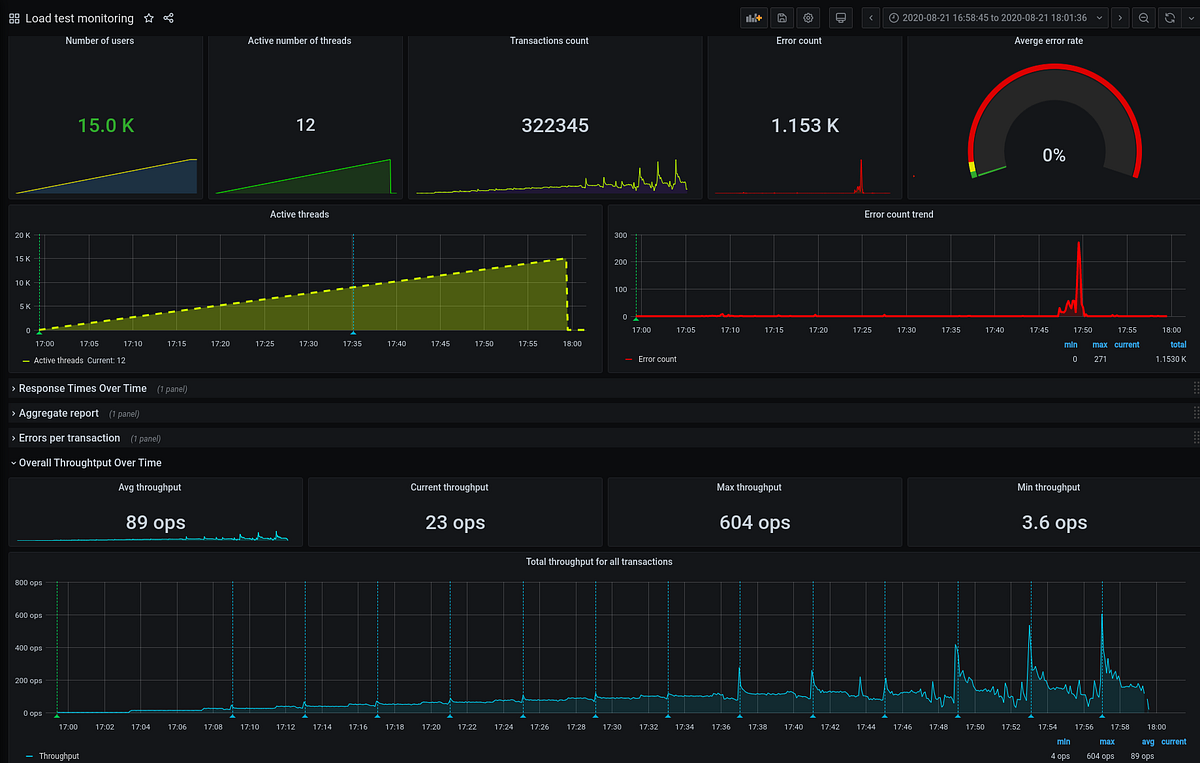

- Apache JMeter and Grafana

The Task

So, we have an application that really loves CPU.

PHP, Laravel. Currently, it’s running in DigitalOcean on 50 droplets, plus an NFS share, Memcache, Redis, and MySQL.

What we want is to move this application to a Kubernetes cluster in AWS EKS to save some money on the infrastructure, because the current one in DigitalOcean costs us about 4.000 USD/month, while one AWS EKS cluster costs us about 500–600 USD (the cluster itself plus four AWS _t3.medium_ EC2 instances for WorkerNodes in each of two separate AWS AvailabilityZones, eight EC2 instances in total).

With this setup on DigitalOcean, the application stopped working at 12.000 simulated users (48.000 per hour).

We want to sustain up to 15.000 users (60.000/hour, 1.440.000/day) on our AWS EKS with autoscaling.

The project will live on a dedicated WorkerNodes group to avoid affecting other applications in the cluster. To make sure new pods are created only on those WorkerNodes, we will use NodeAffinity.
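As a rough illustration of the idea, a Deployment can require that its pods land only on nodes carrying a given label. This is a minimal sketch, not the manifest used for this project: the `example-app` names, the image, and the `nodegroup-name` label key and value are all assumptions made up for the example.

```yaml
# Hypothetical Deployment fragment; all names, labels, and the image are illustrative.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: example-app
  template:
    metadata:
      labels:
        app: example-app
    spec:
      affinity:
        nodeAffinity:
          # Hard requirement: schedule only on nodes that match the expression below.
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: nodegroup-name        # assumed custom node label
                    operator: In
                    values:
                      - example-app-workers    # assumed label value of the dedicated group
      containers:
        - name: app
          image: example/app:latest            # placeholder image
```

Keep in mind that NodeAffinity only attracts these pods to the labeled nodes. It does not stop pods of other applications from being scheduled there; that would need something like taints and tolerations on the node group.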

Also, we will perform load-testing using three different AWS EC2 instance types (t3, m5, c5) to choose which one best suits our application’s needs, and will do another round of load-testing to check how HorizontalPodAutoscaler and cluster-autoscaler work.
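To make the autoscaling chain more concrete: the HorizontalPodAutoscaler adds pods when a metric such as CPU utilization goes above a target, and cluster-autoscaler adds WorkerNodes when some of those pods cannot be scheduled on the existing ones. Below is a minimal sketch of an HPA, assuming a cluster that serves the `autoscaling/v2` API and a Deployment named `example-app` with CPU requests set on its containers. The name, replica limits, and the 60% target are illustrative values, not the settings used in these tests.

```yaml
# Hypothetical HorizontalPodAutoscaler; names and numbers are illustrative.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: example-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: example-app          # assumed Deployment name
  minReplicas: 2               # assumed lower bound
  maxReplicas: 20              # assumed upper bound
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 60   # scale out when average CPU usage exceeds 60% of requests
```

The CPU utilization target is calculated against the containers' CPU requests, so without requests in the pod spec this kind of HPA has nothing to compare against.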
