Ask a developer which two things matter most for an application’s performance, and the answer will almost certainly be:

- The number of CPU cores.

- The amount of RAM available to the application.

With a monolithic architecture, we usually estimate the total CPU and RAM the application needs and provision a single large machine with many CPU cores and hundreds of GBs of RAM.

Such large machines have two drawbacks: they are expensive compared with several horizontally scaled lightweight machines, and they are hard to obtain at short notice, whereas lightweight machines can be provisioned on the fly.

Since this article focuses on autoscaling and Docker image size, let’s not get into the monolith vs. microservices debate.

In this article, we will discuss:

- What autoscaling is and when it is needed.

- How Docker image size relates to autoscaling.

- How image size affects end users in single-node and multi-node Kubernetes deployments.

Let’s get started.

- What is autoscaling?

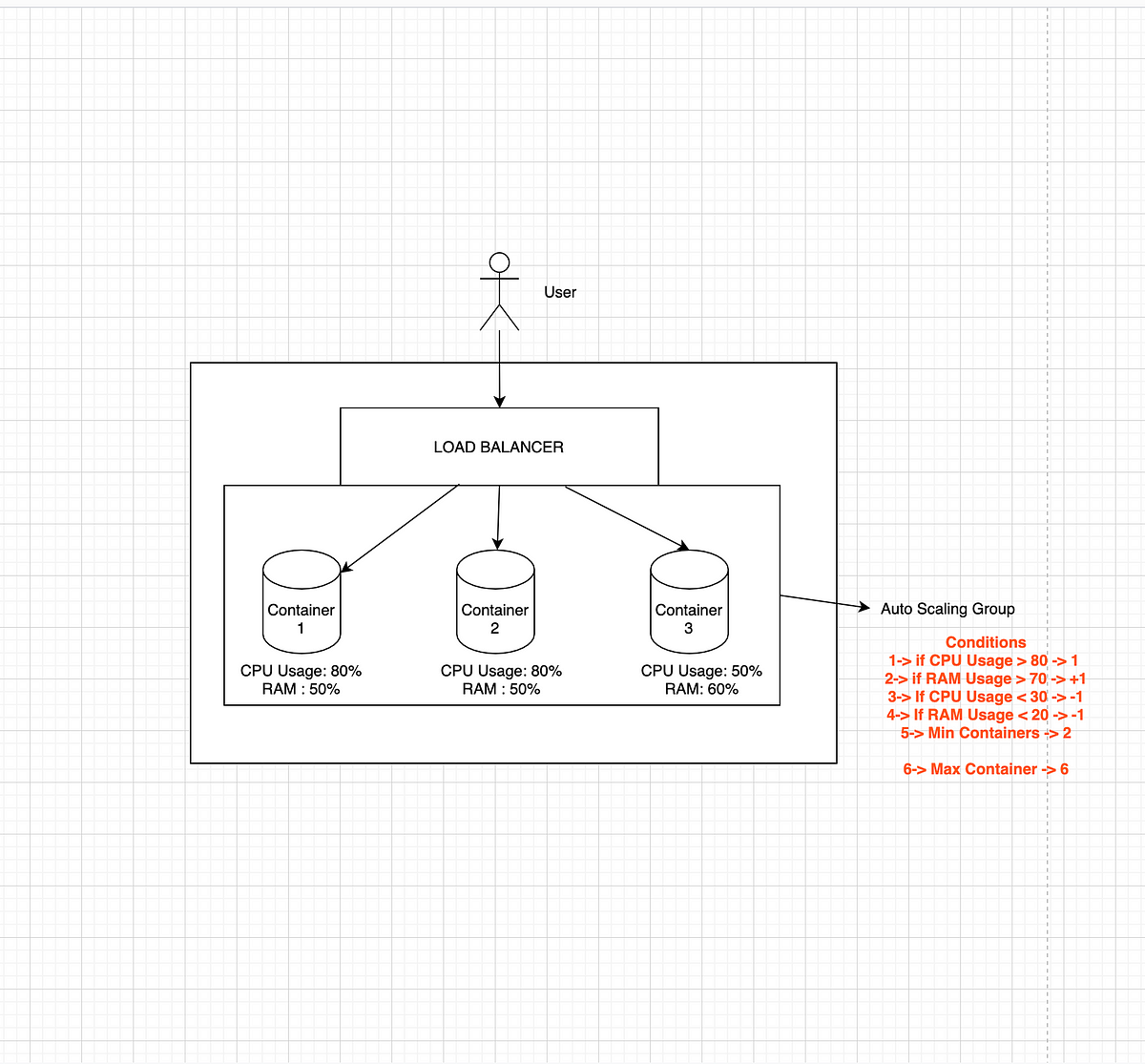

Let’s say my estimated load is 50,000 RPM (requests per minute) and my SLA on response time is around 50 milliseconds. Based on this load and SLA, I benchmark my server and database to determine how many containers are required and the capacity (CPU and RAM) of each container. Let’s visualize what that looks like.
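The sizing step above boils down to a bit of arithmetic. The sketch below is illustrative only: the 50,000 RPM and 50 ms figures come from the example, but the per-container throughput (`PER_CONTAINER_RPS`) and the safety margin (`HEADROOM`) are hypothetical placeholders for whatever your own benchmark produces. It also applies Little’s law (average requests in flight = arrival rate × time per request), which is a standard queueing result added here for context, not something the benchmark itself reports.

```python
import math

# Figures taken from the example in the text.
REQUESTS_PER_MINUTE = 50_000
SLA_SECONDS = 0.050  # 50 ms response-time target

# Hypothetical: requests/second one container sustained within the SLA
# during benchmarking. Replace with your own measured value.
PER_CONTAINER_RPS = 200

# Hypothetical safety margin so containers don't run at 100% of
# their benchmarked capacity.
HEADROOM = 0.25


def required_containers(rpm: float, per_container_rps: float, headroom: float) -> int:
    """Containers needed to serve `rpm` requests/minute, including headroom."""
    rps = rpm / 60
    return math.ceil(rps * (1 + headroom) / per_container_rps)


def in_flight_requests(rpm: float, latency_s: float) -> float:
    """Little's law: average concurrency = arrival rate x time per request."""
    return (rpm / 60) * latency_s


if __name__ == "__main__":
    print(f"Requests per second: {REQUESTS_PER_MINUTE / 60:.1f}")
    print(f"Avg. requests in flight (if each takes the full SLA): "
          f"{in_flight_requests(REQUESTS_PER_MINUTE, SLA_SECONDS):.1f}")
    print(f"Containers needed: "
          f"{required_containers(REQUESTS_PER_MINUTE, PER_CONTAINER_RPS, HEADROOM)}")
```

With these placeholder numbers, 50,000 RPM is roughly 833 requests per second, about 42 requests are in flight at any moment if each one takes the full 50 ms, and six containers are needed once the 25% headroom is included (five without it). Keeping the headroom explicit makes it easy to see how much spare capacity you are paying for before autoscaling kicks in.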
