I recently learned about logistic regression and feed forward neural networks, and how either of them can be used for classification. What bugged me was the difference between them: why and when do we prefer one over the other? So I decided to compare the two techniques, both theoretically and by using each of them to classify digits from the MNIST dataset. In this article I present that comparison, and I hope it is useful for people trying their hand at Machine Learning.

Source

The code I use in this article comes from the tutorials by Jovian.ml and freeCodeCamp on YouTube. The link is provided in the references below.

Problem Statement

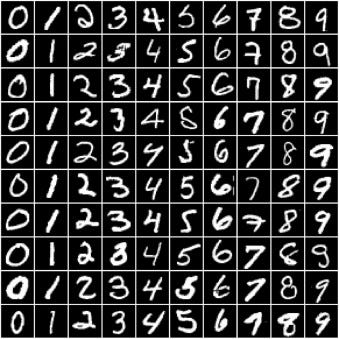

Given a handwritten digit, the model should be able to tell whether the digit is a 0,1,2,3,4,5,6,7,8 or 9.

We will use the MNIST database, a large collection of handwritten digits, to train and test our model, so that it can eventually classify a handwritten digit as 0, 1, 2, 3, 4, 5, 6, 7, 8 or 9. The dataset consists of 28px by 28px grayscale images of handwritten digits (0 to 9), along with a label for each image indicating which digit it represents. We will see how to fetch and use this dataset once we get to the code.

Let us have a look at a few samples from the MNIST dataset.

Examples of handwritten digits from the MNIST dataset

Now that we have a clear idea about the problem statement and the data source we are going to use, let’s look at the fundamental concepts we will use to classify the digits.

You can ignore these basics and jump straight to the code if you are already aware of the fundamentals of logistic regression and feed forward neural networks.

Logistic Regression

I will not go deep into the math. You can have a look at the explanation of Logistic Regression on Wikipedia to get the essence of the mathematics behind it.

Logistic Regression is used for classification. It predicts the probability P(Y=1|X) of the target variable from a set of input features. For example, say you need to decide whether an image shows a cat or a dog. If we set up Logistic Regression to output the probability that the image is a cat, then an output close to 1 means the model predicts a cat, and an output close to 0 means it predicts a dog.

It is called Logistic Regression because it uses the logistic function, which is a sigmoid function. A sigmoid function takes in any real value and produces a value between 0 and 1. Why is this useful? Because probabilities lie between 0 and 1, the sigmoid function lets us turn an input into a probability for the target value.

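The sigmoid/logistic function looks like:

$$\sigma(t) = \frac{1}{1 + e^{-t}}$$

where e is Euler's number (the base of the natural logarithm) and **_t_** is the input value, which appears in the exponent.

Generally **_t_** is a linear combination of many variables and can be represented as:

$$t = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_n x_n$$

Here the x values are the input variables and the β values are the coefficients that the model learns.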

The standard logistic function:

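NOTE: Logistic Regression is a linear method at heart: it computes a linear combination of the inputs and then passes it through the non-linear sigmoid function. The sigmoid squashes the result into a probability between 0 and 1, but the prediction still depends on that linear combination, so the decision boundary between the two classes remains linear.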
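To make this concrete, here is a minimal sketch in plain Python of how a logistic regression prediction is computed: a linear combination of the inputs, passed through the sigmoid. The names `predict_proba`, `weights` and `bias` are my own, and the numbers are hypothetical values picked by hand, not learned from any data.

```python
import math

def sigmoid(t):
    """Logistic function: squashes any real number into the range 0 to 1."""
    # Two branches so that math.exp never receives a large positive argument.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)

def predict_proba(features, weights, bias):
    """Return P(Y=1 | X): linear combination of the inputs, then sigmoid."""
    t = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(t)

# Hypothetical weights and bias, chosen by hand for illustration only.
weights = [2.0, -1.0]
bias = 0.5

p = predict_proba([1.0, 0.5], weights, bias)  # t = 0.5 + 2.0 - 0.5 = 2.0
label = 1 if p >= 0.5 else 0                  # p >= 0.5 exactly when t >= 0
print(round(p, 3), label)
```

Notice that the class decision only depends on the sign of the linear combination, which is why the boundary between the two classes is a straight line (or a flat plane in higher dimensions).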

Neural networks

Artificial Neural Networks are loosely modelled on the biological neural networks found in animal brains. They are currently used for a variety of purposes such as classification and prediction. In Machine Learning terms, why is there such a craze for Neural Networks? Because, given enough hidden units, they can approximate a very wide class of functions; this result is known as the universal approximation theorem.

What does a neural network look like? Like this:

ANN with 1 hidden layer, each circle is a neuron. Source: Wikipedia

We will be implementing something like the picture above soon. What you see in that image is called a neural network architecture. You can define your own architecture by using more than one hidden layer, adding more neurons to the hidden layers, and so on. Researchers currently use several kinds of neural network architectures, such as Feed Forward Neural Networks, Convolutional Neural Networks and Recurrent Neural Networks.

In this article we will be using the Feed Forward Neural Network, as it is simple to understand for people like me who are just getting into the field of machine learning.

Feed Forward Neural Networks

Let us talk about the perceptron a bit. It is a neural network unit created by Frank Rosenblatt in 1957 that can tell you which class an input belongs to. It is a type of linear classifier, which means it can only separate classes that are linearly separable.

What do you mean by linearly separable data?

As you can see in image A, a single line (which can be represented by a linear equation) separates the blue and green dots, hence this data is called linearly separable.

And what does non-linearly separable data look like?

Like the one in **image B**. We can see that the red and green dots cannot be separated by a single line; a function representing a circle is needed to separate them. As the separation cannot be done by a linear function, this is non-linearly separable data.

Why do we need to know about linearly and non-linearly separable data?

Because a single perceptron, which looks like the diagram below, is only capable of classifying linearly separable data. So we need feed forward networks, also known as multi-layer perceptrons, which are capable of learning non-linear functions.

A single layer perceptron

A feed forward neural network / multi-layer perceptron:

A multi layer perceptron(Feed Forward Neural Network). Source: missinglink.ai
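To see why stacking perceptrons helps, here is a small sketch using XOR instead of the circle example above. XOR is a standard case of data that no single line can separate, yet two layers of perceptrons handle it. The weights and biases are set by hand for illustration (nothing is learned here), and the function names are my own.

```python
def step(t):
    """Threshold activation: output 1 when the weighted sum is positive."""
    return 1 if t > 0 else 0

def perceptron(inputs, weights, bias):
    """A single perceptron: weighted sum of the inputs, then a threshold."""
    return step(bias + sum(w * x for w, x in zip(weights, inputs)))

def xor_network(x1, x2):
    """Hidden layer of two perceptrons (OR, NAND) feeding an output perceptron (AND)."""
    h_or = perceptron([x1, x2], [1.0, 1.0], -0.5)
    h_nand = perceptron([x1, x2], [-1.0, -1.0], 1.5)
    return perceptron([h_or, h_nand], [1.0, 1.0], -1.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

Each hidden perceptron draws one straight line, and the output perceptron combines the two lines into a region that a single line could never describe.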

I get all of this, but how does the network learn to classify?

You must be wondering about this by now. How these networks learn starts from the perceptron learning rule, which states that a perceptron learns the relation between the input parameters and the target variable by adjusting the weights associated with each input. If the weighted sum of the inputs crosses a chosen threshold, the neuron outputs true; otherwise it outputs false. When we combine a number of perceptrons into a feed forward neural network, each neuron produces a value, and together the neurons produce an output that is used for classification. In practice, multi-layer networks are trained with gradient descent and backpropagation, which extend this weight-adjusting idea to the hidden layers.
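The weight adjusting described above can be sketched for a single perceptron in plain Python. This is only an illustration of the classic perceptron learning rule on a tiny, linearly separable toy dataset (the AND gate); the function name, learning rate and number of epochs are assumptions I picked for the example, and this is not how a library like PyTorch trains a multi-layer network.

```python
def step(t):
    """Threshold activation: output 1 when the weighted sum is positive."""
    return 1 if t > 0 else 0

def train_perceptron(samples, learning_rate=0.1, epochs=20):
    """Perceptron learning rule: shift each weight by rate * (target - prediction) * input."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            prediction = step(bias + sum(w * x for w, x in zip(weights, inputs)))
            error = target - prediction  # 0 when correct, +1 or -1 when wrong
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# Toy dataset: the AND gate, which a single line can separate.
and_gate = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train_perceptron(and_gate)
predictions = [
    step(bias + sum(w * x for w, x in zip(weights, inputs)))
    for inputs, _ in and_gate
]
print(weights, bias, predictions)
```

The weights only change when the perceptron makes a mistake, so once every sample is classified correctly the values stop moving.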

Now that was a lot of theory and concepts!

I have tried to shorten and simplify the most fundamental concepts. If you are still unclear, that’s perfectly fine: I am sure your doubts will be answered once we start the code walk-through, as seeing each of these concepts in action should help you understand what’s really going on. I have also provided the references that helped me understand the concepts while writing this article; please go through them for further understanding.

Let’s start the most interesting part, the code walk-through!

Importing Libraries

We will be working with the MNIST dataset for this article. The torchvision library provides a number of utilities for working with image data, and we will be using some of them as we go along in our code.
