The bigger model is not always the better model

Miniaturization of electronics started by NASA’s push became an entire consumer products industry. Now we’re carrying the complete works of Beethoven on a lapel pin listening to it in headphones. - _Neil deGrasse Tyson, astrophysicist and science commentator_

[…] the pervasiveness of ultra-low-power embedded devices, coupled with the introduction of embedded machine learning frameworks like TensorFlow Lite for Microcontrollers will enable the mass proliferation of AI-powered IoT devices. - _Vijay Janapa Reddi, Associate Professor at Harvard University_

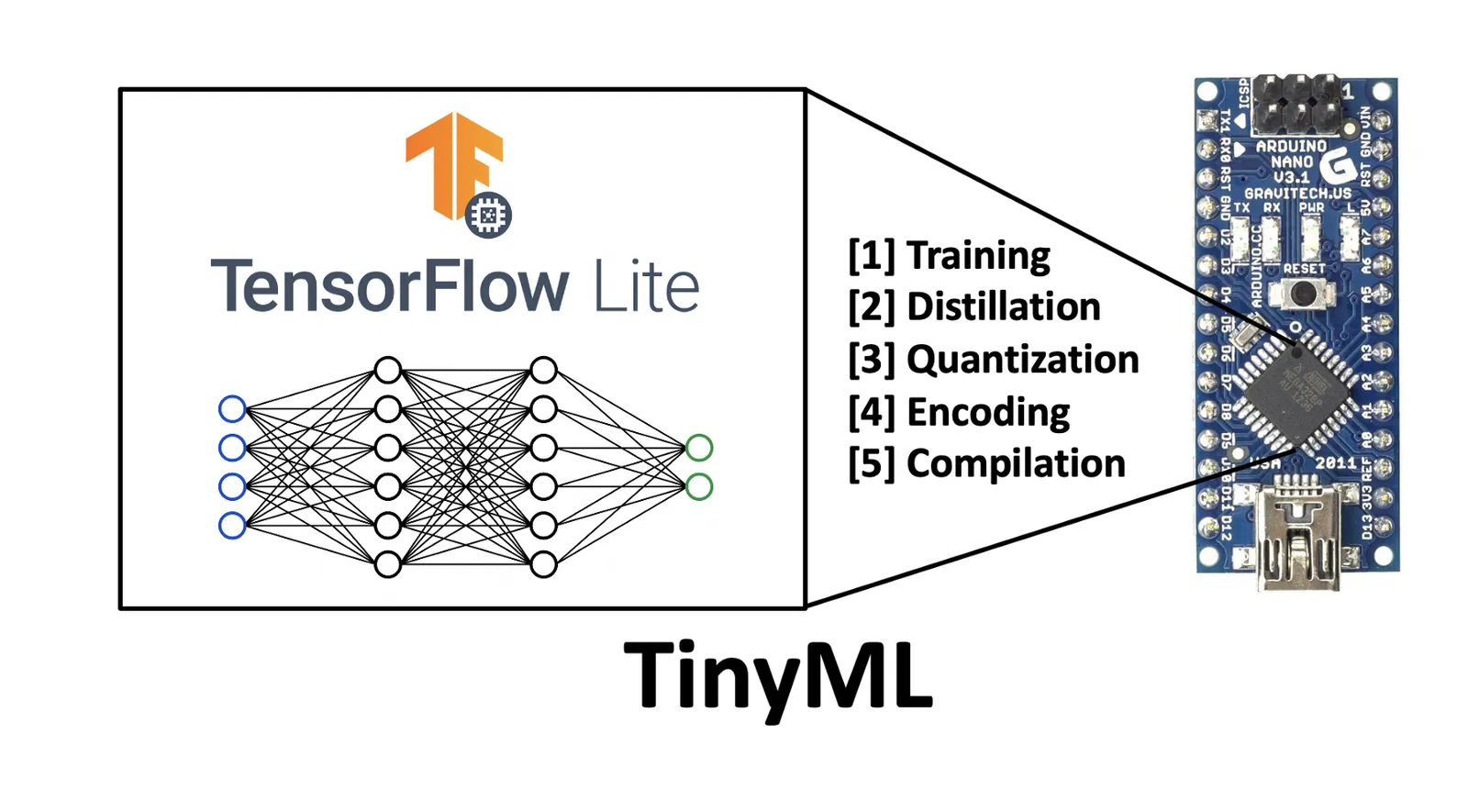

Overview of tiny machine learning (TinyML) with embedded devices.

This is the first in a series of articles on tiny machine learning. The goal of this article is to introduce the reader to the idea of tiny machine learning and its future potential. In-depth discussion of specific applications, implementations, and tutorials will follow in subsequent articles in the series.

Introduction

Over the past decade, we have witnessed the size of machine learning algorithms grow exponentially due to improvements in processor speeds and the advent of big data. Initially, models were small enough to run on local machines using one or more cores within the central processing unit (CPU).

Shortly after, computation on graphics processing units (GPUs) became necessary to handle larger datasets. GPUs also became more readily available thanks to the introduction of cloud-based services such as SaaS platforms (e.g., Google Colaboratory) and IaaS offerings (e.g., Amazon EC2 instances). At this time, algorithms could still be run on single machines.

More recently, we have seen the development of specialized application-specific integrated circuits (ASICs) such as tensor processing units (TPUs), which can pack the power of ~8 GPUs. These devices have been augmented with the ability to distribute learning across multiple systems in an attempt to train larger and larger models.

This came to a head recently with the release of the GPT-3 model (released in May 2020), boasting a network architecture containing a staggering 175 billion parameters, a count more than double the number of neurons present in the human brain (~85 billion). This is more than 10x the number of parameters in the next-largest neural network at the time, Turing-NLG (released in February 2020, containing ~17 billion parameters). Some estimates claim that the model cost around $10 million to train and used approximately 3 GWh of electricity (approximately the output of three nuclear power plants for an hour).
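As a quick sanity check on the figures above, the comparisons can be reproduced with a few lines of arithmetic. This is only a back-of-the-envelope sketch: the Turing-NLG size is rounded to about 17 billion parameters, and the 1 GW output per nuclear plant is an assumption made here for illustration, not a figure from the estimates themselves.

```python
# Back-of-the-envelope check of the model-size and energy comparisons.
GPT3_PARAMS = 175e9         # GPT-3 size quoted in the text
TURING_NLG_PARAMS = 17e9    # ASSUMPTION: Turing-NLG rounded to ~17 billion
BRAIN_NEURONS = 85e9        # human brain neuron count quoted in the text
TRAINING_ENERGY_GWH = 3.0   # estimated training energy quoted in the text
PLANT_OUTPUT_GW = 1.0       # ASSUMPTION: one nuclear plant delivers ~1 GW

# How many times larger GPT-3 is than each reference point.
size_vs_turing = GPT3_PARAMS / TURING_NLG_PARAMS
size_vs_brain = GPT3_PARAMS / BRAIN_NEURONS

# Energy (GWh) divided by power (GW) gives hours of a single plant's output.
plant_hours = TRAINING_ENERGY_GWH / PLANT_OUTPUT_GW

print(f"GPT-3 vs Turing-NLG: {size_vs_turing:.1f}x")
print(f"GPT-3 size vs brain neuron count: {size_vs_brain:.1f}x")
print(f"Training energy: {plant_hours:.0f} plant-hours at {PLANT_OUTPUT_GW:.0f} GW")
```

Under these assumptions, three plant-hours is the same as three plants running for one hour, which matches the comparison in the text.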

While the achievements of GPT-3 and Turing-NLG are laudable, they have naturally led some in the industry to criticize the increasingly large carbon footprint of AI. However, they have also helped to stimulate interest within the AI community in more energy-efficient computing. Such ideas, including more efficient algorithms, data representations, and computation, have been the focus of a seemingly unrelated field for several years: tiny machine learning.
