In part 1, I talked about the basics of contract-based testing. I haven’t covered the specifics yet; I will do so in part 3.

Before I dive deep into the details of contract-based testing, I want to take a small step back and talk about end-to-end testing. Both contract-based tests and end-to-end tests are ways to test full applications, and the two approaches have a lot of overlap. In this article, I want to talk about some issues with end-to-end tests and why contract-based tests are a better fit for most situations.

End-to-end testing

When testing on an end-to-end environment we deploy all applications required for a test in the same environment. We try to make this environment as similar to production as possible.

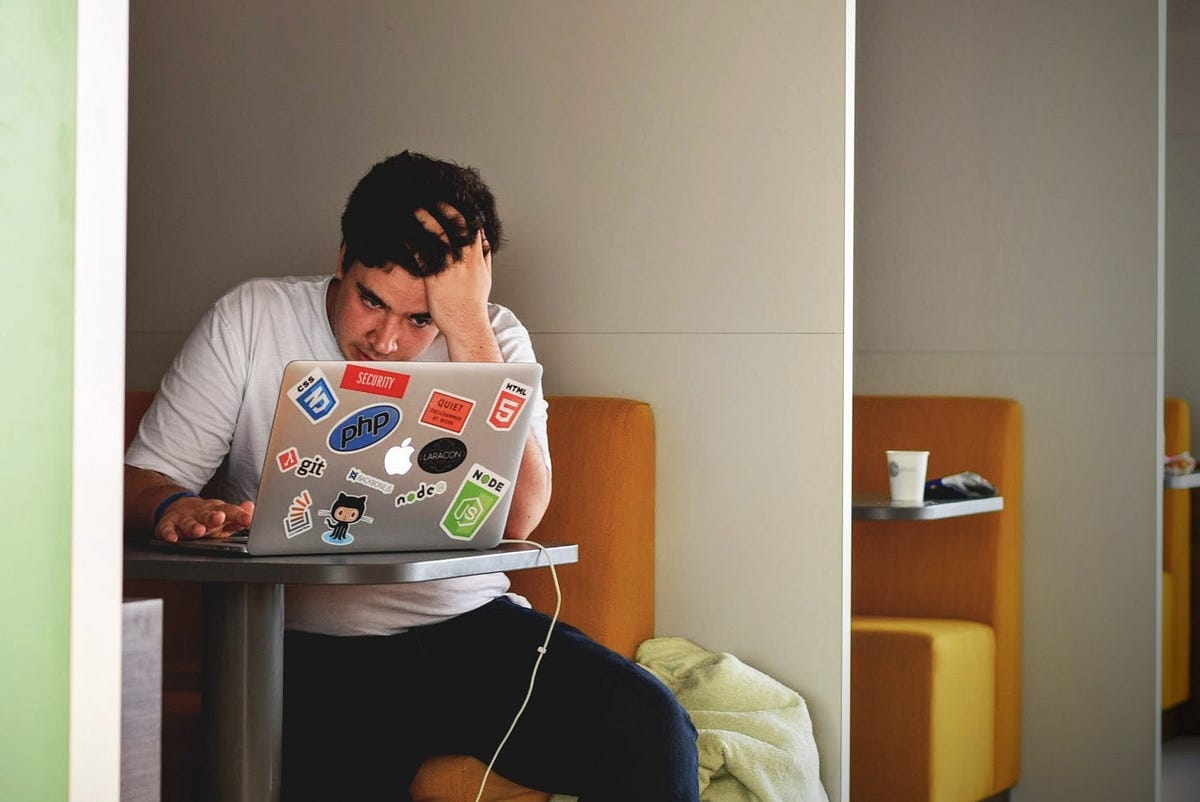

Image by Vilas Pultoo

In this example, we have two applications running on the end-to-end environment: a provider application called Amazing and a consumer application named Belle. Amazing and Belle can talk to each other via an API. We feed the applications everything they need to run. Think of environment-specific dependencies like data, files, certificates, etc. With all of this set up, we can start testing.

Image by Vilas Pultoo

When we execute a test, we’ll do so from a separate environment, like a laptop or a pipeline. Note that all the complex stuff happens in the end-to-end environment.

The good stuff

Testing like this is very effective. Because of the production-likeness, we can test anything and everything that can happen in production. It’s no wonder that this way of testing is popular; at a passing glance, it’s clear to everybody why end-to-end tests are useful.

- Test complex interactions

- Functional tests

- Technical tests

- Manual tests

- Let the business preview features

- Replay production issues

- Lots of coverage per test

Headache fuel

Despite the awesome possibilities, I get headaches from testing on an end-to-end environment.

- Tests are brittle.

  - This is especially the case when teams release often. Many applications on end-to-end environments will run multiple versions every day, with no notice upfront. Having this many versions, some of which are untested, hurts the stability of the environment. Another effect is that your test results can’t tell you whether things will work with the production versions of the applications you depend on.

- Tests take a long time to make.

  - When making tests, you often depend on other teams to do things, like adding data to their database. This is slow because those teams have different priorities. It gets more painful when you need data to be in sync across multiple applications for your tests to pass.

- Maintenance is very time-consuming.

  - End-to-end environments tend to have a lot of tests, and all of them need maintenance at some point. That’s not an issue when we have full control over all parts of a test, but some parts of our tests live in other people’s applications and vice versa. This results in situations where no one knows what belongs to which test or whether it can be removed. Over time, the environment is slowly polluted with leftovers of decommissioned tests.

What’s interesting about the issues listed above is that they are all born from the same root cause: tight coupling. We intertwine our applications and teams to such a degree that it becomes impossible to manage things like data and versions effectively. The teams involved constantly depend on each other. For me, the result is a headache at the end of the day.

Slow feedback

The final headache inducer is the slow feedback end-to-end tests provide. With a continuous integration pipeline, we can get the results of low-level tests in a few minutes. End-to-end tests, however, tend to be slow. When we add these very high-level tests to the pipeline, our feedback time increases dramatically, often 10-fold. And that’s assuming a best-case scenario where …

- You’re executing all tests.

  - Skipping tests can result in you missing an issue. Don’t compromise the quality of your test run by cutting corners.

- You’ve automated all your end-to-end tests.

  - Including manual tests in a continuous integration pipeline is a bad idea. It will inflate your feedback time to when-the-tester-has-time-to-look-at-it, which will drive developers crazy.

- The end-to-end environment has been stable for the duration of the test run.

  - For example, an application you’re depending on might get a new release while you’re running your tests. This release causes some downtime, which makes your tests fail. You analyze the error and then have to restart the pipeline. Again. This further increases your feedback time.

This slow feedback will lead to a longer time-to-market for all changes to your application, including bugfixes. This has a direct impact on the experience of the end-user and the ferocity of my headache.

Headaches begone

Contract-based testing can mitigate a lot of our headaches, though it’s not the almighty hero that will save us in every situation. Some think all end-to-end tests can be replaced with contract tests. That may be the case for some applications, but it should never be the goal. End-to-end testing is not a bad way of testing; it’s just a very time-consuming one that you should use sparingly. Remember, contract-based testing is placed below end-to-end testing on the test pyramid. It does not replace it.

After you finish reading about the details of contract-based testing, ask yourself how many of your end-to-end tests you can replace with contract tests. I guesstimate that in my case we can replace somewhere between 70% and 100% of all end-to-end tests with contract tests.

Moving away from end-to-end

I’ve talked quite a bit about contract-based testing so far. Let’s explore whether we really need it to get rid of our end-to-end headaches.

Image by Vilas Pultoo

When we transition away from our end-to-end environment we get the situation above. We run all applications in their own isolated environment. Our applications still expect other applications to be there. We create stubs to fake the behaviour of our dependencies.
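As a minimal sketch of what such a stub could look like, here is a small Python example. The article does not describe the API between Amazing and Belle, so `get_product`, `product_label`, and the response fields are all hypothetical names chosen for illustration.

```python
from typing import Protocol


class AmazingClient(Protocol):
    """What Belle needs from Amazing (hypothetical API, not from the article)."""

    def get_product(self, product_id: str) -> dict: ...


class AmazingStub:
    """Canned stand-in for Amazing: no network and no shared environment."""

    def __init__(self, products: dict[str, dict]) -> None:
        self._products = products

    def get_product(self, product_id: str) -> dict:
        # Return the pre-recorded answer instead of calling the real provider.
        return self._products[product_id]


def product_label(client: AmazingClient, product_id: str) -> str:
    """Belle's logic under test: turn Amazing's response into a label."""
    product = client.get_product(product_id)
    return f"{product['name']} ({product['price']} EUR)"


def test_product_label() -> None:
    stub = AmazingStub({"p-1": {"name": "Teapot", "price": 12}})
    assert product_label(stub, "p-1") == "Teapot (12 EUR)"


test_product_label()
```

The test only exercises Belle's own code. Everything Amazing would normally do is replaced by data that Belle's team wrote themselves.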

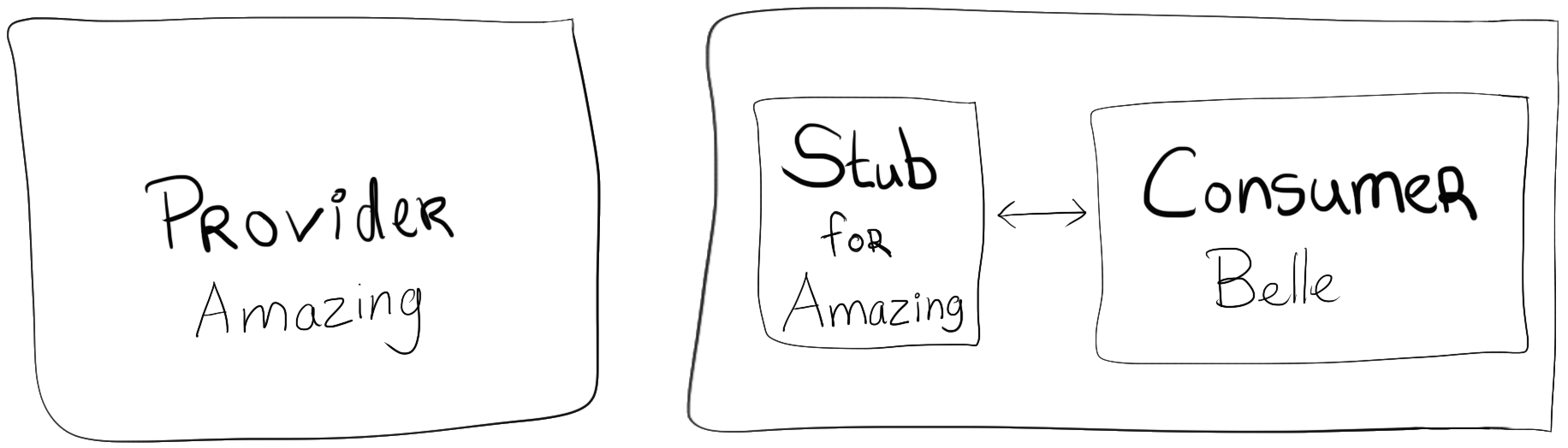

Image by Vilas Pultoo

This setup works great in the beginning, but after a few releases production will start failing left and right. We can’t rely on our tests to catch integration regressions, as nothing links our applications anymore. If an API provider introduces a breaking change to an API we depend on, our tests cannot catch the issue. This happens because there is no coupling between our applications during testing, and production will suffer because of it.

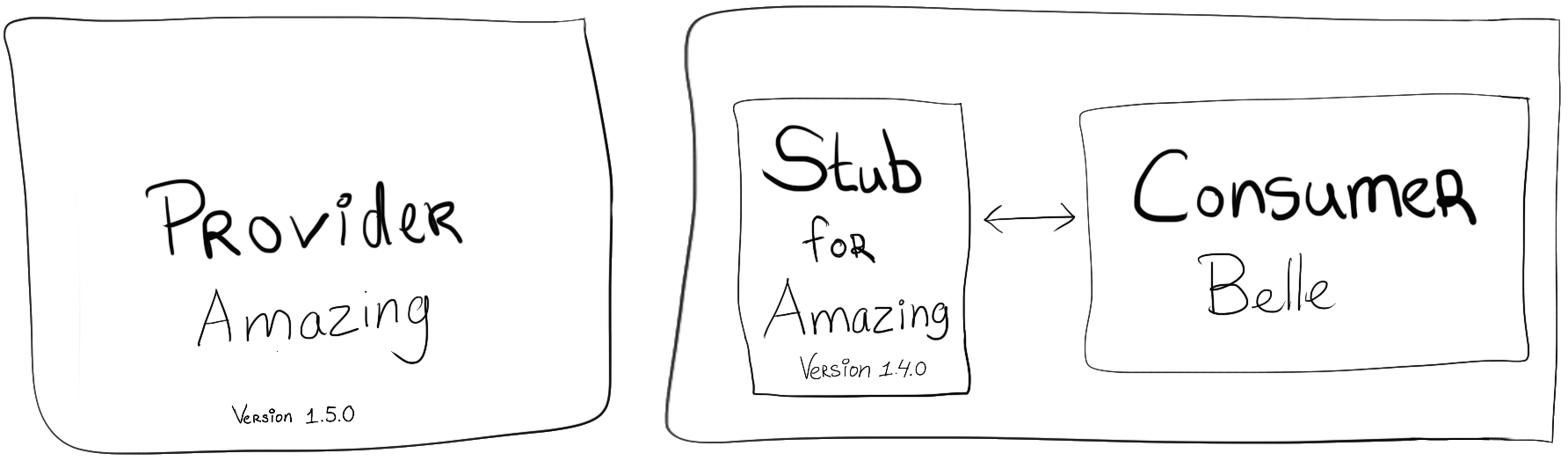

Image by Vilas Pultoo

Take, for example, a situation where the provider releases Amazing@1.5.0 while our stubs still behave as if Amazing is at version 1.4.0. This is called stub drift; the stubs have silently drifted from the reality of production. Stub drift leads to false positives: tests that pass even though the integration is broken in production.
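To make stub drift concrete, here is a hedged Python sketch. The article does not say what changed between Amazing 1.4.0 and 1.5.0, so the renamed field below is purely an assumption for illustration, as are the payloads and the `render_label` and `works_with` helpers.

```python
# Hypothetical payloads: the actual difference between the versions is unknown.
STUB_RESPONSE_V1_4_0 = {"name": "Teapot", "price": 12}
REAL_RESPONSE_V1_5_0 = {"name": "Teapot", "price_cents": 1200}  # assumed rename


def render_label(product: dict) -> str:
    """Belle's parsing logic, written against the 1.4.0 response shape."""
    return f"{product['name']} ({product['price']} EUR)"


def works_with(response: dict) -> bool:
    """Return True if Belle can handle the given provider response."""
    try:
        render_label(response)
        return True
    except KeyError:
        # A field Belle relies on is missing from the response.
        return False


print("stubbed test passes:", works_with(STUB_RESPONSE_V1_4_0))   # True
print("works in production:", works_with(REAL_RESPONSE_V1_5_0))   # False
```

The stubbed test stays green because it only ever sees the 1.4.0 shape, while the same code fails against what the provider actually returns after the release.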
