_Gradient descent is an optimization algorithm used to minimize a cost function (i.e. the error) parameterized by a model._

Gradient means the slope of a line or a surface, so this algorithm works by calculating slopes.

To understand Gradient Descent, we must first know what a cost function is.

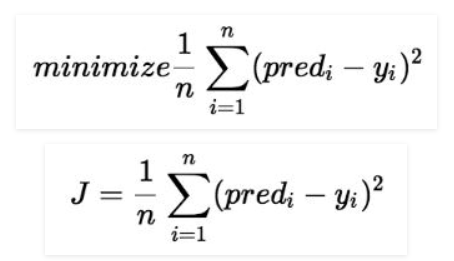

The cost function (J) of Linear Regression is the Root Mean Squared Error (RMSE) between the predicted y value (predicted) and the true y value (y).
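As a minimal sketch of this cost function, the snippet below computes RMSE in plain Python. The function name `rmse` and the sample values are assumptions made up for illustration; they are not taken from the source article.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between true and predicted y values."""
    n = len(y_true)
    # Square each prediction error so positive and negative errors do not cancel
    squared_errors = [(p - t) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / n)

# Hypothetical values, for illustration only
y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 5.0]
cost = rmse(y_true, y_pred)
print(f"RMSE = {cost:.4f}")
```

The closer the predictions are to the true values, the smaller this number gets; a perfect fit gives 0.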

Source: https://www.geeksforgeeks.org/ml-linear-regression/

For a Linear Regression model, our ultimate goal is to find the slope and intercept that give the minimum value of the cost function.

To become familiar with Linear Regression, please go through this article.

First, let us visualize what Gradient Descent looks like to understand it better.

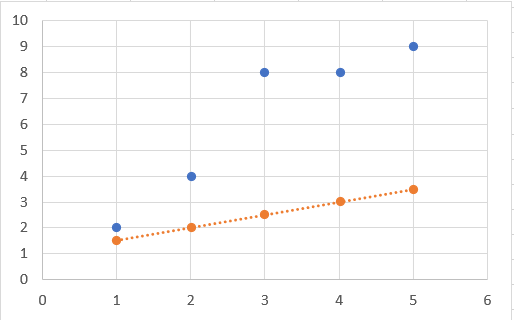

As Gradient Descent is an iterative algorithm, we fit various lines one after another to find the best-fit line.

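Each time, we get an error value: the sum of squared errors (SSE). Minimizing SSE gives the same line as minimizing RMSE, because RMSE is just the square root of SSE divided by the number of points.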
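The iteration described above can be sketched roughly as follows. This is only an illustration: the data, the starting values, the learning rate, and the number of steps are all assumptions chosen so that the example converges, and the helper names `sse` and `gradient_descent` are hypothetical.

```python
def sse(x, y, m, c):
    """Sum of squared errors of the line y = m*x + c."""
    return sum((yi - (m * xi + c)) ** 2 for xi, yi in zip(x, y))

def gradient_descent(x, y, m=0.0, c=0.0, learning_rate=0.01, steps=2000):
    """Repeatedly nudge m and c against the slope of the SSE surface."""
    history = []  # SSE of the line tried at each iteration
    for _ in range(steps):
        history.append(sse(x, y, m, c))
        # Partial derivatives of SSE with respect to m and c
        grad_m = sum(-2 * xi * (yi - (m * xi + c)) for xi, yi in zip(x, y))
        grad_c = sum(-2 * (yi - (m * xi + c)) for xi, yi in zip(x, y))
        # Step downhill: move opposite to the gradient
        m -= learning_rate * grad_m
        c -= learning_rate * grad_c
    return m, c, history

# Hypothetical data lying exactly on y = 2x + 1
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]
m, c, history = gradient_descent(x, y)
print(f"m = {m:.3f}, c = {c:.3f}")
print(f"first SSE = {history[0]:.3f}, last SSE = {history[-1]:.6f}")
```

Each entry in `history` is the error of one candidate line. A learning rate that is too large would make the error grow instead of shrink, so the value used here should be treated as specific to this toy data.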

If we plot all the error values on a graph, they form a parabola.
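To see the parabola in numbers, here is a small sketch that keeps the intercept fixed and tries a range of slopes. The data and the fixed intercept are assumptions for illustration. Note that the curve is a parabola when only one parameter varies; when slope and intercept both vary, the error surface is a bowl shape instead.

```python
def sse(x, y, m, c):
    """Sum of squared errors of the line y = m*x + c."""
    return sum((yi - (m * xi + c)) ** 2 for xi, yi in zip(x, y))

# Hypothetical data lying exactly on y = 2x + 1
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]

c_fixed = 1.0  # assumption: hold the intercept constant, vary only the slope
slopes = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
errors = [sse(x, y, m, c_fixed) for m in slopes]

for m, e in zip(slopes, errors):
    print(f"m = {m:.1f}  SSE = {e:6.1f}")

# The slope with the smallest error sits at the bottom of the parabola
best_slope = slopes[errors.index(min(errors))]
print("best slope:", best_slope)
```

The errors fall as the slope approaches the best value and rise again on the other side, which is the U shape that Gradient Descent walks down.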

What is the Relationship between Slope, Intercept and SSE? Why is Gradient Descent a Parabola?

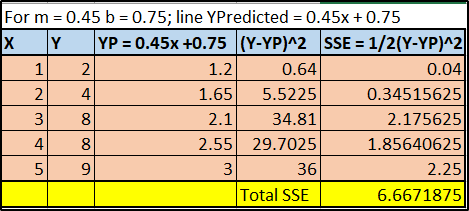

To answer these two questions, let’s look at the following example.

Made in MS Excel.

Notice that I took random values of m = 0.45 and c = 0.75.

For this slope and intercept, we get a new set of predicted Y values for the regression line.
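The same calculation can be sketched in Python. Only m = 0.45 and c = 0.75 come from the example; the x and y values below are hypothetical stand-ins, since the spreadsheet data is not reproduced in the text.

```python
m, c = 0.45, 0.75  # slope and intercept from the example above

# Hypothetical data, NOT the values from the Excel sheet
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [1.0, 2.0, 2.0, 3.0, 3.0]

# Predicted Y for each x on the line y = m*x + c
y_pred = [m * xi + c for xi in x]

# Error of this particular line: sum of squared differences
sse_value = sum((yi - pi) ** 2 for yi, pi in zip(y, y_pred))

for xi, yi, pi in zip(x, y, y_pred):
    print(f"x = {xi:.0f}  y = {yi:.2f}  predicted = {pi:.2f}")
print(f"SSE = {sse_value:.4f}")
```

Changing m or c and rerunning gives a different SSE, which is how each slope and intercept pair maps to one point on the error curve.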
