Regularization techniques are crucial for preventing your models from overfitting and enabling them to perform better on your validation and test sets. This guide provides a thorough overview, with code, of four key approaches you can use for regularization in TensorFlow.

By Ahmad Anis, Machine learning and Data Science Student.

Photo by Jungwoo Hong on Unsplash.

Regularization

According to Wikipedia,

In mathematics, statistics, and computer science, particularly in machine learning and inverse problems, regularization is the process of adding information in order to solve an ill-posed problem or to prevent overfitting.

In other words, we add extra information (for example, a constraint or penalty on the model's parameters) to make the problem well-posed and to keep the model from overfitting.
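One common way to add this information is a penalty term in the training objective. As a sketch in our own notation (the symbols $J$, $\lambda$, and $\Omega$ are not from the original text), the regularized loss adds a weighted penalty $\Omega$ to the ordinary loss $J$, with the squared L2 norm shown here as one example of $\Omega$:

$$
\tilde{J}(\mathbf{w}) = J(\mathbf{w}) + \lambda\,\Omega(\mathbf{w}), \qquad \lambda \ge 0,
\qquad \Omega(\mathbf{w}) = \tfrac{1}{2}\lVert \mathbf{w} \rVert_2^2 = \tfrac{1}{2}\sum_i w_i^2
$$

Setting $\lambda = 0$ recovers the unregularized loss; larger values of $\lambda$ push the optimizer toward smaller weights, trading some training-set fit for a simpler model.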

Overfitting means that our machine learning model performs extremely well on the data it was trained on but fails to generalize to new, unseen examples.

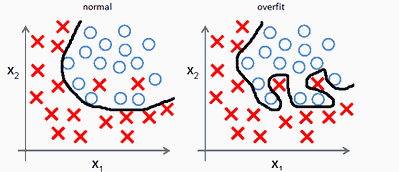

We can see overfitting in this simple example, where the model's fit is tied too tightly to the training examples. This results in good performance on the training set but poor performance on the dev/test sets.
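To make the picture concrete, here is a minimal pure-Python sketch of the same effect; it is not code from the original article. The toy data, the deterministic ±0.5 "noise", and the helper names `lagrange_predict`, `fit_line`, and `mse` are hypothetical choices for illustration. It compares a degree-7 polynomial that passes through all eight training points with a straight line that cannot memorize the noise.

```python
# Hypothetical toy data: the true relationship is y = x, and each training
# label gets a deterministic +/-0.5 "noise" so the example is reproducible.
x_train = [float(i) for i in range(8)]
y_train = [x + (0.5 if i % 2 == 0 else -0.5) for i, x in enumerate(x_train)]

# Unseen test points lie between the training points and carry no noise.
x_test = [i + 0.5 for i in range(7)]
y_test = list(x_test)


def lagrange_predict(xs, ys, x):
    """Evaluate the degree-(n-1) polynomial that passes through every (xs, ys) point."""
    total = 0.0
    for j, (xj, yj) in enumerate(zip(xs, ys)):
        basis = 1.0
        for k, xk in enumerate(xs):
            if k != j:
                basis *= (x - xk) / (xj - xk)
        total += yj * basis
    return total


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a * x + b; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return a, mean_y - a * mean_x


def mse(preds, targets):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


# Model 1: a degree-7 polynomial that memorizes all 8 training points (noise included).
poly_train = mse([lagrange_predict(x_train, y_train, x) for x in x_train], y_train)
poly_test = mse([lagrange_predict(x_train, y_train, x) for x in x_test], y_test)

# Model 2: a straight line, which is too simple to memorize the noise.
a, b = fit_line(x_train, y_train)
line_train = mse([a * x + b for x in x_train], y_train)
line_test = mse([a * x + b for x in x_test], y_test)

print(f"polynomial: train MSE={poly_train:.3f}, test MSE={poly_test:.3f}")
print(f"line:       train MSE={line_train:.3f}, test MSE={line_test:.3f}")
```

When run, the polynomial reaches zero training error but a much larger test error than the line: the flexible model has fit the noise in the training examples rather than the underlying trend, which is the train/test gap described above.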
