Disclaimer: My opinions are informed by my experience maintaining Cortex, an open source platform for machine learning engineering.

If you frequent any part of the tech internet, you’ve come across GPT-3, OpenAI’s new state-of-the-art language model. Hype cycles around new technology are nothing new (GPT-3’s predecessor, GPT-2, generated quite a few headlines as well), but GPT-3 is in a league of its own.

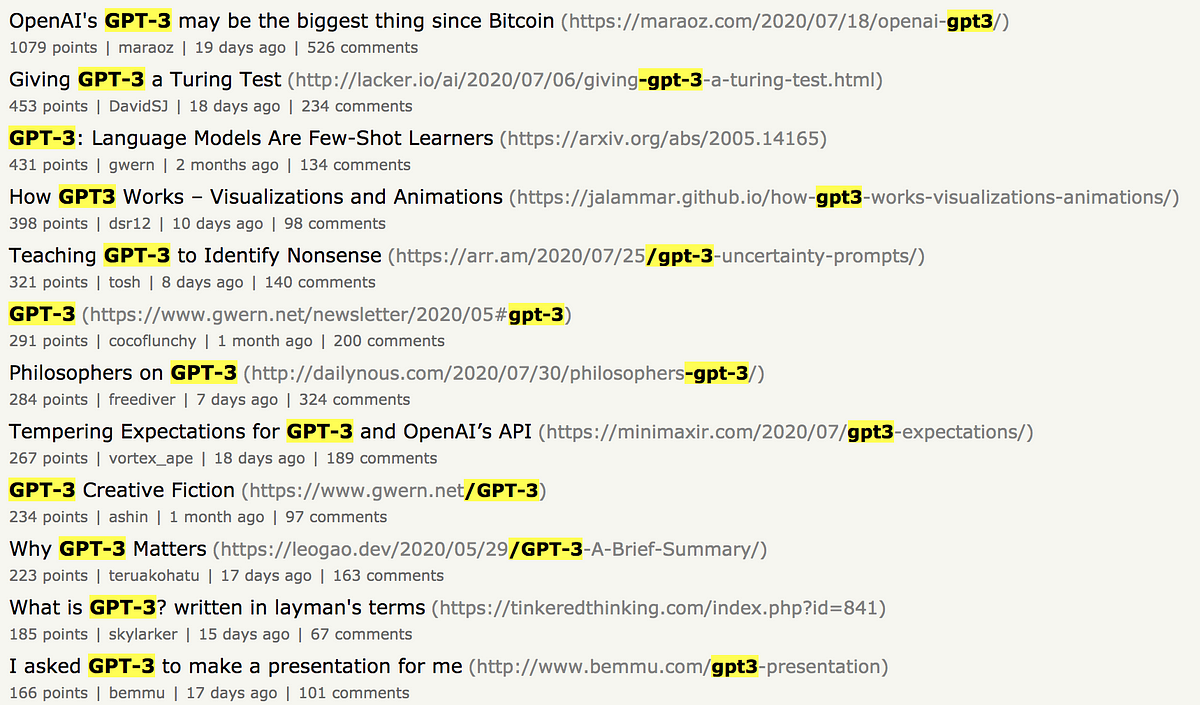

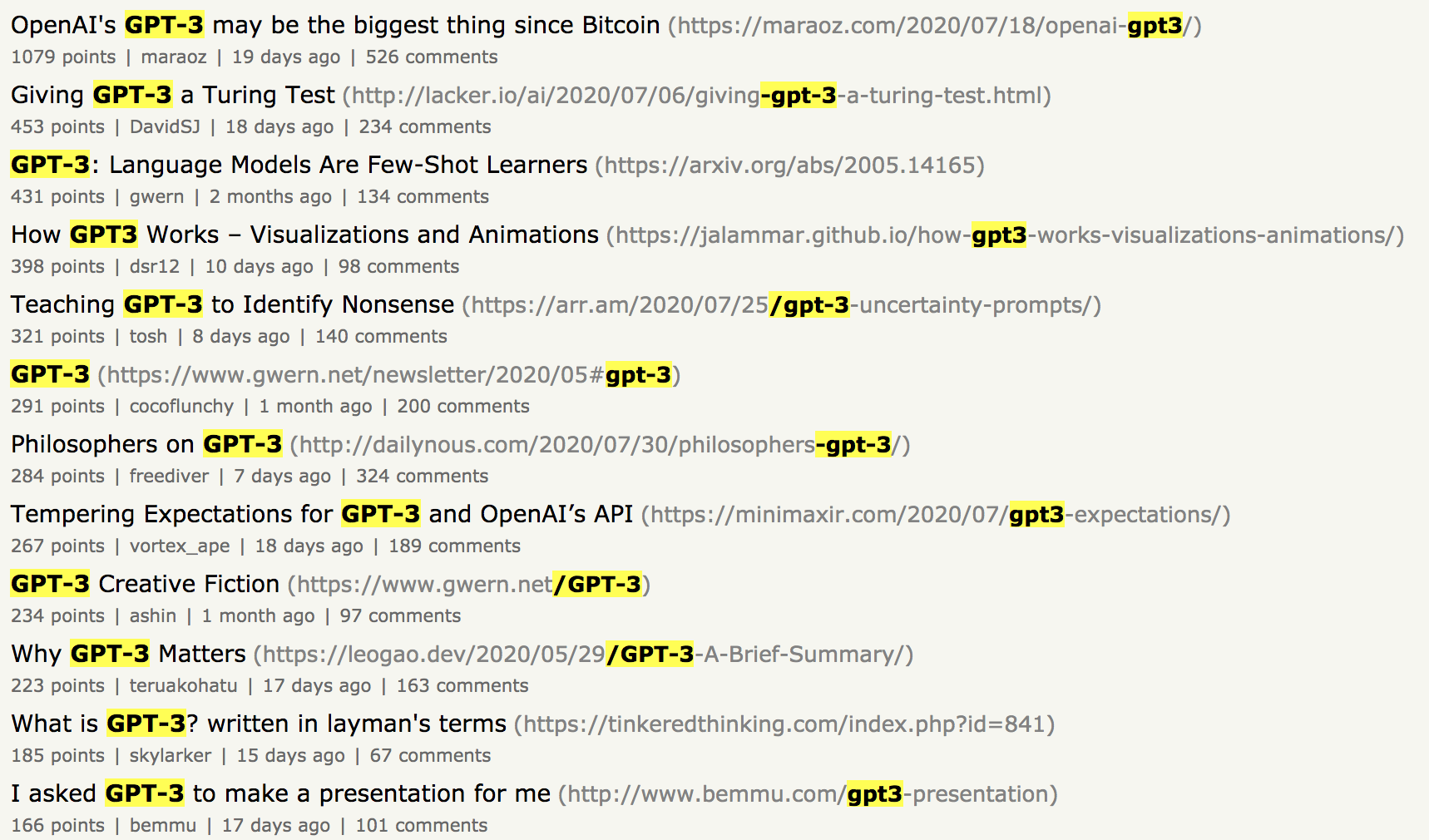

On Hacker News over the last couple of months, there have been dozens of hugely popular posts, all about GPT-3:

If you’re on Twitter, you’ve no doubt seen projects built on GPT-3 going viral, like this Apple engineer who used GPT-3 to write JavaScript using a specific 3D rendering library:

And of course, there have been plenty of “Is this the beginning of SkyNet?” articles written:

Nuanced and insightful journalism courtesy of CoinDesk

The excitement over GPT-3 is just one piece of a bigger trend. Every month, we see more and more new initiatives launch, all built on machine learning.

To understand why this is happening, and what the trend’s broader implications are, GPT-3 serves as a useful case study.

What’s so special about GPT-3?

The obvious take here is that GPT-3 is simply more powerful than any other language model, and that the increase in production machine learning lately can be chalked up to similar improvements across the field.

Undoubtedly, yes. This is a factor. But, and this is crucial, GPT-3 isn’t so popular just because it’s powerful. GPT-3 is ubiquitous because it is usable.
