The quick answer is that it pushes “intelligence” to the edge of the network. What we can make of that depends on how much we know about the different aspects of network architecture and its evolution. Here I try to give an overview of those aspects. To begin with, we will look at how computing architecture has evolved in terms of where the network-layer components are placed.

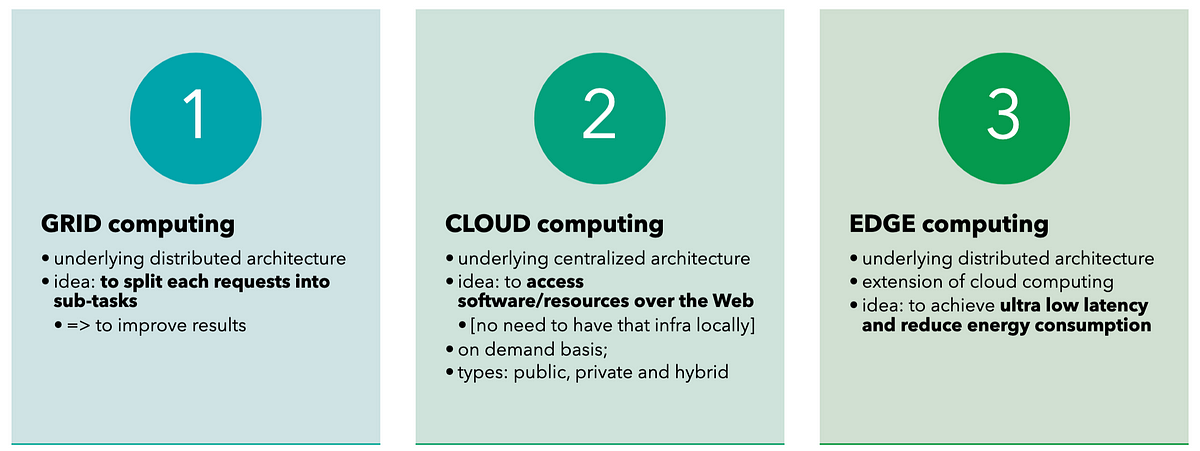

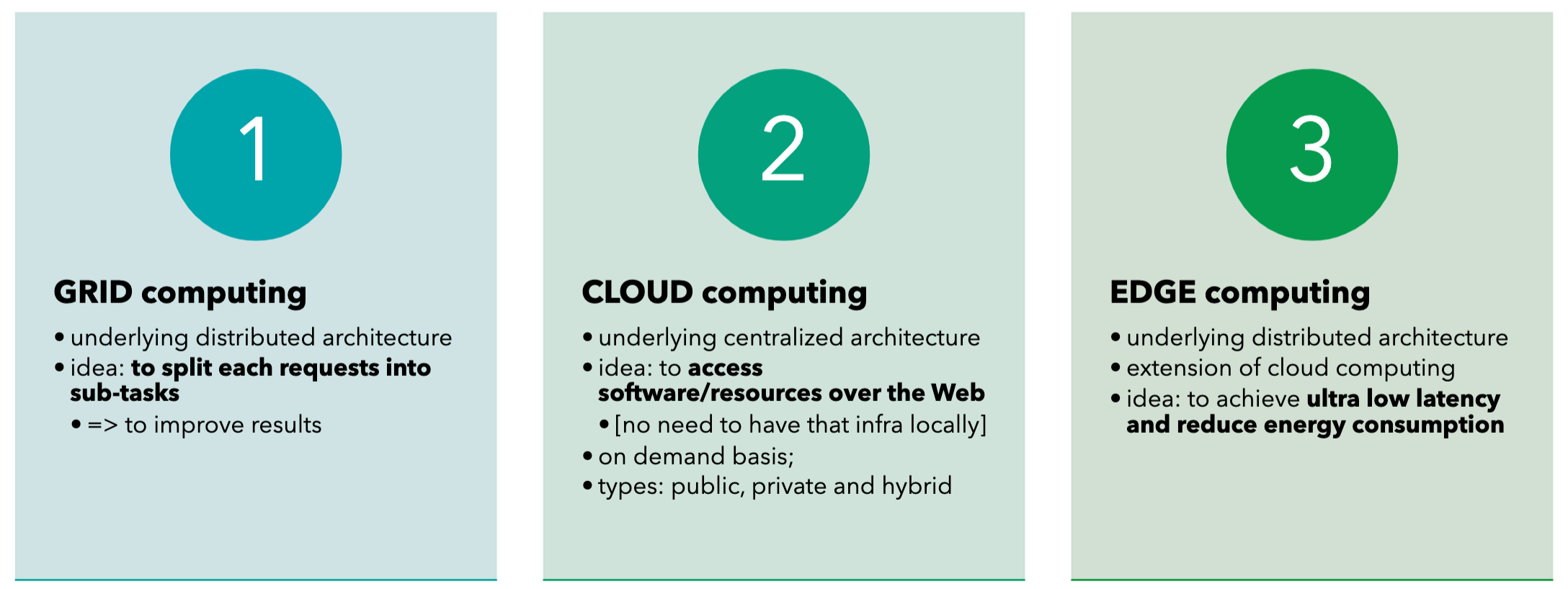

As the picture above shows, grid computing rose in the 1990s to cut computation time: a request is split into multiple sub-tasks, which are processed in parallel by a group of physically connected computers so that the user is served faster. In the 2000s, cloud computing took center stage, and users began accessing resources over the web instead of owning the entire infrastructure on their premises [owning the infrastructure can increase CapEx, and most of the resources end up underutilized]. The cloud addresses these issues: the user consumes resources on demand (pay only for what you use).
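The grid idea of splitting one request into sub-tasks and processing them in parallel can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the function names (`split_request`, `process_subtask`, `run_on_grid`) are hypothetical, the "work" is a toy sum of squares, and a local thread pool stands in for the group of physically connected computers a real grid would use.

```python
from concurrent.futures import ThreadPoolExecutor


def split_request(numbers, parts):
    """Split one large request into roughly equal sub-tasks."""
    size = max(1, -(-len(numbers) // parts))  # ceiling division
    return [numbers[i:i + size] for i in range(0, len(numbers), size)]


def process_subtask(chunk):
    """Toy work done by one (hypothetical) grid node: sum of squares."""
    return sum(x * x for x in chunk)


def run_on_grid(numbers, nodes=4):
    """Fan the sub-tasks out to the workers, then combine the partial results."""
    subtasks = split_request(numbers, nodes)
    # Assumption: each worker thread plays the role of a separate machine.
    with ThreadPoolExecutor(max_workers=nodes) as pool:
        partials = list(pool.map(process_subtask, subtasks))
    return sum(partials)
```

Calling `run_on_grid(list(range(10)), nodes=3)` splits the ten numbers into three chunks, processes each chunk on its own worker, and adds up the partial sums. On a real grid the chunks would travel over the network to different computers, which is where the speed-up comes from.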

From the 2010s to the present, we hear the term “edge computing” more and more often. The need arises as the number of cloud users grows [putting stress on bandwidth] and as critical fields such as IoT demand ultra-low latency. Hence the computing capacity of the cloud data center is extended toward users through an architecture called the “edge”. The edge introduces a new layer by placing infrastructure in close proximity to the user, so that users get a response without the delay caused by transmitting data over a long distance to the cloud. This edge layer has the capacity to do computation, storage, and caching for the user without reaching the cloud. This is what we mean by pushing intelligence to the edge of the network.
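To make the layered idea concrete, here is a minimal sketch of an edge node that answers from its own cache and only falls back to the cloud on a miss. The class names `CloudOrigin` and `EdgeNode` are hypothetical and exist only for this illustration; a real edge platform would also handle cache expiry, capacity limits, and local computation.

```python
class CloudOrigin:
    """Hypothetical distant cloud data center that holds the full data set."""

    def __init__(self, data):
        self.data = data
        self.requests = 0  # how many requests had to travel all the way here

    def fetch(self, key):
        self.requests += 1
        return self.data[key]


class EdgeNode:
    """Hypothetical edge layer placed close to the user, with a local cache."""

    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def get(self, key):
        """Return (value, served_from) for the requested key."""
        if key in self.cache:
            # Cache hit: the user is served without reaching the cloud.
            return self.cache[key], "edge"
        # Cache miss: go to the cloud once, then keep a copy at the edge.
        value = self.origin.fetch(key)
        self.cache[key] = value
        return value, "cloud"
```

In this sketch the first `get` for a key is served from the cloud and every later `get` for the same key is served from the edge, so the `requests` counter on the origin stays at one. That is the bandwidth and latency saving the edge layer is meant to provide.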

#ultra-low-latency #edge-computing #grid-computing #cloud