This article assumes some basic knowledge of recurrent neural networks (RNNs) and gated recurrent units (GRUs). I’ll give a brief intro to these concepts in this article.

In deep learning, sequence-to-sequence models have achieved a lot of success in machine translation. In this article, we will be building a Spanish-to-English translator.

**Remember: any bump in the animations refers to a mathematical operation being computed behind the scenes.** Also, if you come across any French words in the animations, consider them Spanish words and continue (I tried to collect the best animations I could from the web ( ͡° ͜ʖ ͡°)).

Seq2seq visualization

A brief intro to RNNs:

Each RNN unit takes an input vector (input #1) and the previous hidden state vector (hidden state #0) and uses them to compute the current hidden state vector (hidden state #1) and the output vector (output #1) of that unit. We chain many of these units together, one per element of the sequence, to build our model. The first unit has no previous state, so its hidden state is initialized with zeros. In the coming animations, you will encounter encoder and decoder parts; each of them is built from these RNN units (GRUs in our example; LSTMs would work as well, but GRUs suffice here).
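To make the unit description concrete, here is a minimal sketch of one GRU unit in plain Python (no deep learning framework). It assumes the common update-gate/reset-gate formulation and uses small random, untrained weights; `GRUCell` and `run_gru` are hypothetical helper names for illustration, not part of any library. It shows the two ideas from the paragraph: each step maps (input vector, previous hidden state) to a new hidden state, and the first step starts from a zero vector.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class GRUCell:
    """A toy GRU unit with random (untrained) weights; hypothetical helper."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        # One input matrix (W), one recurrent matrix (U) and one bias (b) per gate:
        # z = update gate, r = reset gate, n = candidate state.
        self.W = {g: random_matrix(hidden_size, input_size, rng) for g in "zrn"}
        self.U = {g: random_matrix(hidden_size, hidden_size, rng) for g in "zrn"}
        self.b = {g: [0.0] * hidden_size for g in "zrn"}

    def step(self, x, h_prev):
        """One time step: (input vector, previous hidden state) -> new hidden state."""
        Wz, Uz = matvec(self.W["z"], x), matvec(self.U["z"], h_prev)
        Wr, Ur = matvec(self.W["r"], x), matvec(self.U["r"], h_prev)
        z = [sigmoid(a + c + bb) for a, c, bb in zip(Wz, Uz, self.b["z"])]
        r = [sigmoid(a + c + bb) for a, c, bb in zip(Wr, Ur, self.b["r"])]
        # The reset gate scales how much of the previous state feeds the candidate.
        Wn, Un = matvec(self.W["n"], x), matvec(self.U["n"], h_prev)
        n = [math.tanh(a + ri * c + bb) for a, ri, c, bb in zip(Wn, r, Un, self.b["n"])]
        # The update gate interpolates between the candidate and the previous state.
        return [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h_prev)]


def run_gru(cell, inputs):
    """Chain the unit over a sequence, starting from an all-zero hidden state."""
    h = [0.0] * cell.hidden_size  # the first unit has no previous state
    outputs = []
    for x in inputs:
        h = cell.step(x, h)
        outputs.append(h)  # for a GRU, the output at each step is the new hidden state
    return outputs, h


cell = GRUCell(input_size=3, hidden_size=4)
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # three toy one-hot inputs
outputs, final_state = run_gru(cell, sequence)
print(len(outputs), len(final_state))  # 3 4
```

Note that in a GRU the "output" of a unit is simply its new hidden state; frameworks usually add a separate output layer on top when they need vocabulary scores.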

Let’s begin with the seq2seq model:

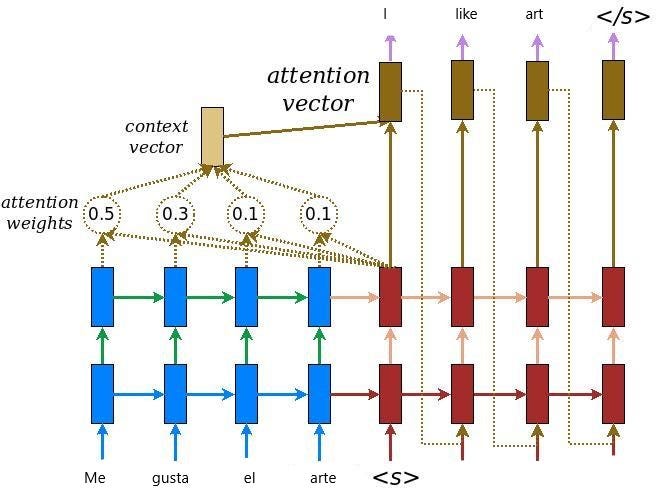

A seq2seq model takes a sequence of items as input and outputs another sequence of items. For example, our model would take the Spanish sentence “Me gusta el arte” as input and output the English translation “I like art”.
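The encoder-decoder flow can be sketched as a minimal, untrained toy under several assumptions: words are one-hot vectors over tiny hypothetical vocabularies, a plain tanh RNN step stands in for the GRU units, the encoder’s last hidden state is the context vector handed to the decoder, and the decoder picks the highest-scoring word at each step (greedy decoding) until it emits `<eos>`. `TinySeq2Seq` and its methods are hypothetical names. Because the weights are random, the “translation” it prints is arbitrary; only training on sentence pairs would make it output “I like art”.

```python
import math
import random


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def one_hot(index, size):
    v = [0.0] * size
    v[index] = 1.0
    return v


class TinySeq2Seq:
    """Untrained encoder-decoder; a plain tanh RNN stands in for the GRU units."""

    def __init__(self, src_vocab, tgt_vocab, hidden_size=8, seed=0):
        rng = random.Random(seed)
        self.src_vocab, self.tgt_vocab = src_vocab, tgt_vocab
        self.hidden_size = hidden_size
        self.enc_W = rand_matrix(hidden_size, len(src_vocab), rng)
        self.enc_U = rand_matrix(hidden_size, hidden_size, rng)
        self.dec_W = rand_matrix(hidden_size, len(tgt_vocab), rng)
        self.dec_U = rand_matrix(hidden_size, hidden_size, rng)
        self.out_V = rand_matrix(len(tgt_vocab), hidden_size, rng)  # hidden -> word scores

    @staticmethod
    def rnn_step(W, U, x, h):
        return [math.tanh(a + b) for a, b in zip(matvec(W, x), matvec(U, h))]

    def encode(self, words):
        h = [0.0] * self.hidden_size  # no previous state before the first word
        for w in words:
            x = one_hot(self.src_vocab.index(w), len(self.src_vocab))
            h = self.rnn_step(self.enc_W, self.enc_U, x, h)
        return h  # the encoder's last hidden state is the single context vector

    def translate(self, words, max_len=10):
        h = self.encode(words)  # the decoder starts from the context vector
        token = "<sos>"
        result = []
        for _ in range(max_len):
            x = one_hot(self.tgt_vocab.index(token), len(self.tgt_vocab))
            h = self.rnn_step(self.dec_W, self.dec_U, x, h)
            scores = matvec(self.out_V, h)
            # Greedy decoding: feed the best-scoring word back in as the next input.
            token = self.tgt_vocab[max(range(len(scores)), key=scores.__getitem__)]
            if token == "<eos>":
                break
            result.append(token)
        return result


src_vocab = ["me", "gusta", "el", "arte"]
tgt_vocab = ["<sos>", "<eos>", "i", "like", "art"]
model = TinySeq2Seq(src_vocab, tgt_vocab)
print(model.translate(["me", "gusta", "el", "arte"]))  # untrained: arbitrary words
```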

A high-level seq2seq attention model

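The attention mechanism was introduced to help neural machine translation (NMT) models memorize long source sentences. Rather than building a single context vector out of the encoder’s last hidden state, attention’s secret sauce is to create shortcuts between the context vector and the entire source input: at every decoding step, the context vector is recomputed as a weighted sum of all the encoder’s hidden states, with weights that reflect how relevant each source word is to the word currently being generated.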
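Here is a minimal sketch of that shortcut in plain Python. It uses dot-product scoring (Luong-style) because it is the shortest to write; the original Bahdanau attention scores positions with a small feed-forward network instead. The encoder and decoder states below are made-up 2-d vectors, and `attention_context` is a hypothetical helper name.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(decoder_state, encoder_states):
    """Dot-product attention over all encoder hidden states."""
    # 1. Score every source position against the current decoder state.
    scores = [sum(d * e for d, e in zip(decoder_state, enc)) for enc in encoder_states]
    # 2. Normalise the scores into weights that sum to 1.
    weights = softmax(scores)
    # 3. The context vector is a weighted sum of *all* encoder states,
    #    not just the last one.
    dim = len(decoder_state)
    context = [sum(w * enc[i] for w, enc in zip(weights, encoder_states)) for i in range(dim)]
    return context, weights


# Hypothetical encoder states for "Me gusta el arte" (one 2-d vector per word).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.0, 2.0]]
decoder_state = [0.0, 1.0]
context, weights = attention_context(decoder_state, encoder_states)
print([round(w, 3) for w in weights])  # the state for "arte" gets the largest weight
```

Because the weights are recomputed for every decoder step, each generated English word can draw on a different part of the Spanish sentence.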
