In this post, you will learn how modern face detection algorithms work under the hood, the privacy concerns raised by this technology, and finally how to use the PixLab API to detect faces, extract their coordinates (i.e. bounding boxes), and apply a blur filter to each detected face. Let’s dive in!

Face Detection Algorithms

Face detection has largely been considered a solved problem since the early 2000s, but it still faces a few challenges, including detecting tiny or non-frontal faces in real time on the cheap CPUs of low-end mobile and IoT devices.

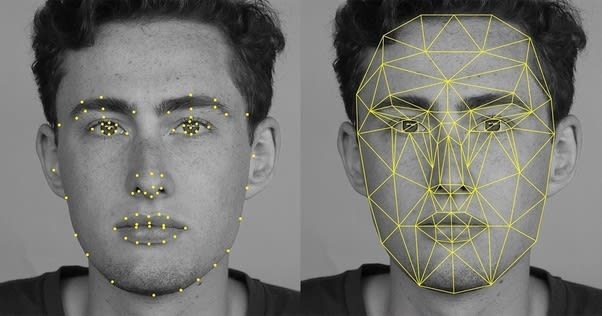

The most widely used technique is a combination of Histogram of Oriented Gradients (HOG for short) features and a Support Vector Machine (SVM) classifier, which achieves mediocre to relatively good detection rates given a good-quality image.
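To make the HOG/SVM idea more concrete, here is a minimal pure-Python sketch of the two building blocks: an orientation histogram for a single image cell, and a linear SVM decision value. The function names, the 9-bin layout, and any weights passed in are assumptions made for illustration. A real detector computes many cells, groups them into normalized blocks, and uses weights learned from training data.

```python
import math


def hog_cell_histogram(cell, bins=9):
    """Histogram of unsigned gradient orientations (0 to 180 degrees) for one cell.

    `cell` is a list of rows of grayscale intensities. Each interior pixel votes
    for the bin of its gradient angle, weighted by its gradient magnitude.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    height, width = len(cell), len(cell[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # horizontal central difference
            gy = cell[y + 1][x] - cell[y - 1][x]  # vertical central difference
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned orientation
            hist[int(angle // bin_width) % bins] += magnitude
    # L2-normalize so the descriptor is less sensitive to lighting changes.
    norm = math.sqrt(sum(v * v for v in hist)) or 1.0
    return [v / norm for v in hist]


def linear_svm_score(features, weights, bias):
    """Decision value of a linear SVM; in this sketch, positive means 'face'."""
    return sum(f * w for f, w in zip(features, weights)) + bias


# A 4x4 cell containing a vertical edge (dark left half, bright right half).
vertical_edge = [[0, 0, 255, 255] for _ in range(4)]
descriptor = hog_cell_histogram(vertical_edge)
```

In a full pipeline, the descriptors of all cells in a sliding window would be concatenated and passed to `linear_svm_score` with trained weights, and windows with a positive score would be reported as faces.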

PixLab, on the other hand, developed a brand new architecture that targets single-class object detection and is well suited to face detection. This detector is based on an architecture named RealNets and uses a set of decision trees organized as a classification cascade. It runs in real time on the CPU of cheap Android devices and easily outperforms the HOG/SVM combination.
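The following is a generic sketch of how a classification cascade of decision trees can reject most candidate windows early, which is what makes this family of detectors cheap to run. It is not the RealNets implementation: the pixel-comparison node test, the `(tree, threshold)` stage layout, and the toy stages are all assumptions chosen for illustration.

```python
def evaluate_tree(tree, window):
    """Walk one decision tree down to a leaf and return the leaf's score.

    Internal nodes are dicts that compare two pixels of the window (a
    hypothetical feature choice); leaves are plain floats.
    """
    node = tree
    while isinstance(node, dict):
        (y1, x1), (y2, x2) = node["pixels"]
        branch = "left" if window[y1][x1] <= window[y2][x2] else "right"
        node = node[branch]
    return node


def cascade_classify(stages, window):
    """Return (is_face, stages_evaluated) for one candidate window.

    Each stage is (tree, threshold). Scores accumulate, and the window is
    rejected as soon as the running score drops below a stage's threshold.
    """
    score = 0.0
    for index, (tree, threshold) in enumerate(stages, start=1):
        score += evaluate_tree(tree, window)
        if score < threshold:
            return False, index  # early exit: most non-face windows stop here
    return True, len(stages)


# Toy, hand-written stages (not trained): "top row darker than bottom row".
toy_stages = [
    ({"pixels": ((0, 0), (1, 0)), "left": 1.0, "right": -1.0}, 0.0),
    ({"pixels": ((0, 1), (1, 1)), "left": 1.0, "right": -1.0}, 1.5),
]
face_like = [[10, 20], [200, 210]]
background = [[200, 210], [10, 20]]
```

With these toy stages, `cascade_classify(toy_stages, face_like)` passes both stages, while `cascade_classify(toy_stages, background)` is rejected after the first one. That early rejection is the point of the design: the detector spends almost no time on the vast majority of windows that clearly contain no face.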

The detector already ships with the open source SOD Computer Vision Library (an OpenCV alternative) and has even been ported to JavaScript/WebAssembly, so you can perform real-time face detection in your browser. You can play with the detector in your browser, as well as find more information about the WebAssembly port, via this blog post. Let’s talk about the RealNets architecture in the next section.
