One of the painful things about training a neural network is the sheer number of hyperparameters we have to deal with. For example:

- Learning rate

- Momentum, or the hyperparameters of the Adam optimization algorithm

- Number of layers

- Number of hidden units

- Mini-batch size

- Activation function

- etc.

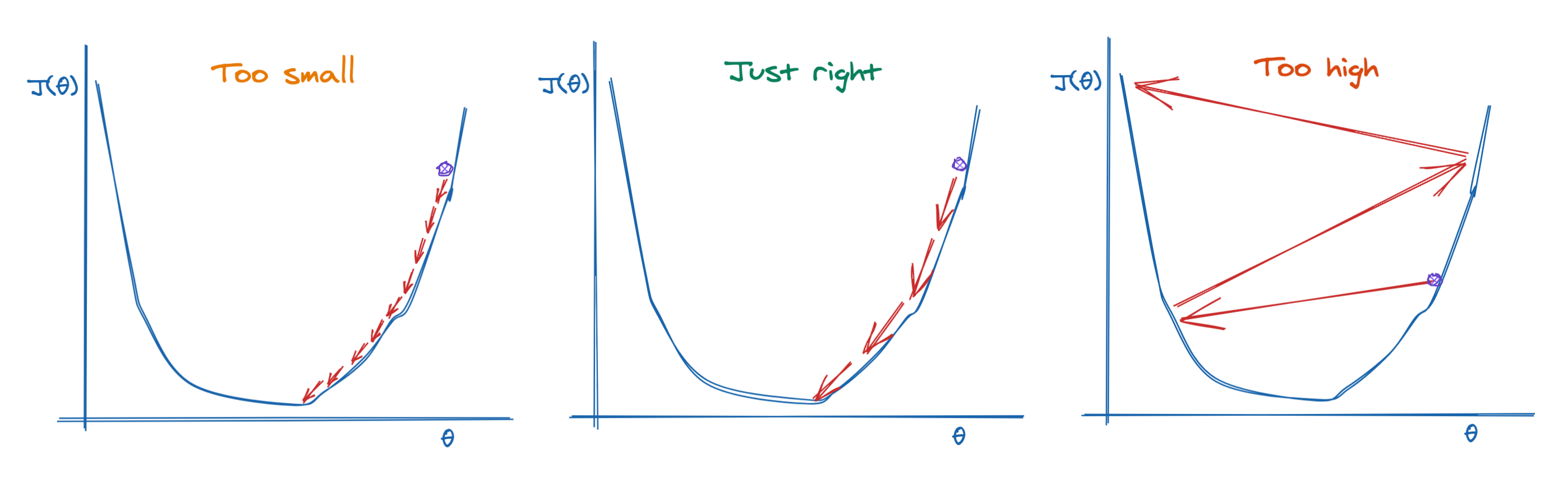

Among them, the most important hyperparameter is the learning rate. If your learning rate is set too low, training will progress very slowly because you are making very tiny updates to the weights in your network. However, if your learning rate is set too high, it can cause undesirable divergent behavior in your loss function.
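To make the effect concrete, here is a minimal sketch that runs plain gradient descent on the toy loss f(w) = w², whose gradient is 2w. The toy loss, the starting weight, the helper name `run_gradient_descent`, and the three learning rates are illustrative assumptions, not part of the Fashion MNIST model used later.

```python
def run_gradient_descent(learning_rate, steps=20, w=1.0):
    """Minimize the toy loss f(w) = w**2 and return the final weight."""
    for _ in range(steps):
        gradient = 2 * w                  # derivative of w**2
        w = w - learning_rate * gradient  # standard gradient descent update
    return w


# Hypothetical learning rates chosen only to show the three regimes.
final_low = run_gradient_descent(learning_rate=0.01)   # too low: barely moves
final_good = run_gradient_descent(learning_rate=0.4)   # reasonable: converges
final_high = run_gradient_descent(learning_rate=1.1)   # too high: diverges

print("too low :", final_low)
print("good    :", final_good)
print("too high:", final_high)
```

With the low rate the weight is still far from the minimum at 0 after 20 steps, with the moderate rate it is essentially at 0, and with the high rate each update overshoots and the weight grows in magnitude.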

Image created by author using https://excalidraw.com/

When training a neural network, it is often useful to reduce the learning rate as training progresses. This can be done with learning rate schedules or adaptive learning rate methods. In this article, we will focus on adding and customizing learning rate schedules in our machine learning model and look at examples of how to do this in practice with Keras and TensorFlow 2.0.

Learning Rate Schedules

Learning rate schedules adjust the learning rate during training, typically by reducing it according to a predefined schedule. Popular learning rate schedules include:

- Constant learning rate

- Time-based decay

- Step decay

- Exponential decay
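As a rough sketch, the schedules listed above can each be written as a function of the epoch index. The formulas below are the commonly used forms of each schedule, and the function names, parameter names, and default values (`lr0`, `decay`, `drop`, `epochs_drop`, `k`) are assumptions made for illustration rather than settings taken from this article.

```python
import math


def constant(epoch, *, lr0=0.01):
    """Constant schedule: the learning rate never changes."""
    return lr0


def time_based_decay(epoch, *, lr0=0.01, decay=0.01):
    """Time-based decay: lr = lr0 / (1 + decay * epoch)."""
    return lr0 / (1 + decay * epoch)


def step_decay(epoch, *, lr0=0.01, drop=0.5, epochs_drop=10):
    """Step decay: multiply the rate by `drop` every `epochs_drop` epochs."""
    return lr0 * drop ** math.floor(epoch / epochs_drop)


def exponential_decay(epoch, *, lr0=0.01, k=0.1):
    """Exponential decay: lr = lr0 * exp(-k * epoch)."""
    return lr0 * math.exp(-k * epoch)


# Print the learning rate each schedule produces at a few epochs.
for epoch in (0, 10, 20, 50):
    print(
        epoch,
        constant(epoch),
        time_based_decay(epoch),
        step_decay(epoch),
        exponential_decay(epoch),
    )
```

In Keras, a function that maps an epoch index to a learning rate is the kind of callable that `tf.keras.callbacks.LearningRateScheduler` expects, so a one-argument wrapper such as `lambda epoch: step_decay(epoch)` should be usable there. The extra parameters are keyword-only so that a second positional argument cannot silently override `lr0`.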

For demonstration purposes, we will build an image classifier for Fashion MNIST, a dataset of 70,000 grayscale images of 28-by-28 pixels in 10 classes.

Please check out my GitHub repo for the source code.
