Apple’s WWDC 2020 (digital-only) event kicked off with a bang. There were plenty of surprises (read: Apple’s own silicon chips for Macs), along with updates across SwiftUI, ARKit, PencilKit, Create ML, and Core ML. But the one that stood out for me was computer vision. Apple’s Vision framework got bolstered with a bunch of exciting new APIs that perform some complex and critical computer vision algorithms in a fairly straightforward way. Starting with iOS 14, the Vision framework supports Hand and Body Pose Estimation, Optical Flow, Trajectory Detection, and Contour Detection. While we’ll provide an in-depth look at each of these some other time, right now let’s dive deeper into one particularly interesting addition: the contour detection Vision request.

Our Goal

- Understanding Vision’s contour detection request.
- Running it in an iOS 14 SwiftUI application to detect contours along coins.
- Simplifying the contours by leveraging Core Image filters to pre-process the images before passing them on to the Vision request. We’ll look to mask the images in order to reduce texture noise.
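As a rough illustration of what Core Image pre-processing can look like, the sketch below raises contrast with `CIFilter.colorControls()` and then softens fine texture with `CIFilter.gaussianBlur()`. The filter choice, the helper name `preprocess(_:contrast:blurRadius:)`, and its default values are assumptions made for illustration; this is not the masking step the article builds toward.

```swift
import CoreGraphics
import CoreImage
import CoreImage.CIFilterBuiltins

/// Hypothetical pre-processing pass (assumed filters, not the article's masking step):
/// raise contrast, then blur to smooth out fine texture before contour detection.
func preprocess(_ input: CGImage, contrast: Float = 1.5, blurRadius: Float = 3) -> CGImage? {
    let ciImage = CIImage(cgImage: input)

    // Step 1: increase contrast so object edges stand out from the background.
    let contrastFilter = CIFilter.colorControls()
    contrastFilter.inputImage = ciImage
    contrastFilter.contrast = contrast

    // Step 2: blur away small texture details. Clamping first avoids dark,
    // semi-transparent fringes where the blur samples beyond the image border.
    let blurFilter = CIFilter.gaussianBlur()
    blurFilter.inputImage = contrastFilter.outputImage?.clampedToExtent()
    blurFilter.radius = blurRadius

    guard let output = blurFilter.outputImage else { return nil }

    // Clamping makes the extent infinite, so crop back to the original frame.
    return CIContext().createCGImage(output, from: ciImage.extent)
}
```

The resulting `CGImage` can be handed to a Vision request handler in place of the original; tuning `contrast` and `blurRadius` trades away texture noise against edge sharpness.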

Vision Contour Detection

Contour detection detects outlines of the edges in an image. Essentially, it joins all the continuous points that have the same color or intensity. This computer vision task is useful for shape analysis and edge detection, and it helps in scenarios where you need to find similar types of objects in an image.

Coin detection and segmentation is a fairly common use case in OpenCV, and now, by using Vision’s new VNDetectContoursRequest, we can do the same in our iOS applications easily (without the need for third-party libraries).

To process images or frames, the Vision framework requires a VNRequest, which is passed into an image request handler (VNImageRequestHandler) or a sequence request handler (VNSequenceRequestHandler). What we get back are VNObservation results. You can use the respective VNObservation subclass based on the type of request you’re running. In our case, we’ll use VNContoursObservation, which provides all the detected contours from the image. We can inspect the following properties from the VNContoursObservation:

- [normalizedPath](https://developer.apple.com/documentation/vision/vncontoursobservation/3548363-normalizedpath): The path of the detected contours in normalized coordinates (0 to 1, with the origin at the lower left). We’ll have to convert it into UIKit coordinates, as we’ll see shortly.
- contourCount: The number of detected contours returned by the Vision request.
- topLevelContours: An array of VNContour objects that aren’t enclosed inside any other contour.
- contour(at:): Using this function, we can access any contour, including nested child contours, by passing its index or its IndexPath.
- confidence: The level of confidence in the overall VNContoursObservation.
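To make the request, handler, and observation flow concrete, here is a minimal sketch, assuming you already have a `CGImage` to work with. The helper names `detectContours(in:)`, `summarize(_:)`, and `imagePath(from:imageSize:)` are hypothetical, and the `contrastAdjustment` value is only illustrative. The last helper shows one way to map `normalizedPath` from Vision’s normalized space (0 to 1, lower-left origin) into pixel coordinates with a top-left origin, which is what UIKit drawing expects.

```swift
import CoreGraphics
import Vision

/// Hypothetical helper: runs a contour request on a still image and
/// returns the VNContoursObservation it produces (if any).
func detectContours(in cgImage: CGImage) throws -> VNContoursObservation? {
    let request = VNDetectContoursRequest()
    // Illustrative value: how strongly Vision boosts contrast before detecting.
    request.contrastAdjustment = 2.0

    // A single still image goes through VNImageRequestHandler; a series of
    // frames would typically go through VNSequenceRequestHandler instead.
    let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
    try handler.perform([request])

    return request.results?.first as? VNContoursObservation
}

/// Hypothetical helper: prints the observation properties listed above.
func summarize(_ observation: VNContoursObservation) {
    print("contourCount:", observation.contourCount)
    print("topLevelContours:", observation.topLevelContours.count)
    print("confidence:", observation.confidence)
}

/// Hypothetical helper: maps a normalized Vision path (0...1, lower-left origin)
/// into pixel coordinates with a top-left origin.
func imagePath(from normalizedPath: CGPath, imageSize: CGSize) -> CGPath? {
    // Scale x by the width and flip y: y' = height - y * height.
    var transform = CGAffineTransform(translationX: 0, y: imageSize.height)
        .scaledBy(x: imageSize.width, y: -imageSize.height)
    return normalizedPath.copy(using: &transform)
}
```

For example, `imagePath(from: observation.normalizedPath, imageSize: CGSize(width: cgImage.width, height: cgImage.height))` yields a path that can be stroked directly over the original image.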

Note: Using _topLevelContours_ and accessing child contours is handy when you need to modify or remove them from the final observation.
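The note above can be illustrated with a small recursive walk over the contour hierarchy. This is a sketch; the function names `contourTreeSize(_:)` and `printHierarchy(of:)` are hypothetical. It relies on `VNContour`’s `childContours`, `indexPath`, and `pointCount`, and on `contour(at:)` with an `IndexPath` to jump straight to a nested contour.

```swift
import Foundation
import Vision

/// Hypothetical helper: counts a contour plus all of its nested children.
func contourTreeSize(_ contour: VNContour) -> Int {
    contour.childContours.reduce(1) { $0 + contourTreeSize($1) }
}

/// Hypothetical helper: prints every contour, indented by nesting depth.
func printHierarchy(of observation: VNContoursObservation) {
    func visit(_ contour: VNContour, depth: Int) {
        let indent = String(repeating: "  ", count: depth)
        print("\(indent)\(contour.indexPath): \(contour.pointCount) points")
        for child in contour.childContours {
            visit(child, depth: depth + 1)
        }
    }

    // Start from the contours that are not enclosed by any other contour.
    for contour in observation.topLevelContours {
        visit(contour, depth: 0)
    }

    // Direct access by hierarchy path: the first child of the first top-level
    // contour. contour(at:) throws if that path does not exist.
    if let nested = try? observation.contour(at: IndexPath(indexes: [0, 0])) {
        print("first nested contour:", nested.indexPath)
    }
}
```

Because `contourCount` counts every contour, summing `contourTreeSize(_:)` over `topLevelContours` should match it, which is a handy sanity check when filtering contours out.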

Now that we’ve got an idea of the Vision contour detection request, let’s explore how it works in an iOS 14 application.


Getting Started

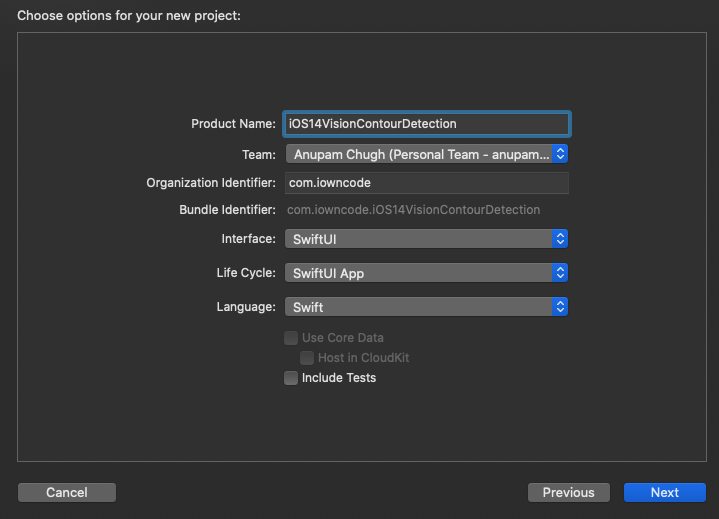

To start off, you’ll need Xcode 12 beta at a bare minimum. That’s about it, as you can run Vision image requests directly in your SwiftUI Previews. Create a new SwiftUI application in the Xcode wizard and notice the new SwiftUI App lifecycle:

You’ll be greeted with the following code once you complete the project setup:

```swift
import SwiftUI

@main
struct iOS14VisionContourDetection: App {
    var body: some Scene {
        WindowGroup {
            ContentView()
        }
    }
}
```

_Note: Starting in iOS 14, SwiftUI-based applications can adopt the SwiftUI App protocol in place of a SceneDelegate. The @main attribute on top of the struct indicates that it’s the starting point of the application._
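Since Vision image requests run directly in SwiftUI Previews, a quick way to experiment is a small view that runs the contour request and overlays the result. This is a minimal sketch under stated assumptions: `ContourOverlayView` and the asset name `"coins"` are hypothetical, the request runs synchronously on the main thread for simplicity, and the image is assumed to have `.up` orientation, because a `CGImage` passed to `VNImageRequestHandler(cgImage:options:)` carries no orientation metadata.

```swift
import SwiftUI
import UIKit
import Vision

/// Hypothetical demo view: detects contours in `image` and strokes them on top.
struct ContourOverlayView: View {
    let image: UIImage
    @State private var normalizedPath: CGPath?

    var body: some View {
        Image(uiImage: image)
            .resizable()
            .aspectRatio(contentMode: .fit)
            .overlay(
                GeometryReader { geometry in
                    // Map Vision's normalized, lower-left-origin path onto the view's frame.
                    Path(normalizedPath ?? CGMutablePath())
                        .applying(
                            CGAffineTransform(translationX: 0, y: geometry.size.height)
                                .scaledBy(x: geometry.size.width, y: -geometry.size.height)
                        )
                        .stroke(Color.red, lineWidth: 2)
                }
            )
            // Fine for a quick demo; a real app should run Vision off the main thread.
            .onAppear { normalizedPath = Self.detectContourPath(in: image) }
    }

    /// Runs VNDetectContoursRequest and returns the normalized path, or nil on failure.
    static func detectContourPath(in image: UIImage) -> CGPath? {
        // Assumes `.up` orientation; CGImage carries no orientation metadata.
        guard let cgImage = image.cgImage else { return nil }
        let request = VNDetectContoursRequest()
        let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
        do {
            try handler.perform([request])
        } catch {
            return nil
        }
        return (request.results?.first as? VNContoursObservation)?.normalizedPath
    }
}

struct ContourOverlayView_Previews: PreviewProvider {
    static var previews: some View {
        // "coins" is a hypothetical asset-catalog image name.
        ContourOverlayView(image: UIImage(named: "coins") ?? UIImage())
    }
}
```

Keeping the detection in a static function makes it easy to call from a preview, a test, or a background queue without touching the view’s state.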
