Overview

The idea is to first train(or use a pretrained model, if available) a language model on the wikipedia dataset of that language that can accurately predict the next word given a set of words( kind of what the keyboards on our phones do when they suggest a word). We then use that model to classify reviews, tweets, articles etc and amazingly with a few tweaks, we can build a state-of-the-art model for text classification in that language. For purpose of this article, I’ll be building the language model for Hindi language and use it to classify reviews/articles.

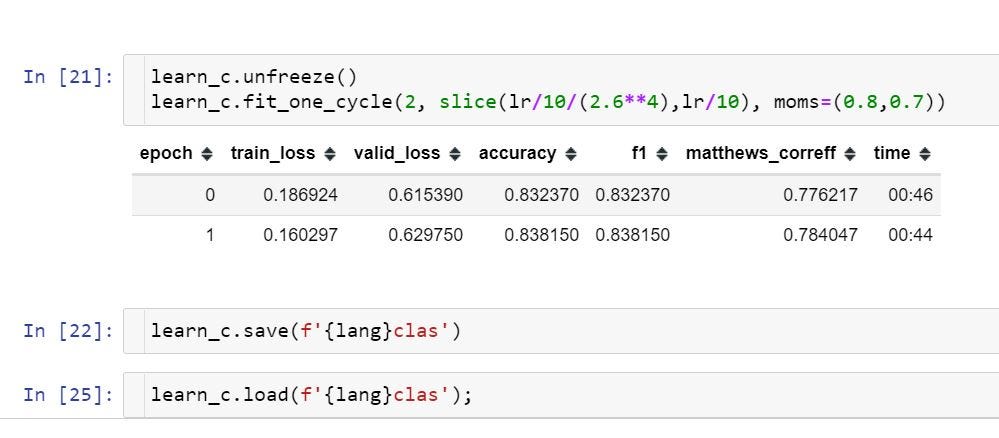

The method used in building this model is ULMFiT(Universal Language Model Fine-Tuning for Text Classification). The underlying concepts behind ULMFit are intricate and complex and the explaining its details would be better in a different article. However, the fastaiv1 library makes the task of language modelling and text classification quite easy and straightforward(requires less than 20 lines of code!!).

ULMFiT is also described by Jeremy Howard in in his mooc’s and can be found at these links. All the code mentioned below is available on my github.

The Wikipedia Dataset

We start off by downloading and cleaning the Wikipeda articles written in Hindi.

%reload_ext autoreload

%autoreload 2

%matplotlib inline

## importing the required libraries

from fastai import *

from fastai.text import *

torch.cuda.set_device(0)

## Initializing variables

## each lang has its code which is defined here(under the colunmn

## 'wiki': https://meta.wikimedia.org/wiki/List_of_Wikipedias

data_path = Config.data_path()

lang = 'hi'

name = f'{lang}wiki'

path = data_path/name

path.mkdir(exist_ok=True, parents=True) ## create directory

lm_fns = [f'{lang}_wt', f'{lang}_wt_vocab']

Now let’s download the articles from wikipedia. Wikipedia mantains a List of Wikipedias that contains information regarding the number of articles present in a particular language, number of edits, and depth. The ‘depth’ column (defined as [Edits/Articles] × [Non-Articles/Articles] × [1 − Stub-ratio] ) is a rough indicator of a Wikipedia’s quality, showing how frequently its articles are updated. The higher the depth, the greater would be the quality of the articles.

#deep-learning #nlp #naturallanguageprocessing #fastai #machine-learning