We are in an era of innovation that follows the digital revolution, in which everyone in the IT world is developing advanced technologies, tools, and ways of designing applications to meet rising business demands and tame the complexity of the IT ecosystem. The result is rapid technology drift. Every day, we face new showstoppers when trying to run our IT systems efficiently and in a controlled fashion. Fortunately, there is no shortage of options: a steady stream of new tools and technologies is available, because many organizations are dedicated to making our lives easier.

Consider the traditional way of developing applications with the waterfall model and a monolithic architecture: it became difficult to sustain once our systems grew dynamic. Clients did not want to wait months to see their application in production, which gave rise to the agile development model. Monolithic architecture also struggled with change, because pushing even a small update required revalidating the functionality of the whole application. As these problems mounted, multiple architecture patterns evolved, and microservice architecture is one of them. To stay in this race and meet ever-changing and ever-increasing user demands, organizations must focus on:

- Streamlining and Improving Business Process Efficiency

- Using Intelligent IT and Information on the Journey to New Technology

- Balancing Speed, Cost, Quality, and Risk

- Increasing Capacity to Innovate

New patterns and ways of designing applications focus on improving the end-user experience, but they also add complexity and create new challenges, such as:

- Maintaining Business Continuity

- Unpredictable Web Traffic

- Security Threats

- Operational Complexities

- Lack of Integration, Control, and Visibility

Microservice-based application architecture is one of the design patterns introduced to tackle some of the challenges listed above. It lets developers split an application into multiple independent parts, but in turn it increases the operational burden. Although it addresses some business issues, it creates new challenges in how microservices interact with and are controlled by one another. The network of microservices that make up an application, together with the interactions between them, is called a service mesh.

We needed a way to control how the different parts of an application share data with one another, because out of the box, service-to-service communication has gaps like:

- No Encryption

- No Retries, No Failover

- No Intelligent Load Balancing

- No Routing Decisions

- No Metrics or Traces

- No Default Access Control

Istio was developed to address these issues.

What Is Istio?

Istio is an open-source service mesh platform. It adds a dedicated infrastructure layer that makes service-to-service communication secure, efficient, and reliable.

It includes APIs that let it integrate with any logging platform or policy system. It is designed to run in a variety of environments, such as on-premises, in the cloud, in Kubernetes containers, and more. It provides behavioral insights into, and operational control over, the service mesh as a whole. Let us look at its architecture and components.

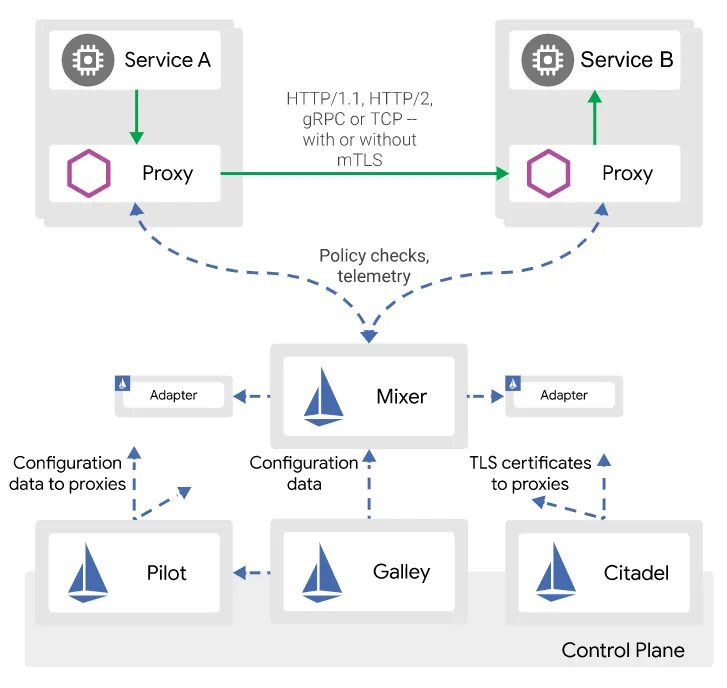

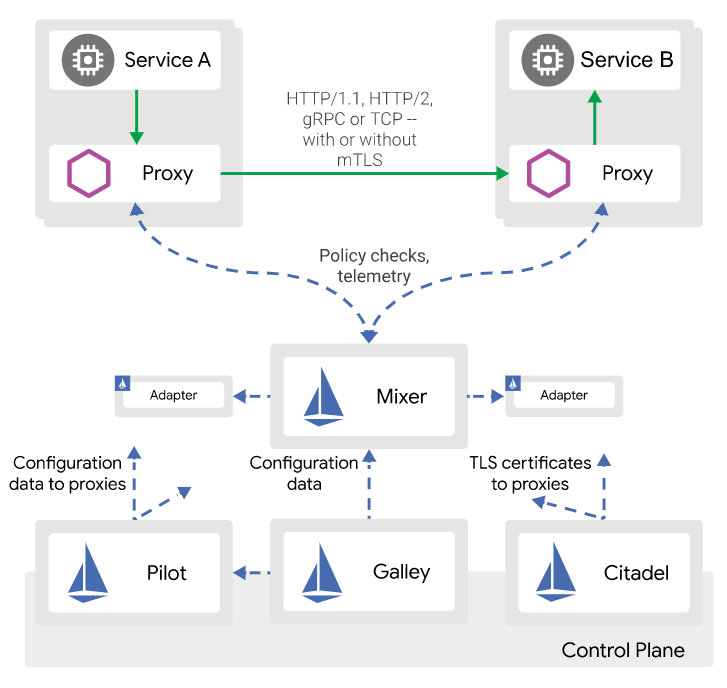

1.1 ISTIO Architecture (Source: https://istio.io/docs/ops/deployment/architecture/)

An Istio service mesh is logically split into a data plane and a control plane.

- Data Plane: It consists of Envoy proxies deployed as sidecars alongside each service. These proxies handle the communication between services and take care of functionality such as service discovery, load balancing, traffic management, and health checks.

- Control Plane: It manages and configures the proxies, providing a central point of management for the service mesh. Its components include:

  - Pilot: It provides configuration data, such as routing rules, to every Envoy proxy from a centralized place.

  - Citadel: It issues TLS certificates to every Envoy proxy.
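To make this division of labor concrete, here is a toy Python model of the two planes, loosely mirroring Pilot's role. A central control plane stores routing configuration and pushes it to every registered proxy, while each data-plane proxy makes per-request decisions locally from whatever configuration it last received. All class names and the weighted-route format are hypothetical illustrations, not Istio or Envoy APIs. Real Istio delivers configuration to Envoy over the network, and certificate issuance (Citadel's job) is not modeled here.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List

# Toy model of Istio's two planes. All names and the route format are
# hypothetical illustrations, not Istio or Envoy APIs.


@dataclass
class RouteConfig:
    """A weighted routing rule for one destination service."""
    service: str
    weights: Dict[str, int]  # version -> relative weight, e.g. {"v1": 90, "v2": 10}


@dataclass
class SidecarProxy:
    """Data plane: routes each request using the config it last received."""
    name: str
    routes: Dict[str, RouteConfig] = field(default_factory=dict)

    def apply(self, config: RouteConfig) -> None:
        self.routes[config.service] = config

    def pick_version(self, service: str, rng: random.Random) -> str:
        rule = self.routes[service]
        versions = list(rule.weights)
        # Weighted random choice: each version is picked in proportion to its weight.
        return rng.choices(versions, weights=[rule.weights[v] for v in versions])[0]


class ControlPlane:
    """Control plane: keeps config in one place and pushes it to every proxy."""

    def __init__(self) -> None:
        self.proxies: List[SidecarProxy] = []
        self.configs: Dict[str, RouteConfig] = {}

    def register(self, proxy: SidecarProxy) -> None:
        self.proxies.append(proxy)
        for config in self.configs.values():  # late joiners get the current config
            proxy.apply(config)

    def set_route(self, config: RouteConfig) -> None:
        self.configs[config.service] = config
        for proxy in self.proxies:  # push the change to the whole data plane
            proxy.apply(config)


if __name__ == "__main__":
    control = ControlPlane()
    frontend = SidecarProxy("frontend")
    control.register(frontend)
    control.set_route(RouteConfig("reviews", {"v1": 90, "v2": 10}))
    rng = random.Random(42)
    picks = [frontend.pick_version("reviews", rng) for _ in range(1000)]
    print("share sent to v2:", picks.count("v2") / len(picks))
```

The 90/10 weights are arbitrary. A split like this is the basic idea behind a canary deployment, where a small share of traffic is sent to a new version while the old one keeps serving the rest.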

Why Do I Need Istio?

Addressing service mesh challenges in application source code takes too much effort, because every service would have to reimplement the same logic. An alternative is to deploy a proxy next to every microservice, a pattern called sidecar deployment, and Istio does this for us. Instead of communicating directly with each other, microservices then communicate through their sidecars, which take care of the service mesh concerns. Istio gives us:

- Automatic load balancing for HTTP, WebSocket, and TCP traffic.

- Full control of traffic behavior with advanced routing rules, retries, failovers, and fault injection.

- A pluggable policy layer and configuration API supporting access controls, rate limits, and quotas.

- Automatic metrics, logs, and traces for all traffic within the cluster.

- Secure service-to-service authentication with strong identity management between services in a cluster.
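The sidecar idea can be sketched in a few lines of Python. In this toy model (all names are hypothetical, and this is not how Envoy is implemented), the application hands each request to its local proxy, which spreads requests across upstream instances round-robin and retries a failed request on the next instance. The application code itself contains no retry or load-balancing logic. In Istio, these behaviors are configured declaratively rather than coded by hand.

```python
import itertools
from typing import Callable, Optional, Sequence

# Toy sidecar proxy: hypothetical names, not an Envoy or Istio API.
# An "endpoint" stands in for one upstream service instance.
Endpoint = Callable[[str], str]


class UpstreamError(Exception):
    """Raised by an endpoint when it cannot serve a request."""


class Sidecar:
    def __init__(self, endpoints: Sequence[Endpoint], max_attempts: int = 3) -> None:
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._ring = itertools.cycle(endpoints)  # round-robin load balancing
        self.max_attempts = max_attempts

    def send(self, request: str) -> str:
        """Forward a request, retrying on failure up to max_attempts times."""
        last_error: Optional[Exception] = None
        for _ in range(self.max_attempts):
            # Each attempt takes the next instance in the ring, so with two or
            # more instances a retry fails over to a different one.
            endpoint = next(self._ring)
            try:
                return endpoint(request)
            except UpstreamError as err:
                last_error = err
        raise UpstreamError(f"all {self.max_attempts} attempts failed") from last_error


if __name__ == "__main__":
    def healthy(name: str) -> Endpoint:
        return lambda req: f"{name} handled {req}"

    def broken(req: str) -> str:
        raise UpstreamError("connection refused")

    proxy = Sidecar([broken, healthy("pod-b")])
    print(proxy.send("GET /orders"))  # prints: pod-b handled GET /orders
```

Here the first attempt hits the broken instance, and the retry succeeds on `pod-b` without the caller noticing the failure.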

Now that we have discussed Istio and the challenges it aims to solve, let us see it in action. We will focus on enabling Istio for a Kubernetes-based application, advanced routing, canary deployment, and setting up a Grafana dashboard to view service metrics.

In this article, we will see how to deploy Istio for microservices running on Kubernetes. For demo purposes, the Kubernetes cluster is hosted on Google Kubernetes Engine (GKE).
