Q-learning is one of the simplest algorithms to try reinforcement learning. Reinforcement learning, as the name suggests, focuses on learning (by an agent) in a reinforced environment. The agent performs an action, analyses the outcome, and gets a reward. The agent then learns to interact with its environment by taking into consideration the rewards which it will get by performing specific actions in a particular state.

Q-learning is simple because it works without a neural network (the one with a neural network becomes deep Q-learning). We update a Q table, which serves as a map for our agent, i.e., it tells our agent which action to perform in which state so as to get maximum reward.

You may find the GitHub project here:

Before we start …

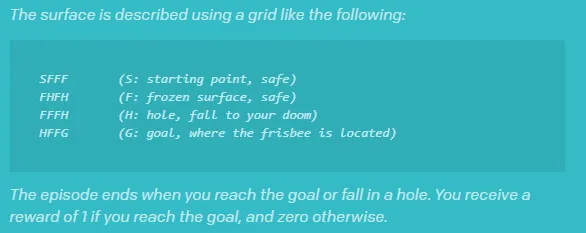

This article assumes that the readers are familiar with terms like _s_tate, action, episode, and reward in the context of reinforcement learning. Basic knowledge of Q-learning would be helpful. You’ll see an implementation of the Frozen Lake environment ahead, which is quite similar to Open AI’s Gym in Python.

As this is an Android project, you’ll see much more code in the GitHub repo than in the snippets below: The code that updates the UI as the agent that performs an action is not written in the code snippets. Also, you’ll find Kotlin Coroutines in action. To focus only on the Q-learning part, I have eliminated these lines (they are present in the GitHub repo) from the snippets below so as to avoid confusion and enhance readability.

So, enough of the disclaimers! Let’s move ahead!

#reinforcement-learning #android #android-app-development #machine-learning #heartbeat