In this article, we explore Azure Data Lake Analytics and query data using U-SQL.

Introduction to Azure Data Lake Analytics (ADLA)

The Microsoft Azure platform supports big data technologies such as Hadoop, HDInsight, and data lakes. A traditional data warehouse typically stores data from various sources, transforms it into a single format, and makes it available for analysis and decision making. Developers often write complex queries that can take hours to return data. As organizations increase their footprint in the cloud, they are adopting cloud data warehouse solutions such as Amazon Redshift, Azure Synapse Analytics (formerly Azure SQL Data Warehouse), or Snowflake. These cloud solutions are highly scalable and reliable, and can support your data storage and query processing requirements.

A data warehouse follows the Extract-Transform-Load (ETL) process for moving data.

- Extract: Extract data from different data sources

- Transform: Transform data into a specific format

- Load: Load data into the predefined data warehouse schema and tables

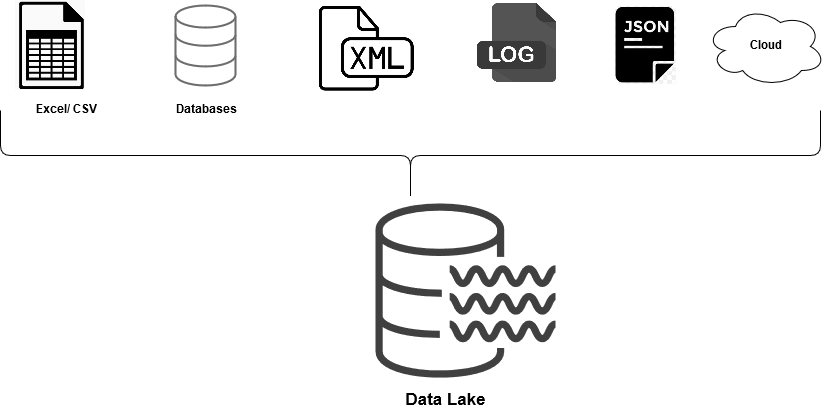
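The three ETL steps can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the two in-memory sources, the field names, and the `sales` table are hypothetical, and an in-memory SQLite database stands in for the warehouse.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs: one CSV source and one JSON source.
CSV_SOURCE = "order_id,amount\n1,19.99\n2,5.00\n"
JSON_SOURCE = '[{"id": 3, "total": "42.50"}]'


def extract():
    """Extract: read rows from each source in its native format."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Transform: map both sources onto one (order_id, amount) format."""
    unified = [(int(r["order_id"]), float(r["amount"])) for r in csv_rows]
    unified += [(int(r["id"]), float(r["total"])) for r in json_rows]
    return unified


def load(rows, conn):
    """Load: write the rows into a table whose schema is defined up front."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(order_id INTEGER PRIMARY KEY, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()


def run_etl():
    """Run the three steps in order against an in-memory database."""
    conn = sqlite3.connect(":memory:")
    load(transform(*extract()), conn)
    return conn
```

Note that the table schema has to exist before any row is loaded, which is the key difference from the data lake approach described next.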

A data lake does not require a rigorous schema and does not convert data into a single format before analysis. It stores data in its original format, such as binary, video, image, text, document, PDF, or JSON, and transforms it only when needed. The data can be structured, semi-structured, or unstructured.

A few useful features of a data lake are:

- It stores raw data (original data format)

- It does not have any predefined schema

- You can store unstructured, semi-structured, and structured data in it

- It can handle petabytes (PBs), or even hundreds of petabytes, of data

- A data lake follows the schema-on-read approach, in which data is transformed only when it is required
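Schema on read can be illustrated with a short Python sketch. The records and field names are made up for illustration. The point is that the raw lines are stored untouched, and a schema is applied only when a query needs one.

```python
import json

# Hypothetical raw store: lines are kept exactly as they arrived.
RAW_LAKE = [
    '{"device": "sensor-1", "temp_c": "21.5", "ts": "2021-01-01T00:00:00"}',
    '{"device": "sensor-2", "temp_c": "19.0"}',
    '{"device": "sensor-1", "temp_c": "22.5", "firmware": "1.2"}',
]


def read_with_schema(raw_lines, schema):
    """Apply a schema at read time.

    `schema` maps a field name to a converter. Fields outside the schema
    are ignored, and fields missing from a record become None.
    """
    for line in raw_lines:
        record = json.loads(line)
        yield {
            field: convert(record[field]) if field in record else None
            for field, convert in schema.items()
        }


def average_temperature(raw_lines):
    """One query's view of the data: only device and temperature matter."""
    schema = {"device": str, "temp_c": float}
    temps = [row["temp_c"] for row in read_with_schema(raw_lines, schema)]
    return sum(temps) / len(temps)
```

A different query could pass a different schema, for example `{"device": str, "ts": str}`, over the same raw lines without rewriting the stored data.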

At a high level, the Azure data platform architecture consists of the following stages. Image reference: Microsoft docs

- **Ingestion:** Collect data from various data sources and store it in the Azure data lake in its original format

- **Storage:** Store data in Azure Data Lake Storage, Amazon S3, or Google Cloud Storage

- **Processing:** Process data from the raw storage into a compatible format

- **Analytics:** Perform data analysis using the stored and processed data. You can use Azure Data Lake Analytics (ADLA), HDInsight, or Azure Databricks

Creating an Azure Data Lake Analytics (ADLA) Account

You need to create an ADLA account in your subscription before you can process data with it. Log in to the Azure portal using your credentials. Under Azure services, click Data Lake Analytics.

On the New Data Lake Analytics account page, enter the following information.

- **Subscription and Resource group:** Select your Azure subscription and resource group, if one already exists. You can also create a new resource group from the Data Lake Analytics page

- **Data Lake Analytics Name:** Specify a suitable name for the analytics service

- **Location:** Select the Azure region from the drop-down

- **Storage subscription:** Select the storage subscription from the drop-down list

- **Azure Data Lake Storage Gen1:** Create a new Azure Data Lake Storage Gen1 account

- **Pricing package:** Select the pay-as-you-go model or a monthly commitment, depending on your requirements
