TL;DR: Transformers are an exciting and (relatively) new part of Machine Learning (ML), but there are a lot of concepts that need to be broken down before you can understand them. This is the first post in a column I’m writing about them. Here we focus on how the basic self-attention mechanism works, which is the first sub-layer of each Transformer layer. Essentially, for each input vector, Self-Attention produces a vector that is the weighted sum over all the vectors in the input sequence (itself included). The weights are determined by the relationship, or _connectedness_, between the words. This column is aimed at ML novices and enthusiasts who are curious about what goes on under the hood of Transformers.
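The weighted sum described above can be sketched in a few lines of plain Python. This is a minimal sketch of the most basic, unparameterised form of self-attention, assuming the weights come from dot products between input vectors, normalised with a softmax so that the weights for each output sum to 1. Real Transformers add learned query, key and value projections, which this sketch leaves out. The function names (`softmax`, `dot`, `self_attention`) and the toy vectors are hypothetical, chosen for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow and doesn't change the result
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def self_attention(xs):
    """Basic self-attention: each z_i is a weighted sum of all x_j."""
    zs = []
    for x_i in xs:
        # How strongly x_i relates to every vector in the sequence, itself included
        weights = softmax([dot(x_i, x_j) for x_j in xs])
        # Weighted sum of the input vectors, computed component by component
        z_i = [sum(w * x_j[k] for w, x_j in zip(weights, xs)) for k in range(len(x_i))]
        zs.append(z_i)
    return zs


# Three toy 2-dimensional "word" vectors (made-up values)
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
zs = self_attention(xs)
print(len(zs))  # one output vector per input vector
```

Note that there is no learning here at all: the weights depend only on the input vectors themselves, which is what makes this the simplest possible starting point.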


1. Introduction

Transformers are an ML architecture that has been used successfully in a wide variety of NLP tasks, especially sequence-to-sequence (seq2seq) ones such as machine translation and text generation. In seq2seq tasks, the goal is to take a sequence of inputs (e.g. the words of an English sentence) and produce a desired sequence of outputs (e.g. the same sentence in German). Since their inception in 2017, Transformers have usurped the dominant architecture of their day (LSTMs) for seq2seq tasks and have become almost ubiquitous in news about NLP breakthroughs (OpenAI’s GPT-2, for instance, even appeared in mainstream media!).

Fig 1.1 — machine translation (EN → DE)⁴

This column is intended as a very gentle, gradual introduction to the math, code and concepts behind the Transformer architecture. There’s no better place to start than the attention mechanism, because the most basic Transformers rely purely on attention mechanisms³, with no recurrence or convolutions.

2. Self-Attention — the math

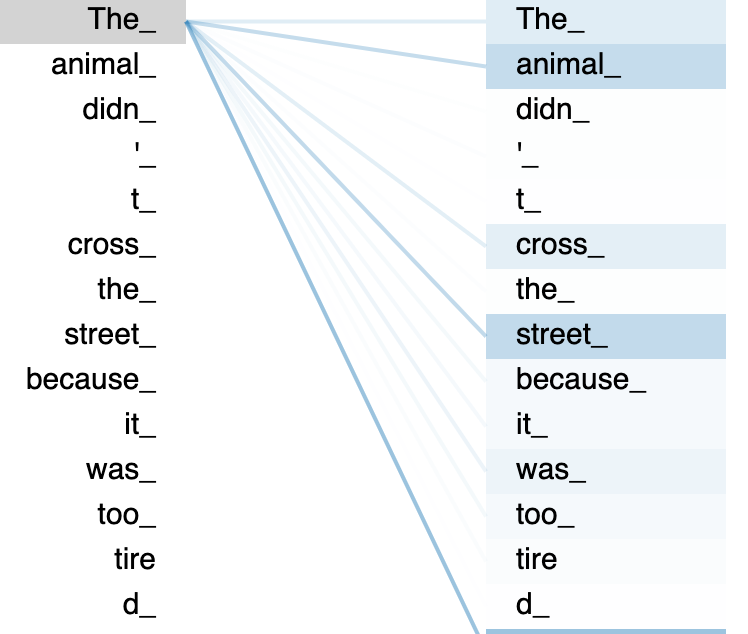

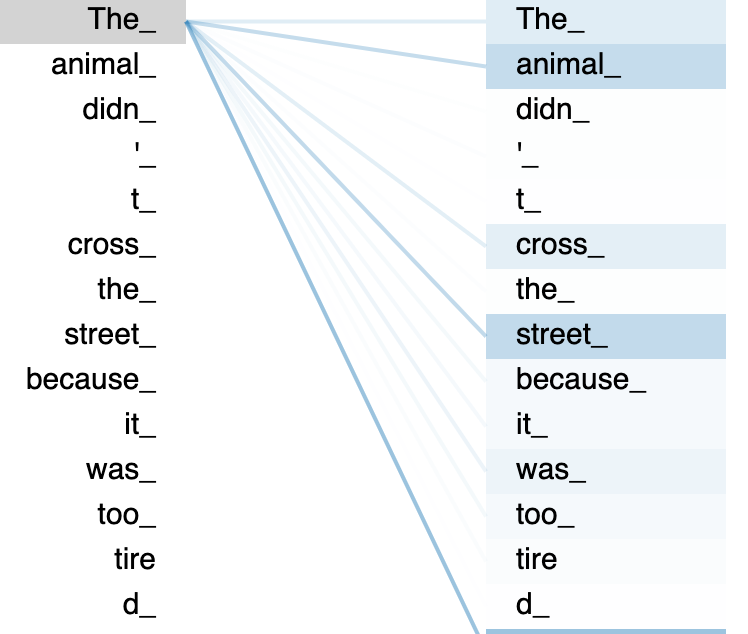

We want an ML system to learn the important relationships between words, much as a human reader understands how the words in a sentence relate to one another. In Fig 2.1, you and I both know that “The” refers to “animal” and should therefore have a strong connection with that word. As the diagram’s colour coding shows, the model has learned that there is some connection between “animal”, “cross”, “street” and “the”, because they are all related to “animal”, the subject of the sentence. This is achieved through Self-Attention.⁴

Fig 2.1 — which words does “The” pay attention to?⁴

At its most basic level, Self-Attention is a process by which one sequence of vectors _x_ is encoded into another sequence of vectors _z_ (Fig 2.2). Each of the original vectors is just a block of numbers that **represents a word**. Its corresponding _z_ vector represents both the original word _and_ its relationship with the other words around it.
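Written out, one common form of this basic (unparameterised) mapping looks like the following. This assumes the weights are dot products normalised with a softmax; $t$ is the number of vectors in the sequence, and $w'_{ij}$ is a raw score introduced here for illustration.

$$
w'_{ij} = x_i^\top x_j, \qquad
w_{ij} = \frac{\exp(w'_{ij})}{\sum_{k=1}^{t} \exp(w'_{ik})}, \qquad
z_i = \sum_{j=1}^{t} w_{ij}\, x_j
$$

For a fixed $i$ the weights $w_{ij}$ are positive and sum to 1, so each $z_i$ is a weighted average of the inputs, and there is exactly one $z_i$ for every $x_i$, which is why the output sequence is as long as the input.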

Fig 2.2: sequence of input vectors x getting turned into another equally long sequence of vectors z

Vectors represent some quantity at each point in a _space_, like the flow of water particles in an ocean or the effect of gravity at any point around the Earth. You can think of words as vectors in the space of all words. The direction of each word vector carries meaning: similarities and differences between the vectors correspond to similarities and differences between the words themselves (I’ve written about the subject before here).
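One common way to measure how similar two word vectors are is cosine similarity, which depends only on their directions. The sketch below uses hypothetical, made-up 3-dimensional vectors for “cat”, “dog” and “car”; real word embeddings are learned from data and usually have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1 = same direction, 0 = orthogonal."""
    dot = sum(ai * bi for ai, bi in zip(a, b))
    norm_a = math.sqrt(sum(ai * ai for ai in a))
    norm_b = math.sqrt(sum(bi * bi for bi in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors: "cat" and "dog" point in similar directions, "car" does not
cat = [0.9, 0.8, 0.1]
dog = [0.8, 0.9, 0.2]
car = [0.1, 0.2, 0.9]

print(round(cosine_similarity(cat, dog), 3))  # close to 1
print(round(cosine_similarity(cat, car), 3))  # much smaller
```

The simplest form of self-attention typically scores word pairs with the plain dot product instead, which is cosine similarity scaled by the lengths of the two vectors.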

Let’s start with the first three vectors, focusing on how x2, our vector for “cat”, is turned into z2. The same steps are repeated for each of the input vectors.
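The computation for x2 can be traced by hand. This is a minimal sketch assuming the basic, unparameterised version of self-attention (dot-product scores, then a softmax, then a weighted sum); the names `x1`, `x2` and `x3` mirror the text, but their values are hypothetical 2-dimensional vectors chosen for illustration.

```python
import math

# Hypothetical 2-D vectors for the first three words; x2 stands for "cat"
x1 = [1.0, 0.0]
x2 = [0.5, 1.0]
x3 = [0.0, 2.0]
xs = [x1, x2, x3]

# Step 1: raw scores, the dot product of x2 with each input vector (itself included)
raw = [sum(a * b for a, b in zip(x2, x_j)) for x_j in xs]

# Step 2: softmax, so the weights are positive and sum to 1
exps = [math.exp(s) for s in raw]
total = sum(exps)
weights = [e / total for e in exps]

# Step 3: z2 is the weighted sum of x1, x2 and x3
z2 = [sum(w * x_j[k] for w, x_j in zip(weights, xs)) for k in range(len(x2))]

print("raw scores:", raw)
print("weights:   ", [round(w, 3) for w in weights])
print("z2:        ", [round(v, 3) for v in z2])
```

With these toy values, x3 has the largest dot product with x2, so it receives the largest weight and pulls z2 towards itself. Repeating the same three steps for x1 and x3 gives z1 and z3.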
