Exploring the use of VGG16 for Image Classification

What is Transfer Learning?

**Transfer learning** is a research problem in machine learning that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem.

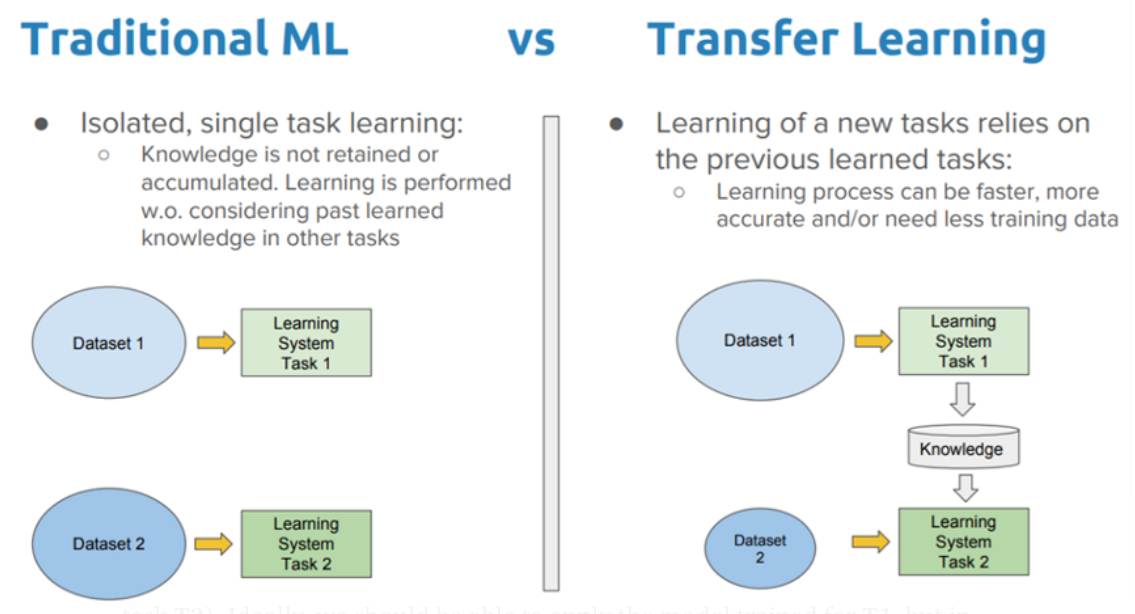

Traditional Machine Learning vs. Transfer Learning (source: Dipanjan Sarkar)

The traditional machine learning approach trains each model in isolation: it learns patterns from its own training data and generalizes them to unseen data for that same task. Transfer learning instead starts from patterns previously learned on one task and reuses them to solve a different one.

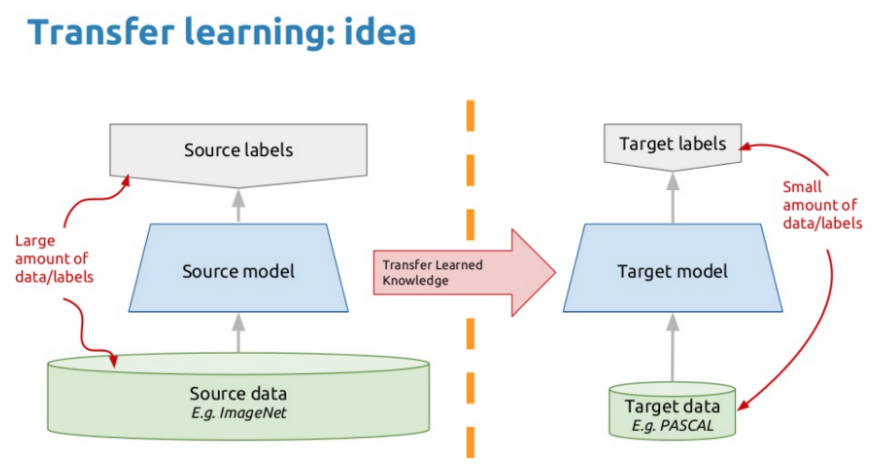

Basic Idea of Transfer Learning (source: Integrate.ai)

There are two common approaches to transfer learning:

- Developed model approach: Develop a source model for a related task, making sure it performs better than a naive model so that some feature learning has taken place. Then reuse the developed model as the starting point for the task of interest, tuning it as needed.

- Pre-trained model approach: Select a source model that has already been trained and made available, reuse it as the starting point, and fine-tune it for the task of interest.

In this post, we will focus on the pre-trained model approach, as it is the more common of the two in deep learning.

What are Pre-trained Models?

A pre-trained model is a saved network that was previously trained on a large dataset, typically for a large-scale image-classification task. It can be used as is, or customized for a given task through transfer learning.

The intuition behind transfer learning for image classification is that if a model is trained on a large and general enough dataset, it will effectively serve as a generic model of the visual world. We can then reuse the feature maps it has learned instead of starting from scratch, which would mean training a large model on a large dataset ourselves.
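To make the idea concrete without any deep-learning library, here is a toy sketch of the feature-extraction flavor of transfer learning: a frozen feature extractor is reused as is, and only a small new classifier head is trained on the new task. Everything in it is hypothetical: `FROZEN_WEIGHTS` merely stands in for the learned weights of a real pre-trained network (such as VGG16's convolutional base), and the "new task" is a made-up grid of 2D points. The head is a perceptron rather than the dense softmax layer usually trained by gradient descent in deep-learning frameworks; it was chosen because it needs no libraries and is guaranteed to converge on linearly separable data like this.

```python
# Hypothetical frozen weights standing in for a pre-trained feature extractor
# (e.g. the convolutional base of VGG16). They are never updated below.
FROZEN_WEIGHTS = (
    (1.0, 0.0),
    (0.0, 1.0),
    (1.0, 1.0),
)


def extract_features(x):
    """Frozen feature extractor: one fixed linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def train_head(features, labels, max_epochs=1000):
    """Train a new linear head (a perceptron) on the frozen features.

    Labels are +1 or -1. Only the head's weights and bias change; the
    feature extractor is used as is.
    """
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for f, y in zip(features, labels):
            score = sum(w * fi for w, fi in zip(weights, f)) + bias
            if y * score <= 0:  # wrong side of (or on) the decision boundary
                weights = [w + y * fi for w, fi in zip(weights, f)]
                bias += y
                mistakes += 1
        if mistakes == 0:  # a full pass with no mistakes: training has converged
            break
    return weights, bias


def predict(weights, bias, x):
    """Run an input through the frozen extractor, then the trained head."""
    score = sum(w * fi for w, fi in zip(weights, extract_features(x))) + bias
    return 1 if score > 0 else -1


# Hypothetical new task on a grid over the unit square: label +1 when the
# coordinates sum to at least 1.3 and -1 when they sum to at most 0.7
# (the band in between is left out so the two classes are cleanly separated).
points, labels = [], []
for i in range(11):
    for j in range(11):
        if i + j >= 13:
            points.append((i / 10, j / 10))
            labels.append(1)
        elif i + j <= 7:
            points.append((i / 10, j / 10))
            labels.append(-1)

# Features of the training points, computed with the frozen extractor.
features = [extract_features(p) for p in points]
head_weights, head_bias = train_head(features, labels)

correct = sum(predict(head_weights, head_bias, p) == y for p, y in zip(points, labels))
train_accuracy = correct / len(points)
print(f"training accuracy: {train_accuracy:.2f}")  # prints "training accuracy: 1.00"
print(predict(head_weights, head_bias, (0.95, 0.95)))  # an unseen point; prints 1
```

The design choice to notice is in `train_head`: only the head's weights and bias are updated, while `FROZEN_WEIGHTS` is left untouched. Fine-tuning would go one step further and also update some of the pre-trained weights, typically with a small learning rate.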

Let’s take a deep dive into VGG16, a notable pre-trained model that was submitted to the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2014.
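To get a feel for what is being reused, the back-of-the-envelope sketch below counts VGG16's parameters from its layer configuration. It assumes the standard configuration from the original VGG paper: thirteen 3x3 convolutional layers (with padding that preserves height and width), five 2x2 max-pooling layers with stride 2, and three fully connected layers, on a 224x224x3 input with 1,000 ImageNet output classes. The names `VGG16_CONV_CONFIG`, `FC_SIZES` and `count_vgg16_params` are illustrative and not part of any library.

```python
# Assumed VGG16 layer configuration: numbers are the output channels of
# 3x3 convolutions (padding keeps height/width), "M" is a 2x2 max-pool, stride 2.
VGG16_CONV_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                     512, 512, 512, "M", 512, 512, 512, "M"]
# Two hidden dense layers followed by the 1000-way ImageNet output layer.
FC_SIZES = [4096, 4096, 1000]


def count_vgg16_params(input_size=224, in_channels=3):
    """Return (convolutional base params, dense layer params)."""
    conv_params = 0
    channels = in_channels
    spatial = input_size
    for layer in VGG16_CONV_CONFIG:
        if layer == "M":
            spatial //= 2  # max-pooling halves height and width
        else:
            conv_params += 3 * 3 * channels * layer + layer  # kernel weights + biases
            channels = layer
    # The flattened output of the convolutional base (7 * 7 * 512 for a
    # 224x224 input) feeds the first dense layer.
    features = spatial * spatial * channels
    dense_params = 0
    for units in FC_SIZES:
        dense_params += features * units + units  # weights + biases
        features = units
    return conv_params, dense_params


conv, dense = count_vgg16_params()
print(f"conv base:  {conv:,}")          # prints "conv base:  14,714,688"
print(f"dense head: {dense:,}")         # prints "dense head: 123,642,856"
print(f"total:      {conv + dense:,}")  # prints "total:      138,357,544"
```

Under these assumptions the count comes to 138,357,544 parameters. Only about 14.7 million of them are in the convolutional base, the part typically kept when the model is reused as a feature extractor, while the first dense layer alone holds more than 100 million.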
