I love web scraping. Not only has it become a big industry in recent years, but it’s also a lot of fun. It can help you easily find interesting insights in data that already exists. Today, I’m going to build a very basic web scraper that can search through DuPont Registry (a marketplace for expensive cars, homes, and yachts); for this scraper, I’ll be focusing on the car section.

I will be using two relevant gems: Nokogiri and HTTParty. Nokogiri is a gem that lets you parse HTML and XML into Ruby objects. HTTParty, on the other hand, simplifies making HTTP requests, which is how we’ll pull raw HTML into our Ruby code. These two gems will work together in our scraper.

It’s important to note that although Nokogiri and HTTParty are cool, they’re more like sprinkles on top. The core skills for building a program like this are defining and initializing a class and its instance(s), iterating through hashes and arrays, and building helper methods.

Getting Started

If you have not already, make sure that you have your essential gems installed and required in your code. Also, I like to use byebug or pry so that I can stop the code if needed and take a look at what’s going on!

```ruby
require 'nokogiri'
require 'httparty'
require 'byebug'
require 'pry'

class Scraper
  # we will be adding code here shortly
end
```

Initializing an Instance

Now, let’s think about our Scraper class. What kind of methods should it have? What does this class need to keep track of?

We will need to decide this before defining how our new scraper is initialized. I’ve already given this a little thought, and here’s what I came up with:

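```ruby
class Scraper
  attr_reader :url, :make, :model

  def initialize(make, model)
    @make = make.capitalize   # e.g. "bentley" -> "Bentley"
    @model = model.capitalize # e.g. "continental gt" -> "Continental gt"
    # build the search URL; .sub swaps the first space for "--"
    @url = "https://www.dupontregistry.com/autos/results/#{make}/#{model}/for-sale".sub(" ", "--")
  end
end
```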


Let’s break this down. Each instance of a Scraper class should know what make and model it’s supposed to be looking at and which URL it needs to visit to find its data. It would also be nice if these instance variables were readable, so I’ve added an attr_reader for each of them.

DuPont Registry’s URL paths are fairly straightforward, so we can use string interpolation to drop make and model into the path, generate our destination URL, and save it to the @url instance variable. The .sub(" ", "--") at the end of the URL string replaces a space with two dashes, which is how the site represents spaces in its URLs. Note that .sub only replaces the first space it finds; for model names with several words, .gsub would replace all of them.

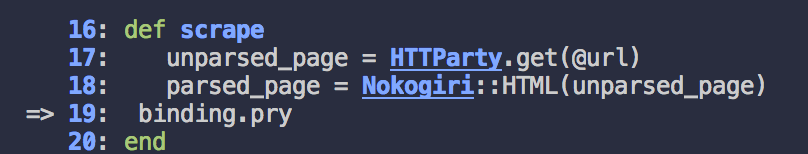
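Here’s a small standalone sketch comparing .sub and .gsub on a three-word model name. The slugify and build_search_url helpers are hypothetical (they aren’t part of the Scraper class), and the sketch also lowercases the make and model, assuming, as the URLs in this post suggest, that DuPont Registry’s paths are lowercase with "--" in place of each space.

```ruby
# Hypothetical helpers, not part of the Scraper class.
BASE_URL = "https://www.dupontregistry.com/autos/results"

# Lowercase the text and replace EVERY space with "--"
# (assumes the "--" convention seen in DuPont Registry's listing links).
def slugify(text)
  text.strip.downcase.gsub(" ", "--")
end

def build_search_url(make, model)
  "#{BASE_URL}/#{slugify(make)}/#{slugify(model)}/for-sale"
end

puts "continental gt speed".sub(" ", "--")  # sub only touches the first space
puts "continental gt speed".gsub(" ", "--") # gsub replaces all of them
puts build_search_url("Bentley", "Continental GT Speed")
```

With .sub, a model like "continental gt speed" would come out as "continental--gt speed", which is why .gsub is the safer choice once model names get longer.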

Time to add a little more functionality. We need our scraper instance to actually, well, scrape. Let’s start building out some instance methods.

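```ruby
class Scraper
  attr_reader :url, :make, :model

  def initialize(make, model)
    @make = make.capitalize
    @model = model.capitalize
    @url = "https://www.dupontregistry.com/autos/results/#{make}/#{model}/for-sale".sub(" ", "--")
  end

  # Fetches the page with HTTParty and hands its HTML body to Nokogiri
  def parse_url(url)
    unparsed_page = HTTParty.get(url)
    Nokogiri::HTML(unparsed_page.body)
  end

  def scrape
    parsed_page = parse_url(@url)
    binding.pry # second breakpoint: inspect parsed_page here
  end

  binding.pry # first breakpoint: runs while the class body is loading
  0 # placeholder statement so binding.pry isn't the last line of the class body
end
```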


I want to get started on these helper methods early, before our #scrape method becomes a novel. The #parse_url method takes a URL as an argument and calls on HTTParty to pull in the raw HTML; Nokogiri then takes that unparsed page and… umm… parses it. Just like that, we can take an entire webpage and transform it into a usable Ruby object! Inside #scrape, we save that object to a local variable called parsed_page. Now is a pretty good time to set a binding.pry and take a look at what we actually have before we go any further.

Let’s go ahead and run this code in our terminal with the command ruby scraper.rb. (This assumes that you’re coding in a file named scraper.rb; if your file has a different name, adjust accordingly.) Make sure that you are in the correct directory.

Now, you are (or should be) in a pry session, so we can start typing Ruby code into our terminal. First, let’s create a new Scraper instance:

```ruby
bentley = Scraper.new("bentley", "continental gt")
```

Cool, we have a new instance of a Scraper that is going to be looking for Bentley Continental GTs at https://www.dupontregistry.com/autos/results/bentley/continental--gt/for-sale. We can confirm this by typing the following into our terminal:

```ruby
bentley.url
#=> "https://www.dupontregistry.com/autos/results/bentley/continental--gt/for-sale"
```

Great! Now let’s get down to business. We need to call our #scrape method on our Scraper instance of bentley so that we can hit our second binding.pry and take a look at what we got:

```ruby
bentley.scrape
```

What I’m interested in at this point is what exactly parsed_page is, since there’s a lot of Ruby gem trickery going on. Let’s call on the parsed_page local variable.

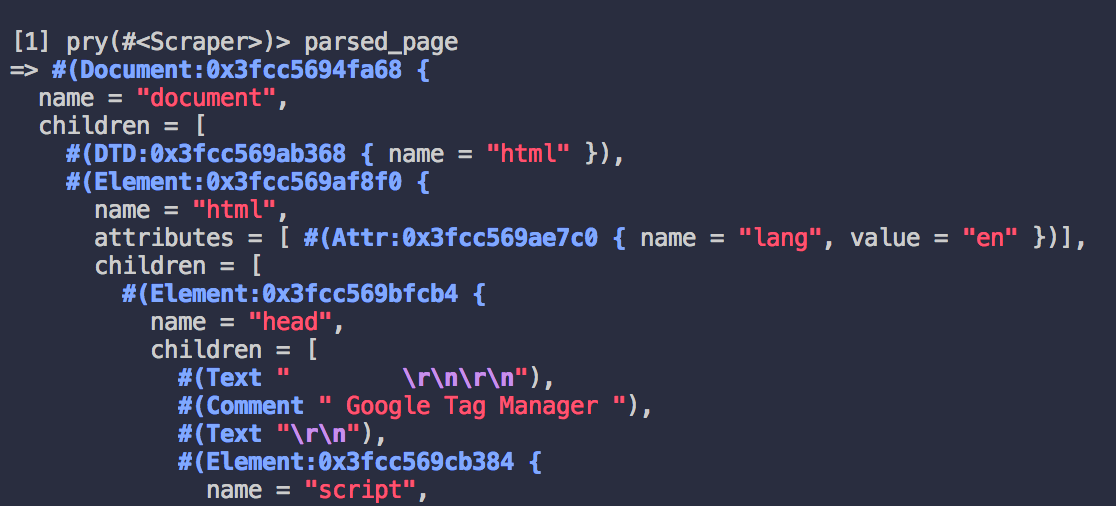

pry(#<Scraper>)> parsed_page

Whoa! Okay. That’s a lot of stuff. Clearly it’s doing something, I’m just not sure what to do with all of this yet. Mine looked something like this:

This may look different if you are scraping a different website.

Before we get too deep into trying to figure out how to scrape our bits of data from this huge object, let’s look at what I’m trying to get to so we can reverse engineer it. I’d like a hash that looks something like this:

```ruby
{ year: 2007,
  name: "Bentley",
  model: "Continental GT",
  price: 150000,
  link: "https://www.dupontregistry.com/link_to_my_car" }
```


Cool, so rather than trying to pull out every piece of data, we’re just looking for five: year, make (stored under name), model, price, and link. But wait! We already know our make and model from when we instantiated our scraper, so we’re really only looking for three data points.

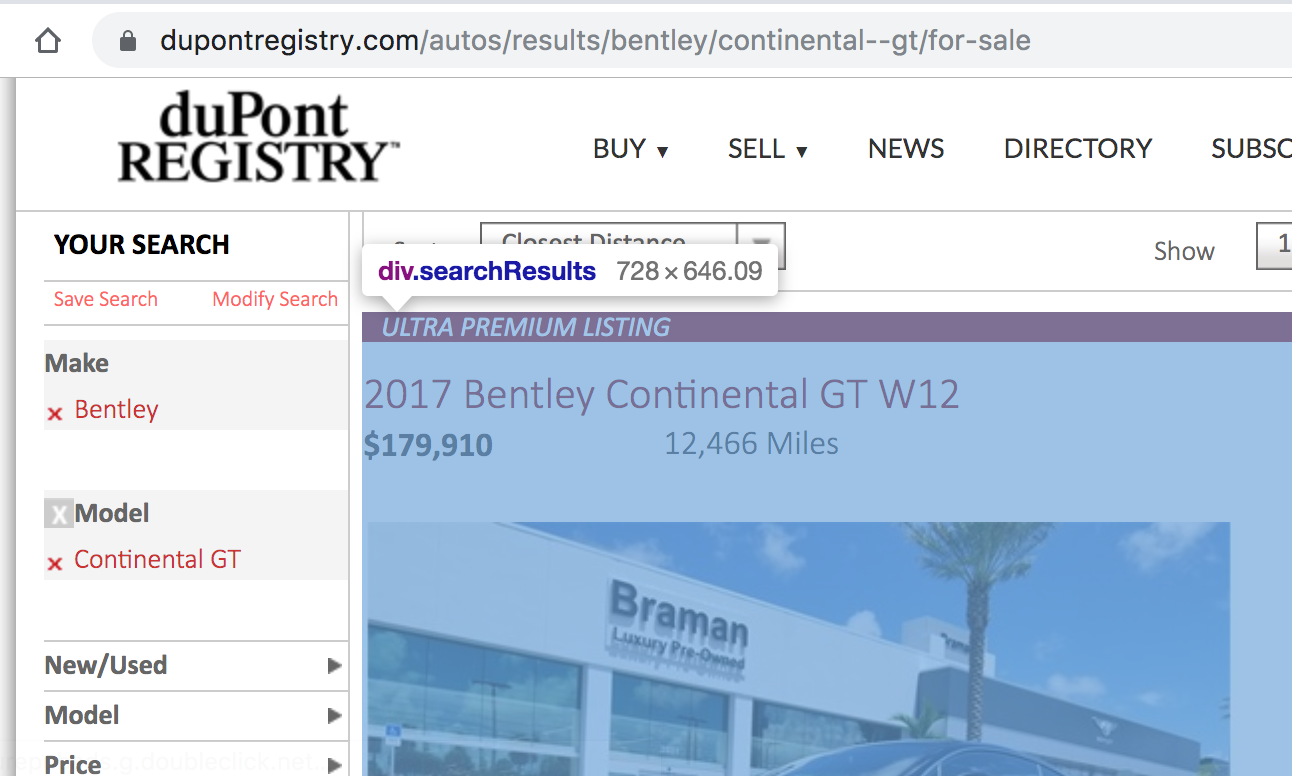
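One way to picture this is a small base hash of the values the scraper already knows, merged with the three values scraped from each listing. This is a minimal sketch with a hypothetical car_hash helper, filled with the same placeholder values as the target hash above; it isn’t the method the finished scraper uses.

```ruby
# Hypothetical helper: combine what the scraper already knows (make, model)
# with the three values scraped from a single listing.
def car_hash(make, model, year:, price:, link:)
  { name: make, model: model }.merge(year: year, price: price, link: link)
end

car = car_hash("Bentley", "Continental GT",
               year: 2007, price: 150000,
               link: "https://www.dupontregistry.com/link_to_my_car")
p car
```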

Dealing With HTML

Now that we know what we’re looking for, let’s look through the HTML of the page we want to scrape to find our paths. First, let’s see if we can find the container which includes all of our relevant car info. We’ll need to visit that listings page and open up developer tools.

Let’s save each listing in a variable that we can use later. We’ll use the .css method from Nokogiri to build this array.

```ruby
cars = parsed_page.css('div.searchResults')
# returns a Nokogiri::XML::NodeSet, an array-like collection where each element is the parsed HTML of one listing
```

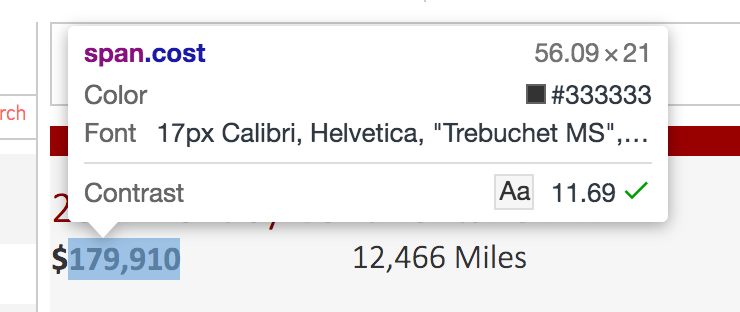

Now, let’s just try to find the price.

We’ll have to play around with this in order to pull out the text we need. It’s best to do this in a pry or byebug session. I was able to pull out the price using the following code:

```ruby
car.css('.cost').children[1].text.delete(",").to_i
# looks in one listing (car is a single element of cars) for the element with the cost class
# looks in its children at an index of 1 (this is where the price lives)
# converts it to text
# deletes every comma, e.g. "179,910" -> "179910"
# converts the string into an integer
```
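It’s worth being careful about how the commas are removed: .sub(",", "") replaces only the first comma, so a seven-figure price like "1,250,000" would parse as 1250. Here’s a small sketch of a hypothetical parse_price helper that strips every non-digit character instead. It assumes the price text holds only a whole-dollar number, commas, and possibly a dollar sign (cents would be folded into the number).

```ruby
# Hypothetical helper: turn listing price text into an Integer.
# Removing every non-digit handles commas and a leading "$" alike.
def parse_price(text)
  text.gsub(/\D/, "").to_i
end

puts "1,250,000".sub(",", "").to_i # only the first comma is removed
puts parse_price("1,250,000")
puts parse_price("$179,910")
```

Text with no digits at all comes back as 0, so a price of 0 is better read as "no price listed" than as a real price.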

We’ve got price, now let’s get year. In the same fashion, we’ll need to poke around with developer tools to find our string that contains the year of the car. In my case, this ended up being a string that looked like “2017 Bentley Continental GT V8 S” so I decided to just steal the first 4 characters and convert it into an integer:

```ruby
car.css('a').children[0].text[0..4].strip.to_i
# looks in one listing for its <a> tag
# looks in its children at an index of 0 (this is where the title text lives)
# converts to text
# takes characters 0 through 4 (i.e. "2017 ": the year plus the space after it)
# strips that trailing whitespace
# converts to integer
```
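Slicing by position works as long as every title starts with the year. If you’d rather not depend on that, a regular expression can pick out the first four-digit year wherever it appears. The parse_year helper below is hypothetical, and it assumes model years fall between 1900 and 2099.

```ruby
# Hypothetical helper: find the first standalone 4-digit year (19xx or 20xx)
# in a listing title. Returns nil when no year is present.
def parse_year(title)
  match = title[/\b(?:19|20)\d{2}\b/]
  match && match.to_i
end

puts parse_year("2017 Bentley Continental GT V8 S")
p parse_year("Bentley Continental GT") # no year in the title
```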

Last but not least, let’s get the link to the car’s page.

```ruby
car.css('a').attr('href').value
# looks in one listing for its <a> tag
# gets the href attribute of the first matching <a>
# pulls out its value (as a string)
```
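The href values here are root-relative paths (they start with /autos/listing/), so when create_car_hash glues one onto "https://www.dupontregistry.com/" with string interpolation, the result contains a double slash, as you can see in the output further down. Ruby’s standard library has URI.join, which resolves a relative path against a base URL. Here’s a small sketch; the listing_url helper is hypothetical, and the href is copied from that output.

```ruby
require "uri"

# Hypothetical helper: resolve a listing's href against the site root.
# URI.join handles the leading "/" so the result has no "//".
SITE_ROOT = "https://www.dupontregistry.com/"

def listing_url(href)
  URI.join(SITE_ROOT, href).to_s
end

href = "/autos/listing/2017/bentley/continental--gt/2080775"
puts "#{SITE_ROOT}#{href}" # naive interpolation: note the double slash
puts listing_url(href)
```

A nice side effect: if an href is ever a full absolute URL, URI.join returns it unchanged instead of prefixing the site root a second time.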

So, we have all of our jQuery-esque selectors figured out. As you read on, you’ll see how they’re used in the helper method create_car_hash.

Finishing Up The Scraper

From here, it’s pretty easy to finish up our scraper. Let’s set up our helper methods so that our #scrape method has everything it needs.

```ruby
def create_car_hash(car_obj)
  # creates a hash with the values we need from each parsed car listing
  car_obj.map do |car|
    {
      year: car.css('a').children[0].text[0..4].strip.to_i,
      name: @make,
      model: @model,
      price: car.css('.cost').children[1].text.delete(",").to_i,
      link: "https://www.dupontregistry.com/#{car.css('a').attr('href').value}"
    }
  end
end

def get_all_page_urls(array_of_ints)
  # gets URLs of all pages, not just the first page
  array_of_ints.map { |number| @url + "/pagenum=#{number}" }
end

def get_number_of_pages(listings, cars_per_page)
  # finds how many pages of listings exist (rounding up when there's a remainder)
  a = listings % cars_per_page
  if a == 0
    listings / cars_per_page
  else
    listings / cars_per_page + 1
  end
end

def build_full_cars(number_of_pages)
  # builds an array of car hashes for each page of listings, starting on page 2
  a = [*2..number_of_pages]
  all_page_urls = get_all_page_urls(a)
  all_page_urls.map do |url|
    pu = parse_url(url)
    cars = pu.css('div.searchResults')
    create_car_hash(cars)
  end
end
```


I ended up making four different helper methods:

- create_car_hash builds a hash of the values we need for each listing
- get_all_page_urls does what it sounds like! It builds a URL for each page number it’s given, which lets us factor in pagination
- get_number_of_pages is pretty self-explanatory: it works out how many pages of listings exist, which also helps us handle pagination
- build_full_cars gives us an array of car hashes from page 2 and beyond
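The arithmetic in get_number_of_pages is ceiling division: divide, then round up whenever there’s a remainder. Here’s a compact equivalent as a standalone sketch; page_count is a hypothetical name, and the example uses the 120 listings and 10 cars per page that come up in this post.

```ruby
# Hypothetical one-line equivalent of get_number_of_pages: ceiling division.
# Adding (per_page - 1) before dividing rounds up whenever there's a remainder.
def page_count(listings, per_page)
  raise ArgumentError, "per_page must be positive" unless per_page.positive?
  (listings + per_page - 1) / per_page
end

puts page_count(120, 10) # 120 listings fill exactly 12 pages
puts page_count(121, 10) # one leftover listing needs a 13th page
puts page_count(0, 10)   # no listings, no pages
```

The ArgumentError guard isn’t in the original method, which would raise ZeroDivisionError if cars_per_page were 0.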

Let’s put it all together in the #scrape method so that we can get what we want. At the end, this is what my code looks like:

```ruby
require 'nokogiri'
require 'httparty'
require 'byebug'
require 'pry'

class Scraper
  attr_reader :url, :make, :model

  def initialize(make, model)
    @make = make.capitalize
    @model = model.capitalize
    @url = "https://www.dupontregistry.com/autos/results/#{make}/#{model}/for-sale".sub(" ", "--")
  end

  # Fetches a page with HTTParty and parses its HTML with Nokogiri
  def parse_url(url)
    unparsed_page = HTTParty.get(url)
    Nokogiri::HTML(unparsed_page.body)
  end

  def scrape
    parsed_page = parse_url(@url)
    cars = parsed_page.css('div.searchResults') # Nokogiri object containing all cars on a given page
    return [] if cars.empty? # no listings: nothing to paginate (and no dividing by zero below)
    per_page = cars.count # counts the number of cars on each page, should be 10
    total_listings = parsed_page.css('#mainContentPlaceholder_vehicleCountWithin').text.to_i
    total_pages = get_number_of_pages(total_listings, per_page)
    first_page = create_car_hash(cars)
    all_other = build_full_cars(total_pages)
    first_page + all_other.flatten
  end

  def create_car_hash(car_obj)
    car_obj.map do |car|
      {
        year: car.css('a').children[0].text[0..4].strip.to_i,
        name: @make,
        model: @model,
        price: car.css('.cost').children[1].text.delete(",").to_i,
        link: "https://www.dupontregistry.com/#{car.css('a').attr('href').value}"
      }
    end
  end

  def get_all_page_urls(array_of_ints)
    array_of_ints.map { |number| @url + "/pagenum=#{number}" }
  end

  def get_number_of_pages(listings, cars_per_page)
    a = listings % cars_per_page
    if a == 0
      listings / cars_per_page
    else
      listings / cars_per_page + 1
    end
  end

  def build_full_cars(number_of_pages)
    a = [*2..number_of_pages]
    all_page_urls = get_all_page_urls(a)
    all_page_urls.map do |url|
      pu = parse_url(url)
      cars = pu.css('div.searchResults')
      create_car_hash(cars)
    end
  end
end
```


It may seem a little weird to build the hashes for the first page and for the rest of the pages separately (the first_page + all_other.flatten line at the end of #scrape), and you could probably consolidate this, but the first page’s URL is a little different, and that page is where we read the cars-per-page and total-listings numbers that set everything else up.
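If you did want to consolidate, one option is to treat page 1 as the base URL and every later page as the base URL plus the "/pagenum=N" suffix from get_all_page_urls, then flat_map over all pages. This is a sketch under those assumptions: page_url and scrape_all_pages are hypothetical names, and the fetch_page lambda stands in for parse_url followed by create_car_hash so the example runs without touching the network.

```ruby
# Hypothetical: page 1 lives at the base URL; later pages add "/pagenum=N".
def page_url(base_url, page)
  page == 1 ? base_url : "#{base_url}/pagenum=#{page}"
end

# fetch_page is any callable that takes a URL and returns an array of car
# hashes (in the real scraper: parse_url, then create_car_hash).
def scrape_all_pages(base_url, total_pages, fetch_page)
  (1..total_pages).flat_map { |page| fetch_page.call(page_url(base_url, page)) }
end

# Fake fetcher for demonstration: one "car" per page, tagged with its URL.
fake_fetch = ->(url) { [{ link: url }] }
cars = scrape_all_pages("https://example.com/for-sale", 3, fake_fetch)
cars.each { |car| puts car[:link] }
```

The trade-off is that total_pages is itself read from page 1, so a real version would want to reuse that already-parsed page instead of downloading it a second time.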

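Let’s run this quickly to make sure it does what we want it to do. The final file no longer contains a binding.pry, so add one back in (as in the earlier version) before running ruby scraper.rb. Then, in the pry session:

```ruby
bentley = Scraper.new("bentley", "continental gt")
bentley.scrape
```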

Our output is massive! I had to truncate it a bit. This is what I got:

```ruby
[{:year=>2017,
  :name=>"Bentley",
  :model=>"Continental gt",
  :price=>179910,
  :link=>"https://www.dupontregistry.com//autos/listing/2017/bentley/continental--gt/2080775"},
 {:year=>2012,
  :name=>"Bentley",
  :model=>"Continental gt",
  :price=>86200,
  :link=>"https://www.dupontregistry.com//autos/listing/2012/bentley/continental--gt/2070330"},
 {:year=>2016,
  :name=>"Bentley",
  :model=>"Continental gt",
  :price=>135988,
  :link=>"https://www.dupontregistry.com//autos/listing/2016/bentley/continental--gt/2077824"},
 {:year=>2016,
  :name=>"Bentley",
  :model=>"Continental gt",
  :price=>139989,
  :link=>"https://www.dupontregistry.com//autos/listing/2016/bentley/continental--gt/2086794"},
 {:year=>2016,
  :name=>"Bentley",
  :model=>"Continental gt",
  :price=>0,
  :link=>"https://www.dupontregistry.com//autos/listing/2016/bentley/continental--gt--speed/2086825"},
 {:year=>2015,
  :name=>"Bentley",
  :model=>"Continental gt",
  :price=>119888,
  :link=>"https://www.dupontregistry.com//autos/listing/2015/bentley/continental--gt/2086890"},
 ...}]
```


If we call .length on this array, we indeed get 120, which matches our number of listings. Perfect!

There are still many methods we would want to build into this to make it useful. For example, we could create a method that returns the link of the cheapest Bentley currently listed, or one that calculates the average asking price. You could even beautify this code quite a bit, as it’s far from perfect. However, I’m going to stop here for today before this article becomes War and Peace! Here’s a link to the GitHub repository if you’d like to use it for inspiration for your own web scraper.
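As a taste of those follow-up methods, here’s a sketch that finds the cheapest listing and the average asking price. It runs on the six listings copied from the output above (price and link only), and it assumes that a price of 0 means no price was listed, so those listings are skipped. The method names are hypothetical.

```ruby
# Listings copied from the scraper output above (price and link only).
cars = [
  { price: 179910, link: "https://www.dupontregistry.com//autos/listing/2017/bentley/continental--gt/2080775" },
  { price: 86200,  link: "https://www.dupontregistry.com//autos/listing/2012/bentley/continental--gt/2070330" },
  { price: 135988, link: "https://www.dupontregistry.com//autos/listing/2016/bentley/continental--gt/2077824" },
  { price: 139989, link: "https://www.dupontregistry.com//autos/listing/2016/bentley/continental--gt/2086794" },
  { price: 0,      link: "https://www.dupontregistry.com//autos/listing/2016/bentley/continental--gt--speed/2086825" },
  { price: 119888, link: "https://www.dupontregistry.com//autos/listing/2015/bentley/continental--gt/2086890" }
]

# Assumption: a price of 0 means "no price listed", so ignore those listings.
def priced(cars)
  cars.reject { |car| car[:price].zero? }
end

# Link of the lowest-priced listing, or nil if nothing has a price.
def cheapest_link(cars)
  cheapest = priced(cars).min_by { |car| car[:price] }
  cheapest && cheapest[:link]
end

# Mean asking price as a Float, or nil if nothing has a price.
def average_price(cars)
  prices = priced(cars).map { |car| car[:price] }
  return nil if prices.empty?
  prices.sum.fdiv(prices.length)
end

puts cheapest_link(cars)
puts average_price(cars)
```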
