How to export Datastores from multiple projects using Google Dataflow, with additional filtering of entities.

This is a short extension to my previous story, where I described how to incrementally export data from Datastore to BigQuery. Here, I show how to extend that solution to the case where your Datastores live in multiple projects. The goal remains the same: we want the data in BigQuery.

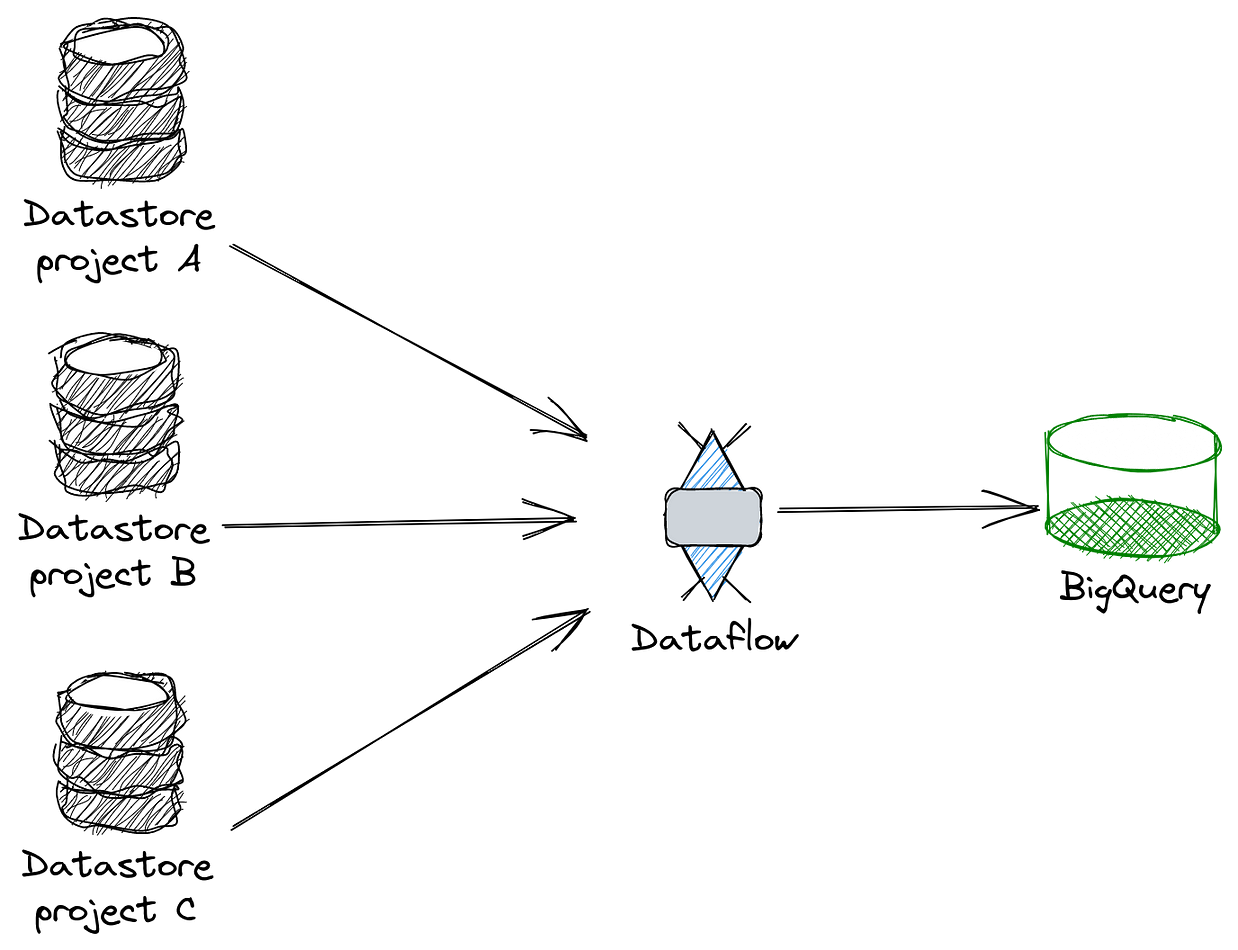

Overall, the problem can be summarized with the following diagram:

Sketch of the architecture (by author)

The Dataflow pipeline can run either in one of the source projects or in a separate project; here I put it in a separate project. The results can be written to a BigQuery dataset located either in the same project as the Dataflow pipeline or in another project.
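To make the two placement options concrete, here is a minimal sketch in plain Python that only plans the work: which (source project, entity kind) pairs the pipeline reads and which BigQuery table each lands in. It assumes every source project is read with the same list of kinds and that entities of one kind from all projects share a table. The names `plan_export`, `ReadTask`, the fallback to the pipeline's own project, and the one-table-per-kind naming are hypothetical illustrations, not the original solution. Table specs use the `PROJECT:DATASET.TABLE` form that Beam's `WriteToBigQuery` accepts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ReadTask:
    """One (source project, entity kind) pair to read from Datastore."""
    source_project: str
    kind: str
    table_spec: str  # BigQuery destination in PROJECT:DATASET.TABLE form


def plan_export(
    source_projects: list[str],
    kinds: list[str],
    pipeline_project: str,
    dataset: str,
    destination_project: Optional[str] = None,
) -> list[ReadTask]:
    """Build a read/write plan for a pipeline running in its own project.

    Hypothetical design: if no destination project is given, the BigQuery
    output goes to the same project as the Dataflow job; otherwise it goes
    to the given project. Entities of the same kind from different source
    projects are written to one shared table named after the kind.
    """
    target_project = destination_project or pipeline_project
    return [
        ReadTask(project, kind, f"{target_project}:{dataset}.{kind}")
        for project in source_projects
        for kind in kinds
    ]


if __name__ == "__main__":
    plan = plan_export(
        source_projects=["shop-eu", "shop-us"],  # hypothetical project IDs
        kinds=["Order"],
        pipeline_project="etl-project",
        dataset="datastore_export",
    )
    for task in plan:
        print(task)
```

If rows from several projects share one table like this, you would probably also want to store the source project as a column so the rows remain distinguishable.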

Generalization

Let’s begin with the generalization. First, I extended the config file with two new fields: SourceProjectIDs, which is simply a list of source GCP projects, and Destination, which defines where the output BigQuery dataset lives.
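The config file itself is not shown here, so the following is only a minimal sketch assuming a JSON config. The field names SourceProjectIDs and Destination come from the text; the `ProjectID` and `Dataset` sub-keys, the `load_config` helper, and the dataclasses are hypothetical. It parses the two new fields, drops duplicate project IDs while keeping their order, and rejects an empty project list.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Destination:
    """Where the output BigQuery dataset lives (hypothetical sub-keys)."""
    project_id: str
    dataset: str


@dataclass(frozen=True)
class ExportConfig:
    source_project_ids: tuple[str, ...]
    destination: Destination


def load_config(text: str) -> ExportConfig:
    """Parse a JSON config and validate the two multi-project fields."""
    raw = json.loads(text)
    # dict.fromkeys removes duplicate project IDs but preserves their order.
    projects = tuple(dict.fromkeys(raw["SourceProjectIDs"]))
    if not projects:
        raise ValueError("SourceProjectIDs must list at least one GCP project")
    dest = raw["Destination"]
    return ExportConfig(
        source_project_ids=projects,
        destination=Destination(project_id=dest["ProjectID"], dataset=dest["Dataset"]),
    )


# Hypothetical example config; project and dataset names are placeholders.
EXAMPLE = """
{
  "SourceProjectIDs": ["shop-eu", "shop-us"],
  "Destination": {"ProjectID": "analytics", "Dataset": "datastore_export"}
}
"""

if __name__ == "__main__":
    cfg = load_config(EXAMPLE)
    print(cfg.source_project_ids, cfg.destination)
```

Keeping Destination as a small nested object makes it easy to point the output at the Dataflow project or at a different one without touching the list of source projects.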
