Find out why you should change your job and become a Philosopher

This week I came across several articles that challenge the development and use of AI-based systems across several domains.

I’ve never had to genuinely reflect on the philosophical and legal aspects of my contributions as a machine learning practitioner, but that changed after I read some interesting articles on the consequences of AI advancement, both those happening now and those yet to come.

Our lives today could look entirely different tomorrow.

This week the articles I found interesting covered the following topics:

- Why Philosophers will be the last ones standing

- Facial recognition in the legal spotlight

- How to develop an effective data science portfolio

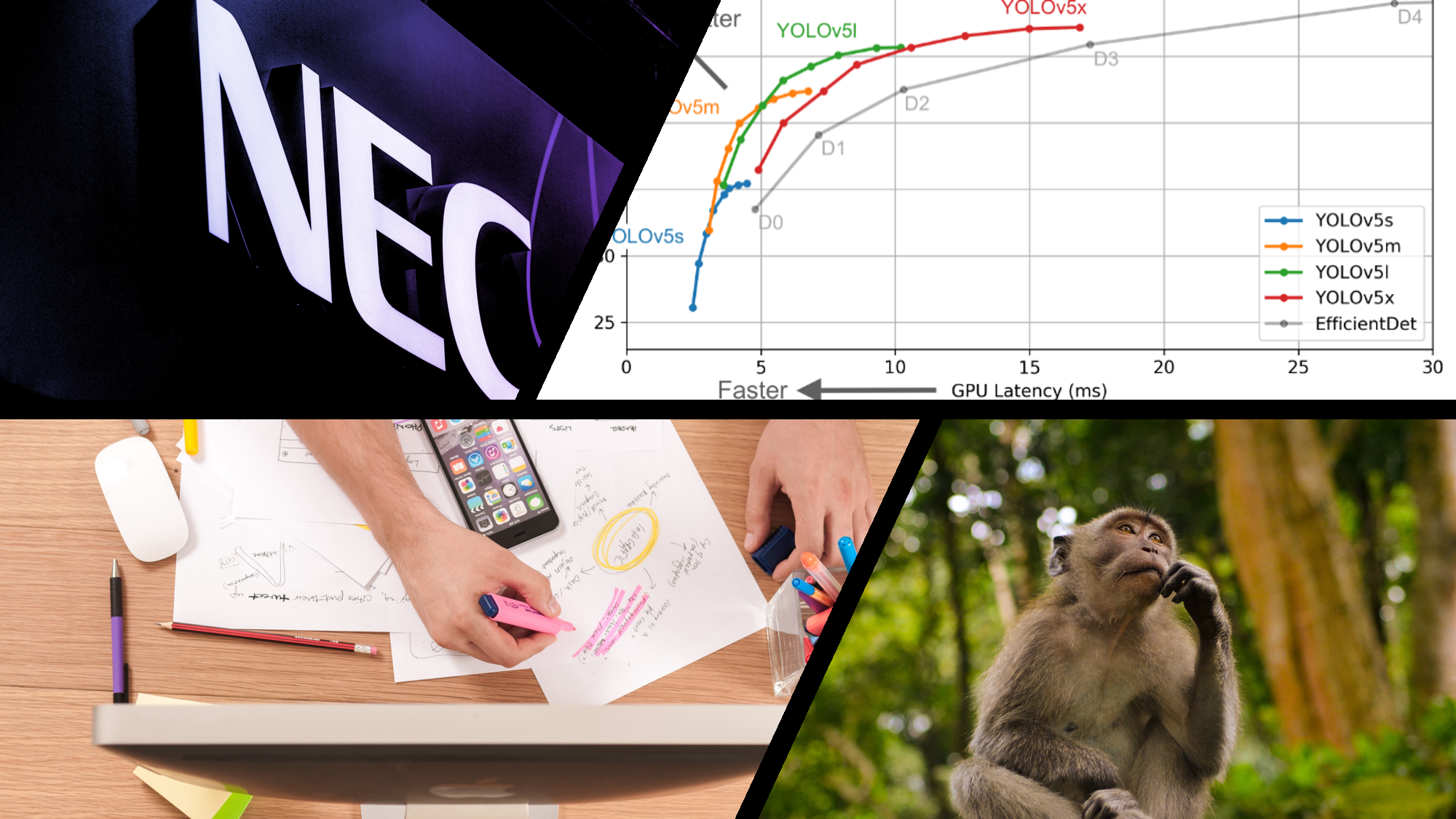

- A battle between object detection algorithms

Cover images of the articles included

A Facial Recognition Giant Refuses to Share Details About Its Algorithm Dataset by Dave Gershgorn

Would you let a machine learning model that has a failure rate of 98% and a false positive rate of 81% into production?

These are the reported performance figures of a facial recognition system used by police forces in South Wales and other parts of the United Kingdom.

Dave Gershgorn’s article opens with a scene reminiscent of a dystopian future in which an overseeing governing system monitors everyone, which, unsettlingly, may foreshadow a not-too-distant future.

South Wales Police have been using facial recognition systems since 2017 and have done so openly. They have made arrests as a result of the system’s matches.

On the surface, using the technology to combat crime and support effective policing doesn’t raise any alarms, but the accuracy metrics and audit results of the systems used to make arrests paint a more startling picture.

Dave’s article covers a lawsuit brought against the authorities using the facial recognition software. The lawsuit cites the system’s inherent algorithmic racial bias and its ineffectiveness, a subject that has made headlines lately.

Still, the main point of Dave’s coverage is to bring to light that NEC, the provider of the facial recognition tool, is unwilling to reveal details of its dataset.

Even the police who use the tool have no idea how the system was trained. In my opinion, it makes sense to disclose the data used to train systems that directly or indirectly affect the public, especially in policing.

Dave’s article also notes the UK’s influence across the rest of Europe and the world: its actions and views on facial recognition could set a precedent for how other European countries adopt and use the technology.

The latter half of Dave’s article briefly covers other articles that tackle complex problems in autonomous vehicles.

Read this article to learn about the legal consequences and actions being taken against companies developing AI systems used in public settings.
