While most of us can keep count while exercising or taking a pulse, it would be great to have something that keeps count for us and even provides useful information about the repeated action. This is especially true for actions whose period is too long to watch, like planetary cycles, or too short to follow, like a manufacturing belt.

A recent paper by the Google Research and DeepMind team, published at CVPR 2020, called **Counting Out Time: Class Agnostic Video Repetition Counting in the Wild**, addresses this interesting problem.

They employ a pretty “simple” approach to identifying and counting repeated actions and, eventually, predicting the periodicity of the action. The main hurdle the authors identify, however, is curating a large enough dataset to do this. The main contributions of the paper[1] are therefore:

- Releasing Countix: A new video repetition counting dataset which is ∼ 90 times larger than the previous largest dataset

- Releasing RepNet: A neural network architecture for counting repetitions and measuring their periodicity in videos “in the wild”[1], which outperforms previous state-of-the-art methods

- Generating synthetic, augmented repetition videos from unlabeled clips that can be used for training
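
To make the last point concrete, here is a minimal sketch of one plausible way to turn an unlabeled clip into a labeled repeating video: loop a short segment several times and keep the surrounding frames as non-repeating context. The function name `make_synthetic_repetition`, its parameters, and the per-frame label format are assumptions made for illustration, not the authors' actual pipeline, and the augmentations mentioned above are left out.

```python
from typing import List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")  # a frame can be any object: an image array, a file path, ...


def make_synthetic_repetition(
    frames: Sequence[Frame], start: int, period: int, count: int
) -> Tuple[List[Frame], List[int]]:
    """Loop frames[start:start + period] `count` times inside the clip.

    Returns the new frame list and a per-frame label: the period length
    for frames inside the repeated part, 0 for non-repeating frames.
    (Hypothetical helper, not taken from the paper's code.)
    """
    if period <= 0 or count <= 0:
        raise ValueError("period and count must be positive")
    if start < 0 or start + period > len(frames):
        raise ValueError("segment does not fit inside the clip")

    prefix = list(frames[:start])                  # non-repeating lead-in
    segment = list(frames[start:start + period])   # the part we loop
    suffix = list(frames[start + period:])         # non-repeating tail

    video = prefix + segment * count + suffix
    labels = [0] * len(prefix) + [period] * (period * count) + [0] * len(suffix)
    return video, labels


# Stand-in "frames": integers instead of images.
frames = list(range(10))
video, labels = make_synthetic_repetition(frames, start=2, period=3, count=4)
print(len(video), labels.count(3) // 3)  # 19 frames, 4 repetitions
```

Because the count and period are chosen when the video is built, the labels come for free, which is what makes unlabeled clips usable as training data in a scheme like this.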

The Countix Dataset

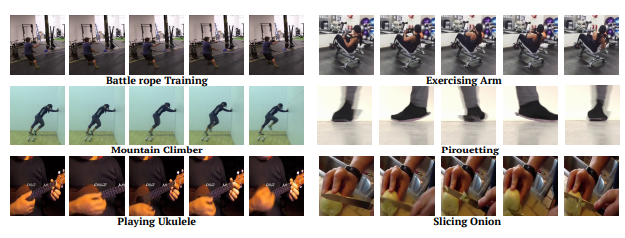

Samples in Countix from [1]

Before anything else, the authors propose a new, HUGE dataset of annotated videos with repeating actions, since the existing repetition datasets are just too small.
