Do you ever wonder why people still use SQL when there are Python, pandas, and all the other tools for working with Big Data? Yes, the pandas library can do nearly everything that SQL can, sometimes even better, except for one thing: it cannot connect to remote or local databases all by itself.

Even though flat files are ideal for small datasets, they suck at dealing with massive amounts of information. They don’t offer as much flexibility or speed as a database. They also lack one key aspect that database tables have: you can’t make them **communicate** with each other.

Learning how to pull data from remote database servers into nice-looking [pandas](https://pandas.pydata.org/) DataFrames is a must-have skill for any data scientist. This article teaches you how to connect to three different database types: MySQL, PostgreSQL, and SQLite.

Tip: I have linked the documentation for each function and package used in the code snippets. Be sure to check it out, too.

Connecting to PostgreSQL databases in Python

Endangered, majestic creatures doing our bidding. Image by EDB

The first in our list of the most common relational database management systems is PostgreSQL.

We will use a module called [psycopg2](https://www.psycopg.org/docs/). It is a PostgreSQL adapter that provides all the functions you need to connect to a remote or local database server and query it for data.

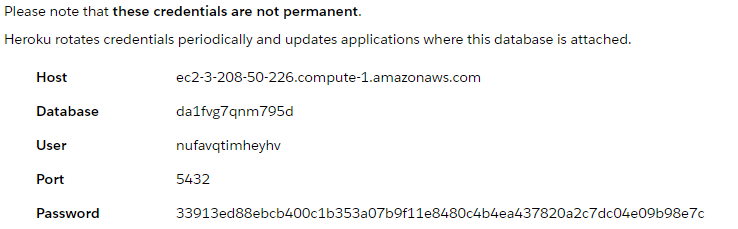

Every PostgreSQL database has its own credentials for each user. To connect using Python, you will need 4 arguments: database name, hostname, username, and password. I created a sample database on Heroku for illustration purposes, and all four values are listed on the credentials page of my database.
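To make those four arguments concrete, here is a minimal sketch of how they might be passed to `psycopg2.connect`. The helper names `build_connection_params` and `fetch_server_version` are hypothetical, and so are the placeholder defaults; I am also assuming the credentials are stored in the `PGDATABASE`, `PGHOST`, `PGUSER`, and `PGPASSWORD` environment variables rather than hard-coded. Swap in the values from your own credentials page.

```python
import os


def build_connection_params(env=None):
    """Collect the four connection arguments into a dict.

    The defaults are placeholders, not real credentials.
    """
    env = os.environ if env is None else env
    return {
        "dbname": env.get("PGDATABASE", "sample_db"),
        "host": env.get("PGHOST", "localhost"),
        "user": env.get("PGUSER", "sample_user"),
        "password": env.get("PGPASSWORD", ""),
    }


def fetch_server_version(params):
    """Open a connection, run one trivial query, and close the connection."""
    # Imported here so the helper above works even without psycopg2 installed.
    import psycopg2

    conn = psycopg2.connect(**params)
    try:
        with conn.cursor() as cur:
            cur.execute("SELECT version();")
            return cur.fetchone()[0]
    finally:
        conn.close()


# Example usage (needs a reachable PostgreSQL server):
# print(fetch_server_version(build_connection_params()))
```

Keeping the credentials outside the script is a deliberate choice here: it lets you share the code without leaking your password.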
